Today’s version of XKCD’s “my code is compiling” comic is “my tests are running”. Engineering time is expensive, and an engineer waiting on a slow test run is just as idle as one waiting on a slow compile. At Bolt, we use CircleCI as our continuous integration (CI) tool, and we have been pushing hard to make our test suites faster, easier to work with, and more useful. This post explains how we reduced both the run time and the configuration complexity of our CI and integration test pipelines, and highlights what we learned along the way.

How we test at Bolt

We use two types of test workflows at Bolt. The first are unit tests that run against each PR and on every commit to master. The second are integration tests that run against each of our environments after every deploy. The former are rarely flaky: if they fail, the failure probably warrants investigation. The latter are browser automation tests that, by nature, tend to be unreliable. Let’s face it, when working with browser automation tests we’re always playing whack-a-mole with flakes. Even if you manage to stabilize your existing tests, your new tests are bound to introduce more chaos. So we pursued a different optimization strategy for each of the two types of tests to make both more useful.

CI test improvements: reducing test time by 3x

Since CI tests run against every PR, we want to tell developers which test failed, if any, as soon as possible. When we evaluated our CI workflows, we found that our build pipeline took about 15 minutes for the backend and 20 minutes for the frontend. We knew we could do better.

Parallelizing tests based on runtime

The first thing we noticed was that we had sharded the tests for our Go packages logically, by service. As a result, some smaller services finished testing in 2 minutes while the bigger services took nearly 16 minutes, and the slowest shard set the pace for the whole build. With CircleCI’s parallelism and time-based test splitting, we were able to rebalance the shards so that each takes roughly the same time. This snippet of a CircleCI command shows how we split all of our Go packages:

```yaml
commands:
  make-check:
    description: Runs tests
    steps:
      # Try the exact module cache first, then fall back to older caches by key prefix.
      - restore_cache:
          keys:
            - go-mod-1-13-v1-{{ checksum "go.mod" }}-{{ checksum "go.sum" }}
            - go-mod-1-13-v1-{{ checksum "go.mod" }}
            - go-mod-1-13-v1-
      - run:
          name: Go tests for hail
          shell: /bin/bash
          command: |
            set -e
            cd /home/circleci/project/
            # Improve sharding: https://github.com/golang/go/issues/33527
            # List every package, then keep only this container's share, balanced by past timings.
            PACKAGES="$(go list ./... | circleci tests split --split-by=timings --timings-type=classname)"
            # Collapse the package list onto a single space-separated line.
            export PACKAGE_NAMES=$(echo $PACKAGES | tr -d '\n')
            export BUILD_NUM=$CIRCLE_BUILD_NUM
            export SHA1=$CIRCLE_SHA1
            echo "Testing the following packages:"
            echo $PACKAGE_NAMES
            # Write a JUnit report and a coverage profile; -p 1 runs one test binary at a time.
            gotestsum --junitfile $ARTIFACTS_DIR/report.xml -- -covermode=count -coverprofile=$ARTIFACTS_DIR/coverage_tmp.out -p 1 $PACKAGE_NAMES
      # Caches are immutable, so this is a no-op when the key already exists.
      - save_cache:
          key: go-mod-1-13-v1-{{ checksum "go.mod" }}-{{ checksum "go.sum" }}
          paths:
            - "/go/pkg/mod"
```

Let’s walk through what we did.

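First, we restore the Go module cache so that we don’t have to download Go modules on every run. The cache is keyed by the hashes of the go.mod and go.sum files. If there is no exact match, restore_cache falls back to the later, shorter keys, which are matched as prefixes, and restores the most recently saved matching cache; if nothing matches, the step does nothing.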
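To make that fallback concrete, here is a small Bash simulation of the documented matching rule: keys are tried in order, each is matched as a prefix, and the most recently saved match wins. The `pick_cache` helper and the `MODA`/`SUMOLD`-style strings (stand-ins for real checksums) are hypothetical, and this is a sketch of the behavior, not CircleCI’s implementation.

```bash
#!/usr/bin/env bash
# Hypothetical simulation of restore_cache key matching; not CircleCI's code.
# $1: existing cache keys, newest first, one per line. Remaining args: keys to try.
# Prints the chosen cache key, or returns 1 if nothing matches.
pick_cache() {
  local existing="$1"
  shift
  local key cache
  for key in "$@"; do
    # Caches are listed newest first, so the first prefix match is the most recent.
    while IFS= read -r cache; do
      if [[ -n "$cache" && "$cache" == "$key"* ]]; then
        printf '%s\n' "$cache"
        return 0
      fi
    done <<< "$existing"
  done
  return 1  # No match: the job would download modules from scratch.
}

# MODA/MODOLD/SUMOLD/SUMNEW stand in for real checksums of go.mod and go.sum.
existing_caches=$'go-mod-1-13-v1-MODA-SUMOLD\ngo-mod-1-13-v1-MODOLD-SUMOLD'

# go.sum changed, so the exact key misses, but the go.mod-only prefix still hits.
pick_cache "$existing_caches" \
  "go-mod-1-13-v1-MODA-SUMNEW" \
  "go-mod-1-13-v1-MODA" \
  "go-mod-1-13-v1-"
# prints: go-mod-1-13-v1-MODA-SUMOLD
```

The last key, go-mod-1-13-v1-, acts as a catch-all: even when both files change, some recent module cache is restored, and go only has to download the difference.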

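The run step lists all the packages, uses circleci tests split to divide them across the parallel containers based on their historical run times, and runs the tests with gotestsum. gotestsum can generate JUnit reports, which make it easy to see which tests failed. (CircleCI’s timing-based splitting relies on timing data from test results uploaded in earlier runs with a store_test_results step, which isn’t shown in this snippet.)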

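Finally, we save the cache under a key derived from the current go.mod and go.sum. CircleCI caches are immutable, so this step does nothing if a cache with that key already exists; in practice, it only uploads a new cache when those files change.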

This alone brought our workflow time down from 15 minutes to 5 minutes. The major bonus here is that we can simply increase our parallelism count as the number of tests grows.
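To illustrate why splitting by timings balances shards better than splitting by service, here is a sketch of one common heuristic, greedy “longest first” assignment: sort packages by their last recorded duration and give each one to the container with the smallest running total. This is an assumption for illustration only, not the algorithm CircleCI uses; the `split_by_timings` helper, package names, and timings are made up.

```bash
#!/usr/bin/env bash
# Illustrative greedy "longest first" split; NOT CircleCI's actual algorithm.
# stdin: "<package> <seconds>" per line (past timings).
# $1: total number of containers, $2: this container's index (0-based).
# stdout: the packages this container should test.
split_by_timings() {
  local total="$1" index="$2"
  # Longest packages first (ties broken by name), so big items are placed early.
  sort -k2,2nr -k1,1 | awk -v n="$total" -v me="$index" '
    BEGIN { for (i = 0; i < n; i++) load[i] = 0 }
    {
      # Give this package to the container with the least accumulated time.
      best = 0
      for (i = 1; i < n; i++) if (load[i] < load[best]) best = i
      load[best] += $2
      if (best == me) print $1
    }'
}

# Made-up timings for five packages.
timings=$'pkg/big 900\npkg/medium 300\npkg/small-a 120\npkg/small-b 120\npkg/small-c 60'

split_by_timings 2 0 <<< "$timings"   # container 0 gets pkg/big (900s of work)
split_by_timings 2 1 <<< "$timings"   # container 1 gets the other four (600s of work)
```

Note that no split can make a container finish faster than the single slowest package (pkg/big here), so adding parallelism helps only until the largest package dominates.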

We could have stopped there, but we thought we’d try and see what else we could do while we were at it.

It turned out that one of our setup steps, which installed some tools we needed, took about a minute. We built a new image with all of those tools preinstalled, which cut our build times by 30 to 45 seconds. One caveat: CircleCI pulls commonly used images faster because they are used more often and are therefore more likely to be cached, so before you decide to build a custom image, measure how much time it actually saves.

Integration test improvements: saving time and reducing code complexity

Integration tests are a whole other beast. We run two types of browser automation tests: local tests that use a headless browser like Chrome, and remote tests that use a service like Browserstack or Sauce Labs. Local tests are limited only by our CircleCI plan, but remote browser tests can get expensive because the price scales with how many tests you run in parallel. Our remote browser automation tests run on CircleCI, and Browserstack hosts the remote browsers.

Splitting tests while optimizing for reruns

We run integration tests against three environments: staging, sandbox, and prod. We run the most tests on sandbox, fewer on staging, and only a small subset on prod. We need to be able to trigger our integration tests via an API, and since v1 of the CircleCI API did not support this, we had built a very complicated system to get what we needed. With the new v2 API, we were able to reorganize our workflows in a way that is easier to understand, faster, and much easier to work with.

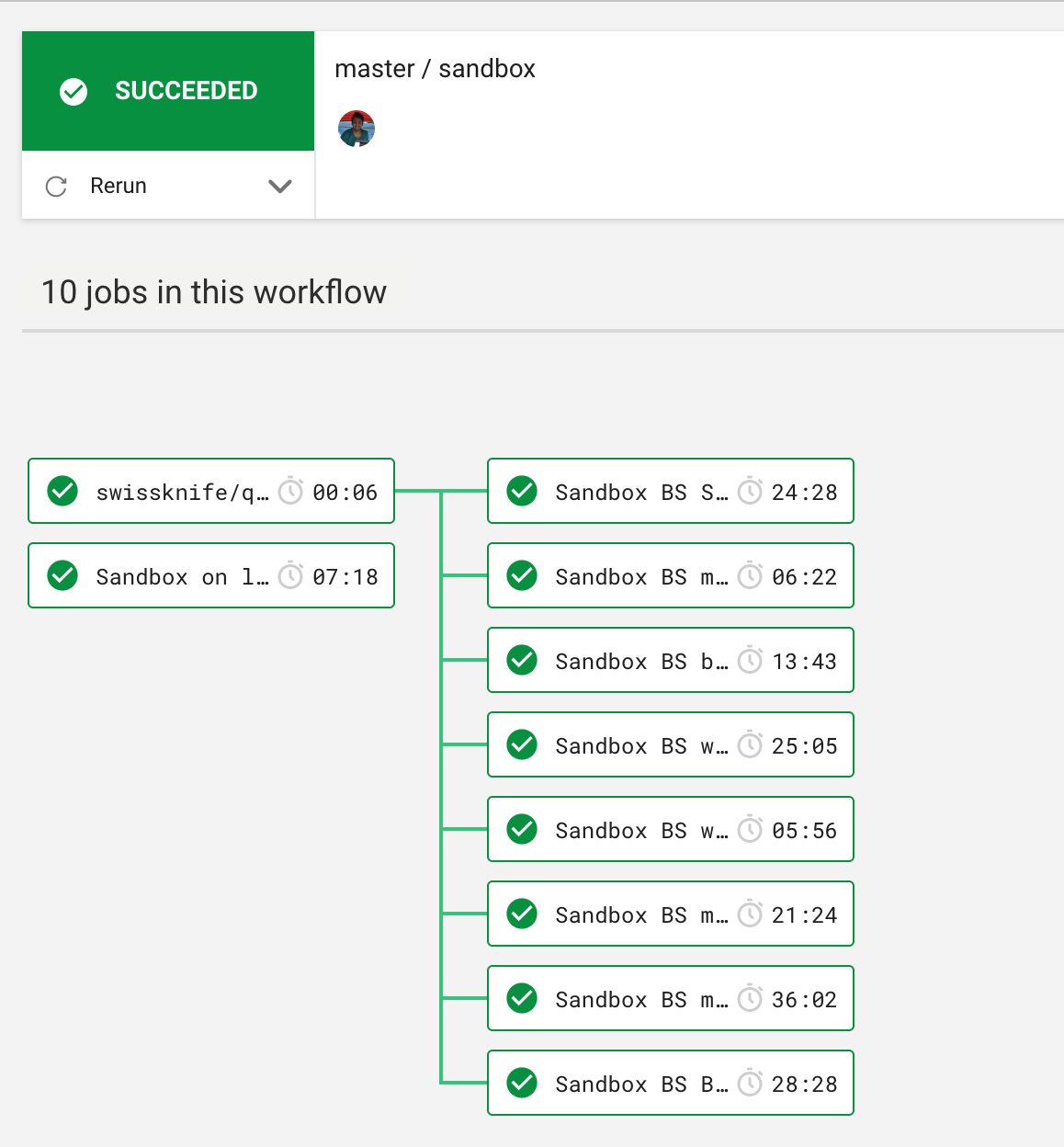

Previously, we had one job that ran hundreds of remote tests on three browser variants. If even one test failed, we had to rerun the whole suite, which took more than an hour. We broke this down into eight jobs, so now we can rerun only the jobs that failed. Our new workflow is pretty simple, and is shown below.

Workflows for when new tests are added

One type of integration test workflow runs on every pull request to the integration test repo to make sure that any tests we add or modify are valid. It runs only local headless browser tests, and we keep it as lightweight as possible.

Workflows for promoting builds

These integration tests run on master against one of our environments: staging, sandbox, or prod. They need to be more rigorous, so this is where we use multiple browsers on Browserstack. Browserstack also caps how many tests can run in parallel, and it fails tests beyond that cap instead of queuing them, so we build a makeshift queue on CircleCI: workflows that use Browserstack wait for each other instead of running at the same time.
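Conceptually, such a queue only needs each Browserstack workflow to wait while any other matching workflow is still in progress, and to give up after a maximum wait. The Bash sketch below shows that idea under our own assumptions: the helper names, the `<workflow-name> <status>` input format, and the listing command are hypothetical (in practice the listing would come from the CircleCI API), and this is not a description of how any particular orb implements it.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of a makeshift queue for workflows that share Browserstack.
# must_wait reads "<workflow-name> <status>" lines for the OTHER workflows in the
# project and succeeds (exit 0) if any matching workflow is still in progress.
must_wait() {
  local name_regex="$1" name status
  while read -r name status; do
    if [[ "$name" =~ $name_regex && ( "$status" == "running" || "$status" == "failing" ) ]]; then
      return 0
    fi
  done
  return 1
}

# wait_for_turn polls a caller-supplied listing command (the remaining args) until
# no matching workflow is in progress, giving up after max_wait seconds.
wait_for_turn() {
  local name_regex="$1" max_wait="$2" interval="$3"
  shift 3
  (( interval > 0 )) || interval=1  # Avoid spinning forever with a zero interval.
  local waited=0
  while "$@" | must_wait "$name_regex"; do
    if (( waited >= max_wait )); then
      echo "Gave up waiting after ${waited}s" >&2
      return 1
    fi
    sleep "$interval"
    waited=$(( waited + interval ))
  done
  return 0
}
```

Anchoring the regex, as in ^(staging|production|sandbox)$, matters: a workflow such as staging-local-chrome, which only uses local Chrome, does not match and so never blocks the queue.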

This snippet shows how we queue the remote Browserstack tests without blocking the headless Chrome tests that run within CircleCI:

```yaml
version: 2.1

orbs:
  swissknife: roopakv/swissknife@0.8.0

# These parameters are used to run various workflows via the API and
# are not used directly for every PR. By setting the below params
# we can, for example, run only staging integration tests.
parameters:
  run_base_tests:
    type: boolean
    default: true
  run_cdstaging_integration_tests:
    type: boolean
    default: false
  run_staging_integration_tests:
    type: boolean
    default: false
  run_sandbox_integration_tests:
    type: boolean
    default: false
  run_production_integration_tests:
    type: boolean
    default: false

workflows:
  # This workflow is run on master changes merged to this repo
  build:
    when: << pipeline.parameters.run_base_tests >>
    jobs:
      - test-on-chrome:
          name: Staging on local chrome
          context: integration-tests-staging-context
      - test-on-chrome:
          name: Sandbox on local chrome
          context: integration-tests-sandbox-context
      - test-api:
          name: Staging API
          context: integration-tests-staging-context

  staging-local-chrome:
    when: << pipeline.parameters.run_cdstaging_integration_tests >>
    jobs:
      - test-on-chrome:
          name: Staging on local chrome
          context: integration-tests-staging-context

  # This workflow is triggered via the API to run tests against the
  # staging environment on browserstack and local chrome.
  staging:
    when: << pipeline.parameters.run_staging_integration_tests >>
    jobs:
      - swissknife/queue_up_workflow:
          max-wait-time: "1800"
          workflow-name: "^(staging|production|sandbox)$"
      - test-on-chrome:
          name: Staging on local chrome
          context: integration-tests-staging-context
      - bs-base:
          name: Staging BS base tests
          context: integration-tests-staging-context
          requires:
            - swissknife/queue_up_workflow
      - bs-shopify:
          name: Staging BS shopify tests
          context: integration-tests-staging-context
          requires:
            - swissknife/queue_up_workflow
      - bs-bigcommerce:
          name: Staging BS bigcommerce tests
          context: integration-tests-staging-context
          requires:
            - swissknife/queue_up_workflow
      - test-api:
          name: Staging API
          context: integration-tests-staging-context

  # This workflow is triggered via the API to run tests against the
  # sandbox environment on browserstack and local chrome.
  sandbox:
    when: << pipeline.parameters.run_sandbox_integration_tests >>
    jobs:
      - swissknife/queue_up_workflow:
          max-wait-time: "1800"
          workflow-name: "^(staging|production|sandbox)$"
      - test-on-chrome:
          name: Sandbox on local chrome
          context: integration-tests-sandbox-context
      - bs-base:
          name: Sandbox BS Base tests
          context: integration-tests-sandbox-context
          requires:
            - swissknife/queue_up_workflow
      - bs-shopify:
          name: Sandbox BS Shopify tests
          context: integration-tests-sandbox-context
          requires:
            - swissknife/queue_up_workflow
      - bs-bigcommerce:
          name: Sandbox BS bigcommerce tests
          context: integration-tests-sandbox-context
          requires:
            - swissknife/queue_up_workflow
      - bs-woocommerce:
          name: Sandbox BS woocommerce tests
          context: integration-tests-sandbox-context
          requires:
            - swissknife/queue_up_workflow
      - bs-miva:
          name: Sandbox BS miva tests
          context: integration-tests-sandbox-context
          requires:
            - swissknife/queue_up_workflow
      - bs-magento1:
          name: Sandbox BS magento1 tests
          context: integration-tests-sandbox-context
          requires:
            - swissknife/queue_up_workflow
      - bs-magento2:
          name: Sandbox BS magento2 tests
          context: integration-tests-sandbox-context
          requires:
            - swissknife/queue_up_workflow

  # This workflow is triggered via the API to run tests against the
  # production environment on browserstack and local chrome.
  production:
    when: << pipeline.parameters.run_production_integration_tests >>
    jobs:
      - swissknife/queue_up_workflow:
          max-wait-time: "1800"
          workflow-name: "^(staging|production|sandbox)$"
      - test-on-chrome:
          name: Prod on local chrome
          context: integration-tests-production-context
      - bs-base:
          name: Prod BS Base tests
          context: integration-tests-production-context
          requires:
            - swissknife/queue_up_workflow
```

Note that the staging and sandbox workflows reuse the same job definitions. The tests are the same and only the target environment changes, so we move the environment-specific test configuration into CircleCI contexts and attach the context that matches the environment being tested.
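Because a context injects its environment variables into the jobs it is attached to, the test code itself can stay environment-agnostic. A minimal sketch, assuming hypothetical variable names `TEST_ENV_NAME` and `TEST_BASE_URL` (the contents of the real contexts aren’t shown here): the job reads its target from the environment and fails fast if no context supplied it.

```bash
#!/usr/bin/env bash
# Hypothetical: the attached context (e.g. integration-tests-staging-context)
# provides TEST_ENV_NAME and TEST_BASE_URL; the job script never hard-codes them.
describe_target() {
  # ${VAR:?message} makes a non-interactive shell exit with an error if VAR is
  # unset or empty, which catches a job that was scheduled without its context.
  local env_name="${TEST_ENV_NAME:?attach an integration-tests-*-context}"
  local base_url="${TEST_BASE_URL:?attach an integration-tests-*-context}"
  echo "Running integration tests against ${env_name} at ${base_url}"
}
```

Switching a job from staging to sandbox is then purely a matter of attaching a different context in the workflow config.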

For compliance reasons, we use Jenkins to manage our deploys and trigger integration tests. It is now very easy for us to trigger integration tests from Jenkins and wait for them to finish. Here is an example of how we do this using the new v2 API:

```bash
#!/bin/bash -x
# Exit immediately if a command exits with a non-zero status.
set -e

if [ "$1" == "" ] || [ "$2" == "" ]; then
  echo "Usage: $0 <cdstaging|staging|sandbox|production> <project-slug>"
  exit 1
fi

ENV=$1
SLUG=$2
PIPELINE_ID=""
WORKFLOW_ID=""
WORKFLOW_STATUS="running"

start_integration_test () {
  # Trigger a pipeline on master that enables only this environment's workflow.
  CREATE_PIPELINE_OUTPUT=$(curl --silent -X POST \
    "https://circleci.com/api/v2/project/${SLUG}/pipeline?circle-token=${CIRCLE_TOKEN}" \
    -H 'Accept: */*' \
    -H 'Content-Type: application/json' \
    -d '{
      "branch": "master",
      "parameters": {
        "run_'"${ENV}"'_integration_tests": true,
        "run_base_tests": false
      }
    }')
  PIPELINE_ID=$(echo "$CREATE_PIPELINE_OUTPUT" | jq -r .id)
  if [ -z "$PIPELINE_ID" ] || [ "$PIPELINE_ID" == "null" ]; then
    echo "Failed to create pipeline: ${CREATE_PIPELINE_OUTPUT}"
    exit 1
  fi
  echo "The created pipeline is ${PIPELINE_ID}"
  # Sleep till circle starts a workflow from this pipeline
  # TODO(roopakv): Change this to a curl loop instead of a sleep
  sleep 20
}

get_workflow_from_pipeline () {
  # List the pipeline's workflows; only one workflow's "when" is true, so take the first.
  GET_PIPELINE_OUTPUT=$(curl --silent -X GET \
    "https://circleci.com/api/v2/pipeline/${PIPELINE_ID}/workflow?circle-token=${CIRCLE_TOKEN}" \
    -H 'Accept: */*' \
    -H 'Content-Type: application/json')
  WORKFLOW_ID=$(echo "$GET_PIPELINE_OUTPUT" | jq -r '.items[0].id')
  if [ -z "$WORKFLOW_ID" ] || [ "$WORKFLOW_ID" == "null" ]; then
    echo "No workflow found for pipeline ${PIPELINE_ID}"
    exit 1
  fi
  echo "The created workflow is ${WORKFLOW_ID}"
  echo "Link to workflow is"
  echo "https://circleci.com/workflow-run/${WORKFLOW_ID}"
}

# Statuses that mean the workflow has not finished yet.
running_statuses=("running" "failing")

wait_for_workflow () {
  while [[ " ${running_statuses[*]} " =~ " ${WORKFLOW_STATUS} " ]]
  do
    echo "Sleeping, will check status in 30s"
    sleep 30
    WORKFLOW_GET_OUTPUT=$(curl --silent -X GET \
      "https://circleci.com/api/v2/workflow/${WORKFLOW_ID}?circle-token=${CIRCLE_TOKEN}" \
      -H 'Accept: */*' \
      -H 'Content-Type: application/json')
    WORKFLOW_STATUS=$(echo "$WORKFLOW_GET_OUTPUT" | jq -r .status)
    echo "The workflow currently has status - ${WORKFLOW_STATUS}."
  done

  if [ "$WORKFLOW_STATUS" == "success" ]; then
    echo "Workflow was successful!"
    exit 0
  else
    echo "Workflow did not succeed. Status was ${WORKFLOW_STATUS}"
    exit 1
  fi
}

# remove noise so set +x
set +x

start_integration_test
get_workflow_from_pipeline
wait_for_workflow
```
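The TODO in start_integration_test points at replacing the fixed 20-second sleep with a loop. One way to do that, sketched here under our own assumptions, is a small hypothetical `retry_until_output` helper that reruns a command until it prints something other than an empty string or `null` (which is what `jq -r` prints for a missing field), with a cap on attempts.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rerun a command until it prints a non-empty value that
# isn't "null", up to max_attempts tries, sleeping interval seconds between tries.
# Prints the value and returns 0 on success; returns 1 if every attempt failed.
retry_until_output() {
  local max_attempts="$1" interval="$2"
  shift 2
  local attempt out
  for (( attempt = 1; attempt <= max_attempts; attempt++ )); do
    out="$("$@")" || out=""
    if [[ -n "$out" && "$out" != "null" ]]; then
      printf '%s\n' "$out"
      return 0
    fi
    if (( attempt < max_attempts )); then
      sleep "$interval"
    fi
  done
  return 1
}
```

In the script, the command passed in would be a function wrapping the curl and jq calls from get_workflow_from_pipeline, for example `WORKFLOW_ID=$(retry_until_output 12 5 fetch_first_workflow_id)`, where `fetch_first_workflow_id` is a hypothetical name. The workflow ID is then available as soon as CircleCI creates the workflow, and the script fails clearly instead of continuing with `null` if it never appears.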

Moving from a single job to this workflow-controlled approach has given us the following benefits:

- Ability to rerun only some of the tests instead of all of them
- Reduced run time
- Environment-specific config lives in CircleCI contexts instead of complex code that handles multiple environments

Conclusion

In summary, as we set out to reduce test run time and make the tests easier to work with, we realized that we had to use different approaches for different types of tests. We split our unit tests by runtime and moved repeated setup work into a prebuilt image. For integration tests, we optimized for remote browsers with limited parallelism and built a mechanism to run the same tests across environments with simple configuration changes. As a result, we also reduced our pipeline complexity, which made it easier for new engineers to understand our testing infrastructure.

Roopak Venkatakrishnan is currently building systems at Bolt. Prior to this, he was at Spoke, Google, and Twitter. Apart from solving complex problems, he has a strong penchant for dev infra & tooling. When he isn’t working, he enjoys a cup of coffee, hiking, and tweeting random things.