Phase 3 - execution environments

On this page

- 1. Nomad clients

- a. Create your cluster with Terraform

- AWS cluster

- GCP cluster

- b. Nomad Autoscaler configuration

- AWS autoscaler IAM/role

- IAM policy for Nomad Autoscaler

- GCP autoscaler service account

- 2. Nomad servers

- a. Nomad gossip encryption key

- b. Nomad mTLS

- c. Nomad Autoscaler

- AWS

- GCP

- d. Helm upgrade

- 3. Nomad clients validation

- GitHub cloud

- GitHub Enterprise

- 3. VM service

- AWS

- Set up security group

- Set up authentication

- GCP

- VM service validation

- 4. Runner

- Overview

- Next steps

Before you begin with the CircleCI server v4.2 execution environment installation phase, ensure you have run through Phase 1 – Prerequisites and Phase 2 - Core Services Installation.

In the following sections, replace any sections indicated by < > with your details.

1. Nomad clients

Nomad is a workload orchestration tool that CircleCI uses to schedule (through Nomad server) and run (through Nomad clients) CircleCI jobs.

Nomad clients are installed outside of the Kubernetes cluster, while their control plane (Nomad Server) is installed within the cluster. Communication between your Nomad Clients and the Nomad control plane is secured with mTLS. The mTLS certificate, private key, and certificate authority will be output after you complete installation of the Nomad Clients.

a. Create your cluster with Terraform

CircleCI curates Terraform modules to help install Nomad clients in your chosen cloud provider. You can browse the modules in our public repository, including example Terraform config files for both AWS and GCP.

AWS cluster

You need some information about your cluster and server installation to populate the required variables for the Terraform module. A full example, as well as a full list of variables, can be found in the example AWS Terraform configuration.

- Server endpoint - The domain name of the CircleCI application.

- Subnet ID (subnet), VPC ID (vpc_id), and DNS server (dns_server) of your cluster. Run the following commands to get the cluster VPC ID (vpcId), and subnets (subnetIds):

    # Fetch VPC ID
    aws eks describe-cluster --name=<cluster-name> --query "cluster.resourcesVpcConfig.vpcId" --region=<region> --output text | xargs

    # Fetch Subnet IDs
    aws eks describe-cluster --name=<cluster-name> --query "cluster.resourcesVpcConfig.subnetIds" --region=<region> --output text | xargs

This returns something similar to the following:

    # VPC Id
    vpc-02fdfff4ca

    # Subnet Ids
    subnet-08922063f12541f93 subnet-03b94b6fb1e5c2a1d subnet-0540dd7b2b2ddb57e subnet-01833e1fa70aa4488

Then, using the VPC ID you just found, run the following command to get the CIDR block for your cluster. For AWS, the DNS server is the third IP in your CIDR block (CidrBlock), for example your CIDR block might be 10.100.0.0/16, so the third IP would be 10.100.0.2.

    aws ec2 describe-vpcs --filters Name=vpc-id,Values=<vpc-id> --query "Vpcs[].CidrBlock" --region=<region> --output text | xargs

This returns something like the following:

    192.168.0.0/16
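The "third IP" rule above can be sketched as a small shell helper. This is only an illustration: the function name dns_server_from_cidr is hypothetical, and it assumes the address before the slash is the network base address of the VPC CIDR block, so that the DNS server is the base address plus two.

```shell
# Hypothetical helper: derive the DNS server address (network base + 2)
# from a VPC CIDR block such as 10.100.0.0/16.
dns_server_from_cidr() {
  base=${1%/*}                 # drop the "/prefix" part
  old_ifs=$IFS
  IFS=.
  set -- $base                 # split the dotted quad into $1..$4
  IFS=$old_ifs
  # Pack the four octets into one integer and add two
  n=$(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 + 2 ))
  printf '%d.%d.%d.%d\n' \
    $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) \
    $(( (n >> 8) & 255 ))  $(( n & 255 ))
}

dns_server_from_cidr "10.100.0.0/16"    # prints 10.100.0.2
dns_server_from_cidr "192.168.0.0/16"   # prints 192.168.0.2
```

The resulting address is what you would supply as dns_server in the Terraform variables.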

Once you have filled in the appropriate information, you can deploy your Nomad clients by running the following commands:

    terraform init
    terraform plan
    terraform apply

After Terraform is done spinning up the Nomad client(s), it outputs the certificates and keys needed for configuring the Nomad control plane in CircleCI server. Copy them somewhere safe. The apply process usually only takes a minute.

GCP cluster

You need the following information:

- The Domain name of the CircleCI application

- The GCP Project you want to run Nomad clients in

- The GCP Zone you want to run Nomad clients in

- The GCP Region you want to run Nomad clients in

- The GCP Network you want to run Nomad clients in

- The GCP Subnetwork you want to run Nomad clients in

A full example, as well as a full list of variables, can be found in the example GCP Terraform configuration.

Once you have filled in the appropriate information, you can deploy your Nomad clients by running the following commands:

    terraform init
    terraform plan
    terraform apply

After Terraform is done spinning up the Nomad client(s), it outputs the certificates and key needed for configuring the Nomad control plane in CircleCI server. Copy them somewhere safe.

b. Nomad Autoscaler configuration

Nomad can automatically scale up or down your Nomad clients, provided your clients are managed by a cloud provider’s autoscaling resource. With Nomad Autoscaler, you need to provide permission for the utility to manage your autoscaling resource and specify where it is located. CircleCI’s Nomad Terraform module can provision the permissions resources, or it can be done manually.

AWS autoscaler IAM/role

Create an IAM user or role and policy for Nomad Autoscaler. You may take one of the following approaches:

- The CircleCI Nomad module creates an IAM user and outputs the keys if you set variable nomad_auto_scaler = true. You may reference the example in the link for more details. If you have already created the clients, you can update the variable and run terraform apply. The created user’s access and secret key will be available in Terraform’s output.

- Create a Nomad Autoscaler IAM user manually with the IAM policy below. Then, generate an access and secret key for this user.

- You may create a Role for Service Accounts for Nomad Autoscaler and attach the IAM policy below.

When using access and secret keys, you have two options for configuration:

IAM policy for Nomad Autoscaler

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "VisualEditor0",
          "Effect": "Allow",
          "Action": [
            "autoscaling:CreateOrUpdateTags",
            "autoscaling:UpdateAutoScalingGroup",
            "autoscaling:TerminateInstanceInAutoScalingGroup"
          ],
          "Resource": "<<Your Autoscaling Group ARN>>"
        },
        {
          "Sid": "VisualEditor1",
          "Effect": "Allow",
          "Action": [
            "autoscaling:DescribeScalingActivities",
            "autoscaling:DescribeAutoScalingGroups"
          ],
          "Resource": "*"
        }
      ]
    }

GCP autoscaler service account

Create a service account for Nomad Autoscaler. You may take one of the following approaches:

2. Nomad servers

Now that you have successfully deployed your Nomad clients and have the permission resources, you can configure the Nomad Servers.

a. Nomad gossip encryption key

Nomad requires a key to encrypt communications. This key must be exactly 32 bytes long. CircleCI will not be able to recover the values if lost. Depending on how you prefer to manage Kubernetes Secrets, there are two options:
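Whichever way the secret is stored, a key of the right length has to be generated first. The following is a minimal sketch, assuming the key is supplied as a plain 32-character string (32 ASCII bytes); if your chart version expects a different encoding, adjust accordingly. The function name generate_gossip_key is hypothetical.

```shell
# Hypothetical helper: produce a random 32-character hexadecimal string.
# 16 random bytes rendered as hex give exactly 32 ASCII characters.
generate_gossip_key() {
  head -c 16 /dev/urandom | od -An -v -tx1 | tr -d ' \n'
}

key=$(generate_gossip_key)
echo "generated key length: ${#key}"
```

Store the generated value somewhere safe before using it, since it cannot be recovered later.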

b. Nomad mTLS

The CACertificate, certificate and privateKey can be found in the output of the Terraform module. They must be base64 encoded.
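One way to produce the base64 values is sketched below. The helper name b64_one_line and the file names in the comments are hypothetical; the sketch assumes you have saved each Terraform output to its own file. Newlines are stripped so the result fits on a single line in values.yaml.

```shell
# Hypothetical helper: base64-encode a file as a single line.
b64_one_line() {
  base64 < "$1" | tr -d '\n'
}

# Demonstration with a throwaway file
tmp=$(mktemp)
printf 'example' > "$tmp"
b64_one_line "$tmp"; echo      # prints ZXhhbXBsZQ==
rm -f "$tmp"

# With real files (names are assumptions), usage would look like:
#   b64_one_line nomad-server-cert.pem
#   b64_one_line nomad-server-key.pem
#   b64_one_line nomad-ca.pem
```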

    nomad:
      server:
        ...
        rpc:
          mTLS:
            enabled: true
            certificate: "<base64-encoded-certificate>"
            privateKey: "<base64-encoded-private-key>"
            CACertificate: "<base64-encoded-ca-certificate>"

c. Nomad Autoscaler

If you have enabled Nomad Autoscaler, also include the following section under nomad:

AWS

You created these values in the Nomad Autoscaler Configuration section.

    nomad:
      ...
      auto_scaler:
        enabled: true
        scaling:
          max: <max-node-limit>
          min: <min-node-limit>
        aws:
          enabled: true
          region: "<region>"
          autoScalingGroup: "<asg-name>"
          accessKey: "<access-key>"
          secretKey: "<secret-key>"
          # or
          irsaRole: "<role-arn>"

GCP

You created these values in the Nomad Autoscaler Configuration section.

    nomad:
      ...
      auto_scaler:
        enabled: true
        scaling:
          max: <max-node-limit>
          min: <min-node-limit>
        gcp:
          enabled: true
          project_id: "<project-id>"
          mig_name: "<instance-group-name>"
          region: "<region>"
          # or
          zone: "<zone>"
          workloadIdentity: "<service-account-email>"
          # or
          service_account: "<service-account-json>"

d. Helm upgrade

Apply the changes made to your values.yaml file:

    namespace=<your-namespace>
    helm upgrade circleci-server oci://cciserver.azurecr.io/circleci-server -n $namespace --version 4.2.7 -f <path-to-values.yaml>

3. Nomad clients validation

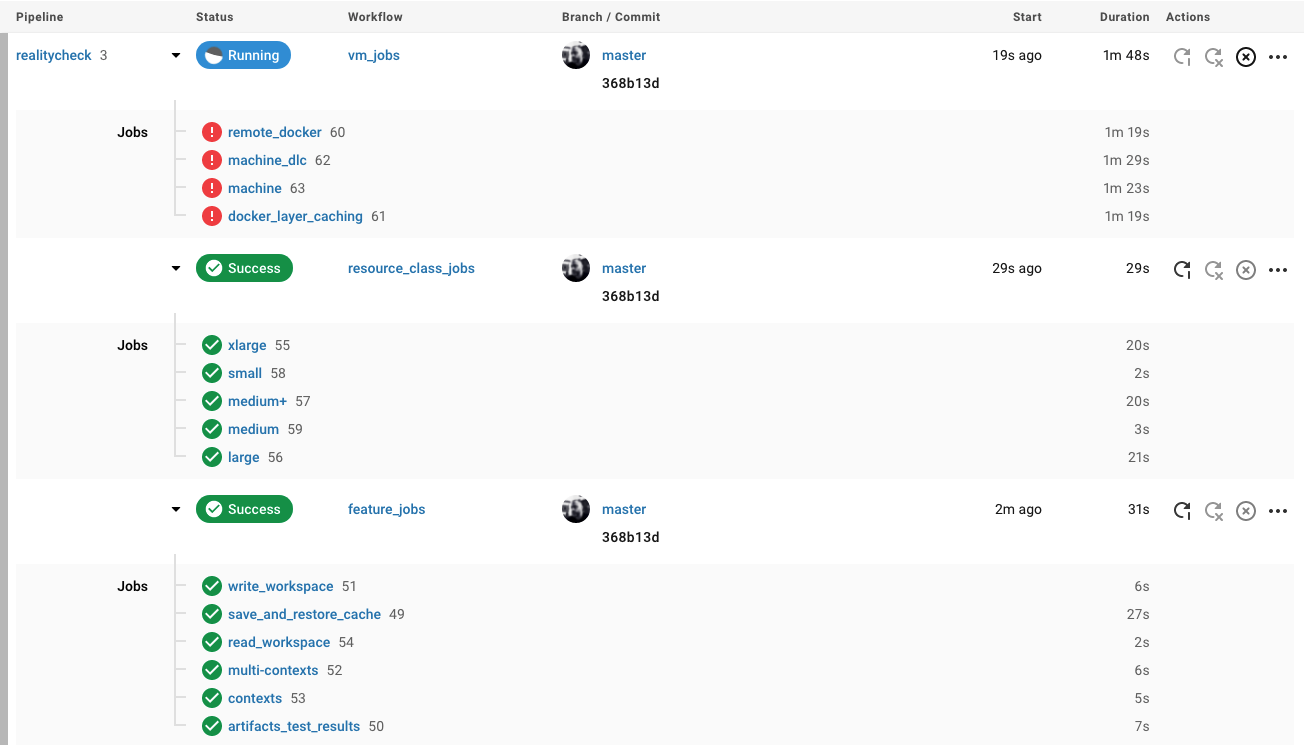

CircleCI has created a project called Reality Check which allows you to test your server installation. We are going to follow the project so we can verify that the system is working as expected. As you continue through the next phase, sections of Reality Check will move from red (fail) to green (pass).

Before running Reality Check, check if the Nomad servers can communicate with the Nomad clients by executing the below command.

    kubectl -n <namespace> exec -it $(kubectl -n <namespace> get pods -l app=nomad-server -o name | tail -1) -- nomad node status

You should be able to see output like this:

    ID        DC       Name              Class        Drain  Eligibility  Status
    132ed55b  default  ip-192-168-44-29  linux-64bit  false  eligible     ready

To run Reality Check, you need to clone the repository. Depending on your GitHub setup, you can use one of the following commands:

GitHub cloud

    git clone https://github.com/circleci/realitycheck.git

GitHub Enterprise

    git clone https://github.com/circleci/realitycheck.git
    # The following commands must be run from inside the cloned repository
    cd realitycheck
    git remote set-url origin <YOUR_GH_REPO_URL>
    git push

Once you have successfully cloned the repository, you can follow it from within your CircleCI server installation. You need to set the following variables. For full instructions see the repository readme.

| Name | Value |
|---|---|
| CIRCLE_HOSTNAME | <YOUR_CIRCLECI_INSTALLATION_URL> |
| CIRCLE_TOKEN | <YOUR_CIRCLECI_API_TOKEN> |
| CIRCLE_CLOUD_PROVIDER | < |

| Name | Environmental Variable Key | Environmental Variable Value |
|---|---|---|
| org-global | CONTEXT_END_TO_END_TEST_VAR | Leave blank |
| individual-local | MULTI_CONTEXT_END_TO_END_VAR | Leave blank |

Once you have configured the environmental variables and contexts, rerun the Reality Check tests. You should see the features and resource jobs complete successfully. Your test results should look something like the following:

3. VM service

VM service is used to configure virtual machines for jobs that run in Linux VM, Windows and Arm VM execution environments, and those that are configured to use remote Docker. You can configure a number of options for VM service, such as scaling rules. VM service is unique to AWS and GCP installations because it relies on specific features of these cloud providers.

Once you have completed the server installation process you can further configure VM service, including building and specifying a Windows image to give developers access to the Windows execution environment, specifying an alternative Linux machine image, and specifying a number of preallocated instances to remain spun up at all times. For more information, see the Manage Virtual Machines with VM Service page.

AWS

Set up security group

- Get the information needed to create security groups

The following command returns your VPC ID (vpcId) and CIDR Block (serviceIpv4Cidr) which you need throughout this section:

    # Fetch VPC Id
    aws eks describe-cluster --name=<cluster-name> --query "cluster.resourcesVpcConfig.vpcId" --region=<region> --output text | xargs

    # Fetch CIDR Block
    aws eks describe-cluster --name=<cluster-name> --query "cluster.kubernetesNetworkConfig.serviceIpv4Cidr" --region=<region> --output text | xargs

- Create a security group

Run the following commands to create a security group for VM service:

    aws ec2 create-security-group --vpc-id "<VPC_ID>" --description "CircleCI VM Service security group" --group-name "circleci-vm-service-sg"

This outputs a GroupID to be used in the next steps:

    { "GroupId": "<VM_SECURITY_GROUP_ID>" }

- Apply security group Nomad

Use the security group you just created, and your CIDR block values, to apply the security group. This allows VM service to communicate with created EC2 instances on port 22.

    aws ec2 authorize-security-group-ingress --group-id "<VM_SECURITY_GROUP_ID>" --protocol tcp --port 22 --cidr "<SERVICE_IPV4_CIDR>"

For each subnet used by the Nomad clients, find the subnet CIDR block and add two rules with the following commands.

    # find CIDR block
    aws ec2 describe-subnets --subnet-ids=<nomad-subnet-id> --query "Subnets[*].[SubnetId, CidrBlock]" --region=<region> | xargs

    # add a security group allowing docker access from nomad clients, to VM instances
    aws ec2 authorize-security-group-ingress --group-id "<VM_SECURITY_GROUP_ID>" --protocol tcp --port 2376 --cidr "<SUBNET_IPV4_CIDR>"

    # add a security group allowing SSH access from nomad clients, to VM instances
    aws ec2 authorize-security-group-ingress --group-id "<VM_SECURITY_GROUP_ID>" --protocol tcp --port 22 --cidr "<SUBNET_IPV4_CIDR>"

- Apply the security group for SSH (If using public IPs for machines)

If using public IPs for VM service instances, run the following command to apply the security group rules so users can SSH into their jobs:

    aws ec2 authorize-security-group-ingress --group-id "<VM_SECURITY_GROUP_ID>" --protocol tcp --port 54782 --cidr "0.0.0.0/0"
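When the Nomad clients span several subnets, the per-subnet rules (ports 2376 and 22) have to be repeated for each subnet CIDR block. The sketch below only prints the commands so they can be reviewed before being run; the function name print_vm_sg_rules, the security group ID, and the CIDR values are made-up examples.

```shell
# Hypothetical helper: print (do not execute) the Docker (2376) and SSH (22)
# ingress rules for every Nomad client subnet CIDR passed as an argument.
print_vm_sg_rules() {
  sg_id=$1
  shift
  for cidr in "$@"; do
    for port in 2376 22; do
      echo "aws ec2 authorize-security-group-ingress --group-id \"$sg_id\" --protocol tcp --port $port --cidr \"$cidr\""
    done
  done
}

# Example values only; substitute your own group ID and subnet CIDR blocks
print_vm_sg_rules "sg-0123456789abcdef0" "192.168.0.0/19" "192.168.32.0/19"
```

Printing first keeps the step reviewable; once the output looks right, the lines can be pasted into a shell.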

Set up authentication

Authenticate CircleCI with your cloud provider in one of two ways:

- IAM Roles for Service Accounts (IRSA) - recommended

- IAM access keys

GCP

You need additional information about your cluster to complete the next section. Run the following command:

    gcloud container clusters describe

This command returns something like the following, which includes network, region and other details that you need to complete the next section:

    addonsConfig:
      gcePersistentDiskCsiDriverConfig:
        enabled: true
      kubernetesDashboard:
        disabled: true
      networkPolicyConfig:
        disabled: true
    clusterIpv4Cidr: 10.100.0.0/14
    createTime: '2021-08-20T21:46:18+00:00'
    currentMasterVersion: 1.20.8-gke.900
    currentNodeCount: 3
    currentNodeVersion: 1.20.8-gke.900
    databaseEncryption:
    …

- Create firewall rules

External VMs need the networking rules described in Hardening your Cluster.

- Create user

We recommend you create a unique service account used exclusively by VM Service. The Compute Instance Admin (Beta) role is broad enough to allow VM Service to operate. If you wish to make permissions more granular, you can use the Compute Instance Admin (beta) role documentation as reference.

    gcloud iam service-accounts create circleci-server-vm --display-name "circleci-server-vm service account"

If you are deploying CircleCI server in a shared VPC, you should create this user in the project in which you intend to run your VM jobs.

- Get the service account email address

    gcloud iam service-accounts list --filter="displayName:circleci-server-vm service account" --format 'value(email)'

- Apply role to service account

Apply the Compute Instance Admin (beta) role to the service account:

    gcloud projects add-iam-policy-binding <YOUR_PROJECT_ID> --member serviceAccount:<YOUR_SERVICE_ACCOUNT_EMAIL> --role roles/compute.instanceAdmin --condition=None

And

    gcloud projects add-iam-policy-binding <YOUR_PROJECT_ID> --member serviceAccount:<YOUR_SERVICE_ACCOUNT_EMAIL> --role roles/iam.serviceAccountUser --condition=None

- Enable Workload Identity for Service Account or get JSON key file

Choose one of the following options, depending on whether you are using Workload Identity.

- Configure CircleCI server

When using service account keys for configuring access for the VM service, there are two options.
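The two role bindings from the "Apply role to service account" step can be assembled as a dry run, which helps avoid typos in the service account email. This is a sketch: the function names and the project ID are hypothetical, and it relies on the standard pattern for user-managed service account emails, name@project-id.iam.gserviceaccount.com. The email reported by gcloud iam service-accounts list remains the authoritative value.

```shell
# Hypothetical helper: build the email of a user-managed service account.
sa_email() {
  printf '%s@%s.iam.gserviceaccount.com\n' "$1" "$2"
}

# Hypothetical helper: print (do not execute) the two role bindings
# that the VM service account needs.
print_role_bindings() {
  project=$1
  email=$2
  for role in roles/compute.instanceAdmin roles/iam.serviceAccountUser; do
    echo "gcloud projects add-iam-policy-binding $project --member serviceAccount:$email --role $role --condition=None"
  done
}

# Example values only; "my-project" is a made-up project ID
email=$(sa_email "circleci-server-vm" "my-project")
print_role_bindings "my-project" "$email"
```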

VM service validation

Apply the changes made to your values.yaml file.

    namespace=<your-namespace>
    helm upgrade circleci-server oci://cciserver.azurecr.io/circleci-server -n $namespace --version 4.2.7 -f <path-to-values.yaml>

Once you have configured and deployed CircleCI server, you should validate that VM Service is operational. You can rerun the Reality Check project within your CircleCI installation and you should see the VM Service Jobs complete. At this point, all tests should pass.
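Because this guide uses < > placeholders throughout, it can be useful to confirm that none remain in values.yaml before running helm upgrade. The check below is a rough sketch: the function name find_placeholders is hypothetical, and the pattern only catches simple placeholders such as <region> or <YOUR_PROJECT_ID>, so it may miss unusual ones or flag legitimate angle-bracketed text.

```shell
# Hypothetical helper: list lines that still contain a <placeholder>.
# Exits non-zero when no placeholder is found (grep semantics).
find_placeholders() {
  grep -nE '<[A-Za-z][A-Za-z0-9_.-]*>' "$1"
}

# Demonstration with a throwaway file standing in for values.yaml
tmp=$(mktemp)
printf 'region: "<region>"\nenabled: true\n' > "$tmp"
if find_placeholders "$tmp"; then
  echo "placeholders remain; fill them in before running helm upgrade"
else
  echo "no placeholders found"
fi
rm -f "$tmp"
```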

4. Runner

Overview

CircleCI runner does not require any additional server configuration. CircleCI server ships ready to work with runner. However, you need to create a runner and configure the runner agent to be aware of your server installation. For complete instructions for setting up runner, see the runner documentation.

Runner requires a namespace per organization. CircleCI server can have many organizations. If your company has multiple organizations within your CircleCI installation, you need to set up a runner namespace for each organization within your server installation.