Highly performant apps that load fast and interact smoothly have become a necessity. Users expect these qualities; they are no longer just a nice-to-have. One way to keep interactions smooth is to make performance validation part of your release flow.

Google recently released updates to their Jetpack benchmarking libraries. One notable addition is the Macrobenchmark library, which lets you test your app’s performance in areas like startup and scroll jank. In this tutorial you will get started with the Jetpack benchmarking libraries and learn how to run them as part of a CI/CD flow.

Prerequisites

You will need a few things to get the most from this tutorial:

- Experience with Android development and testing, including instrumentation testing

- Experience with Gradle

- A free CircleCI account

- A Firebase and Google Cloud platform account

- Android Studio Arctic Fox

Note: The version I used to write this tutorial is 2020.3.1 Beta 4. Macrobenchmark is not supported on the current stable version of Android Studio at the time of writing (July 2021).

About the project

The project is based on an earlier testing sample I created for a blog post on testing Android apps in a CI/CD pipeline.

I have expanded the sample project to include a benchmark job as part of a CI/CD build. This new job runs app startup benchmarks using the Jetpack Macrobenchmark library.

Jetpack benchmarking

Android Jetpack offers two types of benchmarking: Microbenchmark and Macrobenchmark. Microbenchmark, which has been around since 2019, lets you measure the performance of application code directly (think caching or similar operations that might take a while to run).

Macrobenchmark is the new addition to Jetpack. It allows you to measure your app’s performance as a whole on easy-to-notice areas like app startup and scrolling. The sample application uses these macrobenchmark tests for measuring app startup.

Both benchmarking libraries and approaches work with the familiar Android Instrumentation framework that runs on connected devices and emulators.

Set up the library

The library setup is documented well on the official Jetpack site - Macrobenchmark Setup. Note: we will not cover all steps in fine detail because they might change in future preview releases. Instead, here is an overview of the procedure:

- Create a new test module named macrobenchmark. In Android Studio, create an Android library module, then change its build.gradle to use com.android.test as the plugin. The new module needs its minimum SDK level set to API 29: Android 10 (Q).

- Make several modifications to the new module’s build.gradle file. Change test implementations to implementation, point to the app module you want to test, and make the release build type debuggable.

- Add the profileable tag to your app’s AndroidManifest.

- Specify the local release signing config. You can use the existing debug config.

Please refer to the guide in the official Macrobenchmark library documentation for complete step-by-step instructions: https://developer.android.com/studio/profile/macrobenchmark#setup.

Writing and executing Macrobenchmark tests

The Macrobenchmark library introduces a few new JUnit rules and metrics.

We are using the StartupTimingMetric taken from the performance-samples project on GitHub.

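```kotlin
import android.content.Intent
import androidx.benchmark.macro.CompilationMode
import androidx.benchmark.macro.StartupMode
import androidx.benchmark.macro.StartupTimingMetric
import androidx.benchmark.macro.junit4.MacrobenchmarkRule
import androidx.test.filters.LargeTest
import org.junit.Rule
import org.junit.Test
import org.junit.runner.RunWith
import org.junit.runners.Parameterized

const val TARGET_PACKAGE = "com.circleci.samples.todoapp"

// Helper that launches the target app and records its startup timing.
fun MacrobenchmarkRule.measureStartup(
    profileCompiled: Boolean,
    startupMode: StartupMode,
    iterations: Int = 3,
    setupIntent: Intent.() -> Unit = {}
) = measureRepeated(
    packageName = TARGET_PACKAGE,
    metrics = listOf(StartupTimingMetric()),
    compilationMode = if (profileCompiled) {
        CompilationMode.SpeedProfile(warmupIterations = 3)
    } else {
        CompilationMode.None
    },
    iterations = iterations,
    startupMode = startupMode
) {
    // Start each iteration from the home screen, then launch the app.
    pressHome()
    val intent = Intent()
    intent.setPackage(TARGET_PACKAGE)
    setupIntent(intent)
    startActivityAndWait(intent)
}

// Runs once per startup mode supplied by parameters() below.
@LargeTest
@RunWith(Parameterized::class)
class StartupBenchmarks(private val startupMode: StartupMode) {

    @get:Rule
    val benchmarkRule = MacrobenchmarkRule()

    @Test
    fun startupMultiple() = benchmarkRule.measureStartup(
        profileCompiled = false,
        startupMode = startupMode,
        iterations = 5
    ) {
        action = "com.circleci.samples.target.STARTUP_ACTIVITY"
    }

    companion object {
        @Parameterized.Parameters(name = "mode={0}")
        @JvmStatic
        fun parameters(): List<Array<Any>> {
            return listOf(StartupMode.COLD, StartupMode.WARM, StartupMode.HOT)
                .map { arrayOf(it) }
        }
    }
}
```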

The code above is parameterized over three startup modes: cold, warm, and hot. The mode describes how recently the app has been run and whether it is still being kept in memory. Each mode is passed to the test, which measures it using the MacrobenchmarkRule.

Running benchmarks in a CI/CD pipeline

You can run these samples in Android Studio, which gives you a nice printout of your app’s performance metrics, but won’t do anything to ensure your app is always performant. To do that you need to integrate benchmarks in your CI/CD process.

The steps required are:

- Build the release variants of app and Macrobenchmark modules

- Run the tests on Firebase Test Lab (FTL) or similar tool

- Download the benchmark results

- Store benchmarks as artifacts

- Process the benchmark results to get timings data

- Pass or fail the build based on the results

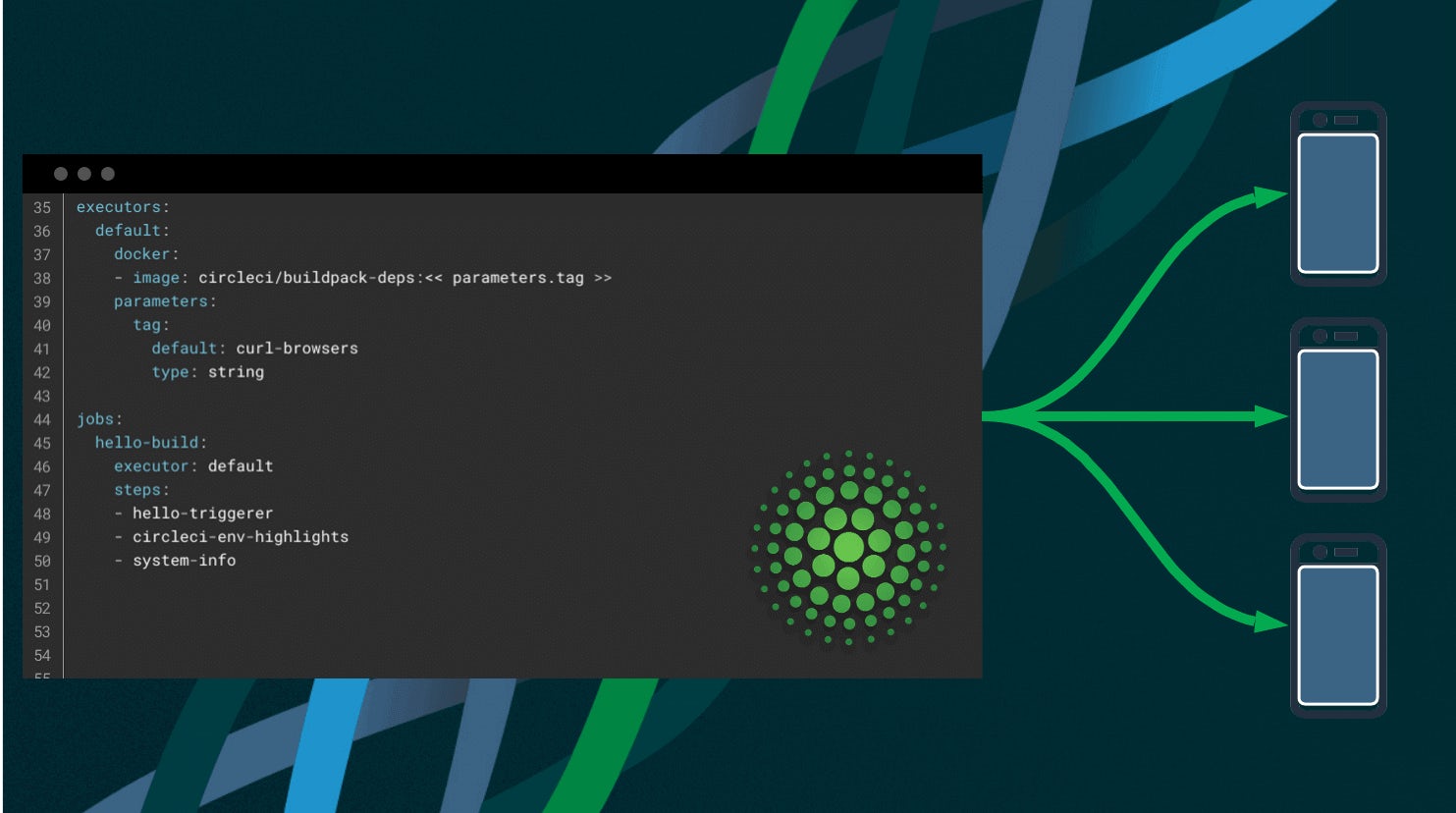

I created a new benchmarks-ftl job to run my tests:

```yaml
orbs:
  android: circleci/android@1.0.3
  gcp-cli: circleci/gcp-cli@2.2.0

...

jobs:
  ...
  benchmarks-ftl:
    executor:
      name: android/android
      sdk-version: "30"
      variant: node
    steps:
      - checkout
      - android/restore-gradle-cache
      - android/restore-build-cache
      - run:
          name: Build app and test app
          command: ./gradlew app:assembleRelease macrobenchmark:assemble
      - gcp-cli/initialize:
          gcloud-service-key: GCP_SA_KEY
          google-project-id: GCP_PROJECT_ID
      - run:
          name: run on FTL
          command: |
            gcloud firebase test android run \
              --type instrumentation \
              --app app/build/outputs/apk/release/app-release.apk \
              --test macrobenchmark/build/outputs/apk/release/macrobenchmark-release.apk \
              --device model=flame,version=30,locale=en,orientation=portrait \
              --directories-to-pull /sdcard/Download \
              --results-bucket gs://android-sample-benchmarks \
              --results-dir macrobenchmark \
              --environment-variables clearPackageData=true,additionalTestOutputDir=/sdcard/Download,no-isolated-storage=true
      - run:
          name: Download benchmark data
          command: |
            mkdir ~/benchmarks
            gsutil cp -r 'gs://android-sample-benchmarks/macrobenchmark/**/artifacts/sdcard/Download/*' ~/benchmarks
            gsutil rm -r gs://android-sample-benchmarks/macrobenchmark
      - store_artifacts:
          path: ~/benchmarks
      - run:
          name: Evaluate benchmark results
          command: node scripts/eval_startup_benchmark_output.js
```

This snippet represents a job that runs the macrobenchmark tests on a real device in Firebase Test Lab. We are using the Docker executor with the image provided by the Android orb, which has Android SDK 30 installed and includes Node.js. We will use Node.js for the script that evaluates our results.

After building the app and macrobenchmark module APKs, we need to initialize the Google Cloud CLI using the orb. This is where we pass the names of the CircleCI environment variables that hold the Google Cloud service key and project ID. Then, we run the tests in Firebase Test Lab:

```yaml
- run:
    name: run on FTL
    command: |
      gcloud firebase test android run \
        --type instrumentation \
        --app app/build/outputs/apk/release/app-release.apk \
        --test macrobenchmark/build/outputs/apk/release/macrobenchmark-release.apk \
        --device model=flame,version=30,locale=en,orientation=portrait \
        --directories-to-pull /sdcard/Download \
        --results-bucket gs://android-sample-benchmarks \
        --results-dir macrobenchmark \
        --environment-variables clearPackageData=true,additionalTestOutputDir=/sdcard/Download,no-isolated-storage=true
```

This uploads the APKs to Firebase, specifies the device (Pixel 4 in our case), provides environment variables for the test, and specifies the Cloud Storage bucket to store the results in. We always store them in the macrobenchmark directory for easier fetching. We do not need to install the gcloud tool used to run the tests; it comes bundled with the CircleCI Android Docker image.

Once the job terminates we need to download the benchmark data using the gsutil tool that is bundled with the GCP CLI tool:

```yaml
- run:
    name: Download benchmark data
    command: |
      mkdir ~/benchmarks
      gsutil cp -r 'gs://android-sample-benchmarks/macrobenchmark/**/artifacts/sdcard/Download/*' ~/benchmarks
      gsutil rm -r gs://android-sample-benchmarks/macrobenchmark
- store_artifacts:
    path: ~/benchmarks
```

This creates a benchmarks directory and copies the benchmarks to it from cloud storage. After copying the files we are also removing the macrobenchmark directory in our storage bucket to avoid cluttering it with prior trace files.

Alternatively, you could retain the files in Google Cloud Storage by giving each run its own directory, with a name constructed from the job ID, for example.
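As a rough sketch of that idea, the helper below builds a per-job results directory from CircleCI's built-in CIRCLE_JOB and CIRCLE_BUILD_NUM environment variables. The function name and the directory layout are hypothetical, chosen only for illustration.

```javascript
// Hypothetical helper: derive a unique results directory for each CI job.
// CIRCLE_JOB and CIRCLE_BUILD_NUM are set by CircleCI; the fallbacks and the
// "macrobenchmark/<job>-<number>" layout are assumptions for this sketch.
function resultsDirFor(env) {
  const job = env.CIRCLE_JOB || 'local';
  const buildNum = env.CIRCLE_BUILD_NUM || '0';
  return `macrobenchmark/${job}-${buildNum}`;
}

console.log(resultsDirFor(process.env));
```

The resulting value would be passed to --results-dir, and the gsutil download path would need to point at the same directory. With unique directories the gsutil rm step is no longer needed.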

Evaluating benchmark results

The benchmark test command will terminate successfully if the benchmarks have completed. It won’t give you any indication of how well they actually performed, though. For that you will need to analyze the benchmark results and decide for yourself whether the test has failed or passed.

The results are exported in a JSON file containing the minimum, maximum, and median timings of each test. I wrote a short Node.js script comparing these benchmarks that fails if any of them runs outside my expected timings. Node.js is well suited for working with JSON files and is readily available on CircleCI machine images.

Running the script is just a single command:

```yaml
- run:
    name: Evaluate benchmark results
    command: node scripts/eval_startup_benchmark_output.js
```

The script itself reads the JSON file and compares the median of each startup mode against its threshold:

```javascript
// Benchmark output downloaded from Firebase Test Lab in the previous step
const benchmarkData = require('/home/circleci/benchmarks/com.circleci.samples.todoapp.macrobenchmark-benchmarkData.json')

// Replace the placeholders with your own thresholds, in milliseconds
const COLD_STARTUP_MEDIAN_THRESHOLD_MILIS = YOUR_COLD_THRESHOLD
const WARM_STARTUP_MEDIAN_THRESHOLD_MILIS = YOUR_WARM_THRESHOLD
const HOT_STARTUP_MEDIAN_THRESHOLD_MILIS = YOUR_HOT_THRESHOLD

// Pick the startup timings recorded for each startup mode
const coldMetrics = benchmarkData.benchmarks.find(element => element.params.mode === "COLD").metrics.startupMs
const warmMetrics = benchmarkData.benchmarks.find(element => element.params.mode === "WARM").metrics.startupMs
const hotMetrics = benchmarkData.benchmarks.find(element => element.params.mode === "HOT").metrics.startupMs

// Exit status: stays 0 unless at least one median is over its threshold
let err = 0

let coldMsg = `Cold metrics median time - ${coldMetrics.median}ms `
let warmMsg = `Warm metrics median time - ${warmMetrics.median}ms `
let hotMsg = `Hot metrics median time - ${hotMetrics.median}ms `

if (coldMetrics.median > COLD_STARTUP_MEDIAN_THRESHOLD_MILIS) {
    err = 1
    console.error(`${coldMsg} ❌ - OVER THRESHOLD ${COLD_STARTUP_MEDIAN_THRESHOLD_MILIS}ms`)
} else {
    console.log(`${coldMsg} ✅`)
}

if (warmMetrics.median > WARM_STARTUP_MEDIAN_THRESHOLD_MILIS) {
    err = 1
    console.error(`${warmMsg} ❌ - OVER THRESHOLD ${WARM_STARTUP_MEDIAN_THRESHOLD_MILIS}ms`)
} else {
    console.log(`${warmMsg} ✅`)
}

if (hotMetrics.median > HOT_STARTUP_MEDIAN_THRESHOLD_MILIS) {
    err = 1
    console.error(`${hotMsg} ❌ - OVER THRESHOLD ${HOT_STARTUP_MEDIAN_THRESHOLD_MILIS}ms`)
} else {
    console.log(`${hotMsg} ✅`)
}

// A non-zero exit code fails the CI step
process.exit(err)
```

To establish a threshold for timings, I based my expected timings on prior benchmark run results. If we introduce some code that slows down the startup, like a lengthy network call, the measured startup time would exceed the threshold and fail the build.
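One possible way to turn prior results into a threshold is to take the median of earlier median timings and add some headroom. This is only a sketch: the function names, the sample timings, and the 10 percent tolerance are all assumptions, not values from the sample project.

```javascript
// Median of a list of numbers (average of the two middle values if even).
function median(values) {
  if (values.length === 0) throw new Error('need at least one value');
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Hypothetical rule: threshold = median of prior medians plus a tolerance,
// given as a whole-number percentage and rounded up to the next millisecond.
function thresholdFrom(priorMediansMs, tolerancePercent = 10) {
  return Math.ceil((median(priorMediansMs) * (100 + tolerancePercent)) / 100);
}

// Made-up cold startup medians from five earlier runs, in milliseconds.
const priorColdMedians = [400, 420, 410, 430, 405];
console.log(`Cold threshold: ${thresholdFrom(priorColdMedians)}ms`);
```

A wider tolerance reduces false failures from noisy runs at the cost of letting small regressions through.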

To fail the build, the script calls process.exit(err). When any threshold is exceeded, err is 1, so the script returns a non-zero status code and the benchmark evaluation step fails.
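The same pass/fail logic can also be written as a function that returns the exit code, which makes it easier to try out locally and treats a missing result as a failure. This is a sketch that assumes the JSON shape the script relies on (benchmarks[].params.mode and metrics.startupMs.median); the sample data and threshold values are made up.

```javascript
// Returns 0 when every mode is within its threshold, 1 otherwise.
// `thresholds` maps a startup mode name to its limit in milliseconds.
function evaluate(benchmarkData, thresholds) {
  let exitCode = 0;
  for (const [mode, limitMs] of Object.entries(thresholds)) {
    const entry = benchmarkData.benchmarks.find(b => b.params.mode === mode);
    if (!entry) {
      // A missing result should not silently pass the build.
      console.error(`${mode}: no benchmark result found`);
      exitCode = 1;
      continue;
    }
    const medianMs = entry.metrics.startupMs.median;
    if (medianMs > limitMs) {
      console.error(`${mode}: median ${medianMs}ms is over the ${limitMs}ms threshold`);
      exitCode = 1;
    } else {
      console.log(`${mode}: median ${medianMs}ms is within the ${limitMs}ms threshold`);
    }
  }
  return exitCode;
}

// Hypothetical data in the assumed shape, for illustration only.
const sampleData = {
  benchmarks: [
    { params: { mode: 'COLD' }, metrics: { startupMs: { median: 450 } } },
    { params: { mode: 'WARM' }, metrics: { startupMs: { median: 250 } } },
    { params: { mode: 'HOT' }, metrics: { startupMs: { median: 80 } } },
  ],
};
const sampleThresholds = { COLD: 500, WARM: 300, HOT: 100 };

console.log(`exit code: ${evaluate(sampleData, sampleThresholds)}`);
```

In a CI script, the returned value would be handed to process.exit so that the step fails on a non-zero code.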

This is a very simplified sample of what is possible for benchmarking. The folks working on Jetpack benchmarking libraries have written about comparing benchmarks to recent builds to detect any regressions using a step-fitting approach. You can read about it in this blog post.
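To give a feel for the idea, here is a heavily simplified sketch of step detection. It is not the algorithm from the Jetpack team's post: it only compares the mean of a few results before a build with the mean of a few results from that build onward. The function names, window width, and 10 percent threshold are assumptions.

```javascript
// Arithmetic mean of a non-empty list of numbers.
function mean(xs) {
  return xs.reduce((sum, x) => sum + x, 0) / xs.length;
}

// Returns the indices where the mean of the `width` results starting at that
// index is more than `thresholdPercent` above the mean of the `width` results
// just before it. Comparing windows rather than single runs means that one
// noisy result on its own is not flagged.
function findRegressions(mediansMs, width = 3, thresholdPercent = 10) {
  const hits = [];
  for (let i = width; i + width <= mediansMs.length; i++) {
    const before = mean(mediansMs.slice(i - width, i));
    const after = mean(mediansMs.slice(i, i + width));
    if ((after - before) * 100 > before * thresholdPercent) {
      hits.push(i);
    }
  }
  return hits;
}

// Made-up history of startup medians (ms), oldest build first.
const history = [400, 402, 398, 401, 450, 452, 449, 451];
console.log(findRegressions(history));
```

With this made-up history the only flagged index is 4, the build where the timings step up from about 400ms to about 450ms.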

Developers using CircleCI could implement a similar step-fitting approach by getting historical job data from previous builds using the CircleCI API: https://circleci.com/docs/api/v2/#operation/getJobArtifacts.
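A minimal sketch of fetching that data with the getJobArtifacts endpoint could look like this. It assumes Node.js 18 or later for the global fetch function, a personal API token passed in the Circle-Token header, and that the benchmark files keep the benchmarkData.json suffix; the function names and the project slug in the example are hypothetical.

```javascript
const API_BASE = 'https://circleci.com/api/v2';

// Builds the getJobArtifacts URL. The slug has the form vcs/org/repo,
// for example "gh/my-org/my-repo" (a made-up project).
function artifactsUrl(projectSlug, jobNumber) {
  return `${API_BASE}/project/${projectSlug}/${jobNumber}/artifacts`;
}

// Picks the download URLs of benchmark JSON files out of an API response,
// which lists artifacts under `items`, each with a `path` and a `url`.
function benchmarkJsonUrls(response) {
  return response.items
    .filter(item => item.path.endsWith('benchmarkData.json'))
    .map(item => item.url);
}

// Fetches the artifact list for one job (requires network access and a token).
async function fetchBenchmarkUrls(projectSlug, jobNumber, token) {
  const res = await fetch(artifactsUrl(projectSlug, jobNumber), {
    headers: { 'Circle-Token': token },
  });
  if (!res.ok) throw new Error(`CircleCI API returned ${res.status}`);
  return benchmarkJsonUrls(await res.json());
}

console.log(artifactsUrl('gh/my-org/my-repo', 123));
```

Each returned URL can then be downloaded and its median timings added to the history used for comparison.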

Caveats

The end-to-end benchmarks are by definition flaky tests, and need to be run on real devices. Running on an emulator will not produce results that are close to what your users would see. To illustrate, I have created a benchmarks-emulator job to show you the difference in timings.

The benchmarking tools are still in beta as of writing this (July 2021). To use them, you need the preview version of Android Studio (Arctic Fox). The plugins and libraries are being actively developed, so after updating them, things might not work exactly as described here.

Conclusion

In this article, I covered how to include Android app performance benchmarking in a CI/CD pipeline alongside your other tests. This helps prevent performance regressions from reaching your users as you add new features and other improvements.

We used the new Android Jetpack Macrobenchmark library and showed ways to integrate it with Firebase Test Lab to run benchmarks on real devices. We showed how to analyze the results, failing the build if the application startup time exceeds our allowed threshold.

If you have any feedback or suggestions for what topics I should cover next, contact me on Twitter - @zmarkan.