Deploy a Clojure web application to AWS using Terraform

Software Architect

This is the third blog post in a three-part series about building, testing, and deploying a Clojure web application. You can find the first post here and the second here.

In this post, we will be focusing on how to use HashiCorp Terraform to stand up a fairly complex infrastructure to host our web application Docker containers with a PostgreSQL container and then use CircleCI to deploy to our infrastructure with zero downtime. If you don’t want to go through the laborious task of creating the web application described in the first two posts from scratch, you can get the source by forking this repository and checking out the part-2 branch.

Although we are building a Clojure application, only limited Clojure knowledge is required to follow along with this part of the series.

Prerequisites

In order to build this web application, you need to install the following:

- Java JDK 8 or greater - Clojure runs on the Java Virtual Machine and is in fact just a Java library (JAR). I built this using version 8, but a later version should work fine, too.

- Leiningen - Leiningen, usually referred to as lein (pronounced ‘line’), is the most commonly used Clojure build tool.

- Git - The ubiquitous distributed version control tool.

- Docker - A tool designed to make it easier to create, deploy, and run applications by using containers.

- Docker Compose - A tool for defining and running multi-container Docker applications.

- HashiCorp Terraform - A tool to create and change infrastructure in a predictable and reproducible way. This post was tested using version 0.12.2.

- SSH - Installed as a command line utility. How you install SSH depends on your operating system, so you may need to search for instructions if you don’t already have it.

You will also need to sign up for:

- CircleCI account - CircleCI is a continuous integration and delivery platform.

- GitHub account - GitHub is a web-based hosting service for version control using Git.

- Docker Hub account - Docker Hub is a cloud-based repository in which Docker users and partners create, test, store and distribute container images.

- AWS account - Amazon Web Services provides on-demand computing platforms.

Note: The infrastructure we are going to build will incur a small cost for the AWS services it uses. If you leave the services up for approximately one hour to complete this tutorial, the charge would be between $0.50 and $1.00.

You will also need to have set up your CircleCI, Docker Hub, and web application GitHub accounts as described in part 1 and part 2 of the series.

Create an AWS account & credentials

First, we need to sign up for an AWS account. Although you can pick the “Free” account, the resources we will be using will still incur a charge. You will want to tear down the infrastructure once you’ve finished, and I’ll show you how to do that in this post.

Once you have your AWS account, sign in as your root user. Your username is the email address you used to sign up, but make sure you choose to sign in as the root user. Once signed in, select IAM (Identity and Access Management) from the Services menu.

Select Users from the nav bar on the left side of the screen and click Add User. Create a user and make sure you give it both programmatic and console access.

Give the user administrator access by attaching the existing AdministratorAccess policy, either directly or by adding the user to a group that has that policy.

You can keep the defaults and accept everything else in the setup wizard, but make sure you take careful note of the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY before you leave the Add user Success screen.

![Add user success](//images.ctfassets.net/il1yandlcjgk/5MCHp9w9LhGnO73XCIOzCF/845c3351052b503eb57e32943b1427b6/2019-06-27-add-user.png)

Once you’ve created your IAM user, make a note of your AWS account id (the AWS documentation explains how to find it). Then sign out of the root user by selecting the down arrow next to the account name or number and picking ‘Sign Out’ from the drop-down.

Now sign in to your account as the newly created IAM user. If you are presented with Root user sign in, make sure to pick Sign in to a different account. Use the AWS account id that you took note of earlier, the user name you set up (filmappuser, in my example), and the password you set for management console access.

Once logged in, select EC2 from the Services drop-down and click Key Pairs in the Resources section of the EC2 dashboard.

Click Create Key Pair and enter a key pair name. I’ve used film_ratings_key_pair in the Terraform so if you don’t want to edit the Terraform, use that as the name. Copy the automatically downloaded .pem file to your .ssh/ directory and set the permission on that file like so:

```shell
chmod 400 ~/.ssh/film_ratings_key_pair.pem
```
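SSH refuses to use a private key file that other users can read, which is why the `chmod 400` matters. Here is a minimal sketch of a check you could run before connecting to an instance. It assumes the usual `ls -l` mode-string format, and `key_is_private` is a hypothetical helper, not part of the project:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeeds only if the key file exists and grants no
# permissions to group or others (for example mode 400 or 600).
key_is_private() {
  local key="$1" mode
  [[ -f "$key" ]] || return 1
  # The first 10 characters of `ls -l` are the mode string, e.g. "-r--------".
  mode=$(ls -l "$key" | cut -c1-10)
  # Characters 5-10 hold the group and other permission triplets.
  [[ "${mode:4:6}" == "------" ]]
}
```

For example, `key_is_private ~/.ssh/film_ratings_key_pair.pem && echo "key permissions OK"`.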

Getting the application to wait for the database

This step is not absolutely necessary, but it speeds up the setup of the Elastic Container Service (ECS) resources, so it’s worth doing. When the web application starts in its ECS task container, it has to connect to the database task container via a load balancer (more on this in the section below). However, it can take a few minutes for the load balancer to register the database container. If the web application tries to connect immediately, it fails, and ECS then has to destroy the app container and spin up a new one, which takes more time.

To minimize this, we will add a borrowed script that waits for the database connection. Create a wait-for-it.sh file in the root directory of the web application project and copy the contents of this file into it.

Then change the Dockerfile in the film ratings web application project to read like so:

```dockerfile
FROM openjdk:8u181-alpine3.8

WORKDIR /

RUN apk update && apk add bash

COPY wait-for-it.sh wait-for-it.sh
COPY target/film-ratings.jar film-ratings.jar

EXPOSE 3000

RUN chmod +x wait-for-it.sh

CMD ["sh", "-c", "./wait-for-it.sh --timeout=90 $DB_HOST:5432 -- java -jar film-ratings.jar"]
```

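This makes the container run the wait-for-it.sh script first. The script repeatedly tries to connect to DB_HOST on port 5432 and, once it connects, runs java -jar film-ratings.jar. Note that the commonly used version of wait-for-it.sh also runs the command if the 90-second timeout expires, unless you pass it the --strict flag.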
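To make the mechanism concrete, here is a stripped-down sketch of what such a wait script does. It is not the borrowed wait-for-it.sh; it assumes bash’s `/dev/tcp` redirection is available, and `wait_for_port` is a hypothetical name. Unlike wait-for-it.sh without --strict, this sketch never runs the command after a timeout:

```bash
#!/usr/bin/env bash
# Hypothetical, simplified stand-in for wait-for-it.sh (not the borrowed script):
# poll HOST:PORT about once a second until a TCP connection succeeds, giving up
# after TIMEOUT seconds. Usage: wait_for_port HOST PORT TIMEOUT [COMMAND ARGS...]
wait_for_port() {
  local host="$1" port="$2" timeout="$3" elapsed=0
  shift 3
  # The subshell opens (and, on exit, closes) a TCP connection via bash's /dev/tcp.
  until (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; do
    if (( elapsed >= timeout )); then
      echo "timed out after ${timeout}s waiting for ${host}:${port}" >&2
      return 1
    fi
    sleep 1
    elapsed=$(( elapsed + 1 ))
  done
  # Connected: run the wrapped command, if one was given.
  if (( $# > 0 )); then
    "$@"
  fi
}
```

Inside the container this would be called as `wait_for_port "$DB_HOST" 5432 90 java -jar film-ratings.jar`. The elapsed count ignores the time each connection attempt takes, so the timeout is approximate.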

Also, update the version in your project.clj file to 0.1.1:

```clojure
(defproject film-ratings "0.1.1"
  ...
```

Once you’ve made these changes, add and commit them to Git and push them to your GitHub repository for the film ratings project:

```console
$ git add . --all
$ git commit -m "Add wait for it script"
[master 45967e8] Add wait for it script
 2 files changed, 185 insertions(+), 1 deletion(-)
 create mode 100644 wait-for-it.sh
$ git push
```

You can check that the CircleCI build runs OK in your CircleCI dashboard.

Now tag your change as 0.1.1 and push that tag so that CircleCI publishes this as the latest version:

```console
$ git tag -a 0.1.1 -m "v0.1.1"
$ git push origin 0.1.1
...
 * [new tag] 0.1.1 -> 0.1.1
```

Check on CircleCI that the build_and_deploy workflow publishes the Docker image to Docker Hub.

AWS infrastructure using Terraform

We are going to use Terraform to build a production-like infrastructure in AWS. This is quite a lot of Terraform config so I am not going to walk through every resource that I’ve defined. Feel free to review the code at your leisure.

First, fork my film-ratings-terraform repo in GitHub using the Fork button to the right of the repository title and clone your forked version to your local machine.

```console
$ git clone <the github URL for your forked version of chrishowejones/film-ratings-terraform>
```

Now that you’ve forked and cloned the Terraform repository, let’s take a look at some of the important bits of it. What does the Terraform do overall?

The diagram below is an extremely simplified version of a few of the more important parts of the infrastructure.

This shows that we are going to set up two ECS tasks called film_ratings_app and film_ratings_db that are going to run in ECS containers with two instances of the app and one of the database. The app and db tasks will run inside their own services similarly called film_ratings_app_service and film_ratings_db_service, respectively (not shown in the diagram).

Here are the app service and app task definitions:

```hcl
resource "aws_ecs_service" "film_ratings_app_service" {
  name            = "film_ratings_app_service"
  iam_role        = "${aws_iam_role.ecs-service-role.name}"
  cluster         = "${aws_ecs_cluster.film_ratings_ecs_cluster.id}"
  task_definition = "${aws_ecs_task_definition.film_ratings_app.family}:${max("${aws_ecs_task_definition.film_ratings_app.revision}", "${data.aws_ecs_task_definition.film_ratings_app.revision}")}"
  depends_on      = [ "aws_ecs_service.film_ratings_db_service"]
  desired_count   = "${var.desired_capacity}"
  deployment_minimum_healthy_percent = "50"
  deployment_maximum_percent         = "100"

  lifecycle {
    ignore_changes = ["task_definition"]
  }

  load_balancer {
    target_group_arn = "${aws_alb_target_group.film_ratings_app_target_group.arn}"
    container_port   = 3000
    container_name   = "film_ratings_app"
  }
}
```

Note: The minimum healthy percentage is 50% and the maximum percentage is 100%. With two desired tasks, this allows the service to stop one container task (leaving only one running) and use the freed resources to start a task with the new version of the container during a rolling deployment.
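The arithmetic behind that note: as documented for ECS deployment configuration, the minimum healthy count is the desired count times the percentage, rounded up, and the maximum count is rounded down. A small sketch of that calculation; `deployment_bounds` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: how many tasks ECS must keep running (lower bound) and
# may run (upper bound) during a rolling deployment.
#   lower = ceil(desired * min_healthy_percent / 100)
#   upper = floor(desired * maximum_percent / 100)
deployment_bounds() {
  local desired="$1" min_pct="$2" max_pct="$3"
  local lower=$(( (desired * min_pct + 99) / 100 ))  # integer ceiling
  local upper=$(( desired * max_pct / 100 ))         # integer floor
  echo "min=${lower} max=${upper}"
}
```

`deployment_bounds 2 50 100` prints `min=1 max=2`: ECS may stop one of the two app tasks but can never run a third, so the slot freed by the stopped task is the one the new revision uses.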

```hcl
data "aws_ecs_task_definition" "film_ratings_app" {
  task_definition = "${aws_ecs_task_definition.film_ratings_app.family}"
  depends_on      = ["aws_ecs_task_definition.film_ratings_app"]
}

resource "aws_ecs_task_definition" "film_ratings_app" {
  family                = "film_ratings_app"
  container_definitions = <<DEFINITION
[
  {
    "name": "film_ratings_app",
    "image": "${var.film_ratings_app_image}",
    "essential": true,
    "portMappings": [
      {
        "containerPort": 3000,
        "hostPort": 3000
      }
    ],
    "environment": [
      {
        "name": "DB_HOST",
        "value": "${aws_lb.film_ratings_nw_load_balancer.dns_name}"
      },
      {
        "name": "DB_PASSWORD",
        "value": "${var.db_password}"
      }
    ],
    "logConfiguration": {
      "logDriver": "awslogs",
      "options": {
        "awslogs-group": "film_ratings_app",
        "awslogs-region": "${var.region}",
        "awslogs-stream-prefix": "ecs"
      }
    },
    "memory": 1024,
    "cpu": 256
  }
]
DEFINITION
}
```
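Why `max()` in the service’s task_definition? The deploy.sh script later in this post registers new revisions of the film_ratings_app family outside Terraform. The data source looks up the latest active revision in the family, while the resource only knows the revision Terraform itself created, so taking the larger of the two keeps the service on the newest revision; the `ignore_changes` rule additionally stops later `terraform apply` runs from resetting it. The same selection, sketched in bash with a hypothetical `task_definition_ref` helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring the Terraform expression
#   "${family}:${max(resource_revision, data_revision)}"
# i.e. build a family:revision reference using whichever revision is newer.
task_definition_ref() {
  local family="$1" resource_rev="$2" data_rev="$3"
  local newest=$(( resource_rev > data_rev ? resource_rev : data_rev ))
  echo "${family}:${newest}"
}
```

For instance, if Terraform created revision 1 and CircleCI has since registered revision 4, the service should reference `film_ratings_app:4`.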

The app instances need to talk to the db instance on port 5432, and they do so by routing their requests through a network load balancer (film-ratings-nw-load-balancer). That is why, when we set up the film_ratings_app task, we pass the containers the network load balancer’s DNS name as DB_HOST, so that the application within each container can use it to reach the database.

```hcl
...
resource "aws_lb_target_group" "film_ratings_db_target_group" {
  name        = "film-ratings-db-target-group"
  port        = "5432"
  protocol    = "TCP"
  vpc_id      = "${aws_vpc.film_ratings_vpc.id}"
  target_type = "ip"

  health_check {
    healthy_threshold   = "3"
    unhealthy_threshold = "3"
    interval            = "10"
    port                = "traffic-port"
    protocol            = "TCP"
  }

  # Terraform 0.12 requires tags to be assigned as an argument (tags = {...}).
  tags = {
    Name = "film-ratings-db-target-group"
  }
}

resource "aws_lb_listener" "film_ratings_nw_listener" {
  load_balancer_arn = "${aws_lb.film_ratings_nw_load_balancer.arn}"
  port              = "5432"
  protocol          = "TCP"

  default_action {
    target_group_arn = "${aws_lb_target_group.film_ratings_db_target_group.arn}"
    type             = "forward"
  }
}
```

The application load balancer (film-ratings-alb-load-balancer) is what we will use to point our browser to. It will be responsible for routing HTTP requests to one of the two instances of the film_ratings_app containers, mapping the default port 80 to port 3000 for us.

```hcl
...
resource "aws_alb_target_group" "film_ratings_app_target_group" {
  name                 = "film-ratings-app-target-group"
  port                 = 3000
  protocol             = "HTTP"
  vpc_id               = "${aws_vpc.film_ratings_vpc.id}"
  deregistration_delay = "10"

  health_check {
    healthy_threshold   = "2"
    unhealthy_threshold = "6"
    interval            = "30"
    matcher             = "200,301,302"
    path                = "/"
    protocol            = "HTTP"
    timeout             = "5"
  }

  stickiness {
    type = "lb_cookie"
  }

  tags = {
    Name = "film-ratings-app-target-group"
  }
}

resource "aws_alb_listener" "alb-listener" {
  load_balancer_arn = "${aws_alb.film_ratings_alb_load_balancer.arn}"
  port              = "80"
  protocol          = "HTTP"

  default_action {
    target_group_arn = "${aws_alb_target_group.film_ratings_app_target_group.arn}"
    type             = "forward"
  }
}

resource "aws_autoscaling_attachment" "asg_attachment_film_rating_app" {
  autoscaling_group_name = "film-ratings-autoscaling-group"
  alb_target_group_arn   = "${aws_alb_target_group.film_ratings_app_target_group.arn}"
  depends_on             = [ "aws_autoscaling_group.film-ratings-autoscaling-group" ]
}
```

Another important part of the infrastructure shown is that the film_ratings_db container has a volume mounted that is mapped to an Elastic File System volume to persist the data outside the EC2 instances that are running the containers. We do this so that if we have to scale the instances up or down (or if instances die), we don’t lose the data in the database.

```hcl
resource "aws_ecs_task_definition" "film_ratings_db" {
  family = "film_ratings_db"

  volume {
    name      = "filmdbvolume"
    host_path = "/mnt/efs/postgres"
  }

  network_mode          = "awsvpc"
  container_definitions = <<DEFINITION
[
  {
    "name": "film_ratings_db",
    "image": "postgres:alpine",
    "essential": true,
    "portMappings": [
      {
        "containerPort": 5432
      }
    ],
    "environment": [
      {
        "name": "POSTGRES_DB",
        "value": "filmdb"
      },
      {
        "name": "POSTGRES_USER",
        "value": "filmuser"
      },
      {
        "name": "POSTGRES_PASSWORD",
        "value": "${var.db_password}"
      }
    ],
    "mountPoints": [
      {
        "readOnly": null,
        "containerPath": "/var/lib/postgresql/data",
        "sourceVolume": "filmdbvolume"
      }
    ],
    "logConfiguration": {
      "logDriver": "awslogs",
      "options": {
        "awslogs-group": "film_ratings_db",
        "awslogs-region": "${var.region}",
        "awslogs-stream-prefix": "ecs"
      }
    },
    "memory": 512,
    "cpu": 256
  }
]
DEFINITION
}
```

The launch configuration’s user_data entry makes sure that the EFS volume is mounted whenever an EC2 instance starts.

```hcl
...
  user_data = <<EOF
#!/bin/bash
echo ECS_CLUSTER=${var.ecs_cluster} >> /etc/ecs/ecs.config
mkdir -p /mnt/efs/postgres
cd /mnt
sudo yum install -y amazon-efs-utils
sudo mount -t efs ${aws_efs_mount_target.filmdbefs-mnt.0.dns_name}:/ efs
EOF
```

Creating the AWS infrastructure

We are almost ready to run our Terraform, but before we do so we need to make sure we have all the correct values for the various variables. Let’s look at the terraform.tfvars file.

```hcl
# You may need to edit these variables to match your config
db_password            = "password"
ecs_cluster            = "film_ratings_cluster"
ecs_key_pair_name      = "film_ratings_key_pair"
region                 = "eu-west-1"
film_ratings_app_image = "chrishowejones/film-ratings-app:latest"

# no need to change these unless you want to
film_ratings_vpc            = "film_ratings_vpc"
film_ratings_network_cidr   = "210.0.0.0/16"
film_ratings_public_01_cidr = "210.0.0.0/24"
film_ratings_public_02_cidr = "210.0.10.0/24"
max_instance_size           = 3
min_instance_size           = 1
desired_capacity            = 2
```

You can edit the password if required, but for this demo it’s not really necessary. You could also supply the password through an environment variable, TF_VAR_db_password, but because values in terraform.tfvars take precedence over environment variables, you would have to remove the db_password line from the file for it to take effect. The ecs_key_pair_name value must match the name of the key pair you created earlier (the one whose .pem file you saved in your .ssh/ directory). You must also change film_ratings_app_image to the Docker Hub repository image name for your image and not mine (make sure you have published a Docker Hub image with that name; you should have if you’ve already completed part 2 of this blog series).
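Terraform reads an environment variable named `TF_VAR_<name>` as the value of the input variable `<name>`, with lower precedence than terraform.tfvars. A minimal pre-flight sketch for the variables you choose to supply from your shell, such as the aws_access_key_id and aws_secret_access_key that Terraform prompts for; `require_tf_vars` is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: print every named Terraform input variable
# that has no non-empty TF_VAR_<name> environment variable, and fail if any
# are missing.
require_tf_vars() {
  local name env_name missing=0
  for name in "$@"; do
    env_name="TF_VAR_${name}"
    # ${!env_name} is bash indirect expansion: the value of the variable
    # whose name is stored in env_name (empty if that variable is unset).
    if [[ -z "${!env_name}" ]]; then
      echo "missing: ${env_name}"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `require_tf_vars aws_access_key_id aws_secret_access_key && terraform plan` only runs the plan once both credentials are exported.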

If you want to use a different AWS region from the one I am using, you will need to change the region value. The data.tf file makes sure that `${data.aws_ami.latest_ecs.id}` resolves to the appropriate ECS-optimized AMI for your region.

There shouldn’t be a reason to change anything else.

Running the Terraform

Once you have the terraform.tfvars values set correctly, you need to initialize Terraform in your cloned film-ratings-terraform directory:

```console
$ terraform init

Initializing provider plugins...
- Checking for available provider plugins on https://releases.hashicorp.com...
...
Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

Then you can run a Terraform plan to check what resources will be created when it’s applied. You will be prompted for your AWS access key id and AWS secret access key. If you tire of typing these in, you can set environment variables TF_VAR_aws_access_key_id and TF_VAR_aws_secret_access_key in your terminal session.

```console
$ terraform plan
var.aws_access_key_id
  AWS access key

  Enter a value: ...
...
Refreshing Terraform state in-memory prior to plan...
...
Plan: 32 to add, 0 to change, 0 to destroy.

------------------------------------------------------------------------

Note: You didn't specify an "-out" parameter to save this plan, so Terraform
can't guarantee that exactly these actions will be performed if
"terraform apply" is subsequently run.
```

To actually build the AWS resources listed in the plan, use the following command (enter `yes` when prompted with `Do you want to perform these actions?`):

```console
$ terraform apply
data.aws_iam_policy_document.ecs-instance-policy: Refreshing state...
data.aws_availability_zones.available: Refreshing state...
data.aws_iam_policy_document.ecs-service-policy: Refreshing state...
...
Plan: 32 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?
  Terraform will perform the actions described above.
  Only 'yes' will be accepted to approve.

  Enter a value: yes
...
aws_autoscaling_group.film-ratings-autoscaling-group: Creation complete after 1m10s (ID: film-ratings-autoscaling-group)

Apply complete! Resources: 32 added, 0 changed, 0 destroyed.

Outputs:

app-alb-load-balancer-dns-name = film-ratings-alb-load-balancer-895483441.eu-west-1.elb.amazonaws.com
app-alb-load-balancer-name = film-ratings-alb-load-balancer
ecs-instance-role-name = ecs-instance-role
ecs-service-role-arn = arn:aws:iam::731430262381:role/ecs-service-role
film-ratings-app-target-group-arn = arn:aws:elasticloadbalancing:eu-west-1:731430262381:targetgroup/film-ratings-app-target-group/8a35ef20a2bab372
film-ratings-db-target-group-arn = arn:aws:elasticloadbalancing:eu-west-1:731430262381:targetgroup/film-ratings-db-target-group/5de91812c3fb7c63
film_ratings_public_sg_id = sg-08af1f2ab0bb6ca95
film_ratings_public_sn_01_id = subnet-00b42a3598abf988f
film_ratings_public_sn_02_id = subnet-0bb02c32db76d7b05
film_ratings_vpc_id = vpc-06d431b5e5ad36195
mount-target-dns = fs-151074dd.efs.eu-west-1.amazonaws.com
nw-lb-load-balancer-dns-name = film-ratings-nw-load-balancer-4c3a6e6a0dab3cfb.elb.eu-west-1.amazonaws.com
nw-lb-load-balancer-name = film-ratings-nw-load-balancer
region = eu-west-1
```

Terraform can take up to five minutes to run, and it can take another five minutes for the load balancers and the ECS services to properly recognize each other. You can check the progress by logging into the AWS console, selecting ECS from the Services drop-down, selecting your cluster (named film_ratings_cluster, by default), and examining the logs attached to each service. The film_ratings_app_service can take a little while to connect. Occasionally, even with the wait-for-it.sh script, the first task to start fails to connect to the database, and you have to wait for the ECS service to start a replacement task.

Once the ECS services and tasks have started, you can try to connect to the application through your browser, using the app-alb-load-balancer-dns-name value as the URL (film-ratings-alb-load-balancer-895483441.eu-west-1.elb.amazonaws.com in my output above; your value will differ).

Don’t worry if you see an HTTP status 502 or 503 initially as even after the ECS services are up, it can take a few minutes for the application load balancer to detect that everything is healthy. Eventually, if you enter the URL for the alb load balancer DNS name in your browser, film-ratings-alb-load-balancer-895483441.eu-west-1.elb.amazonaws.com in my example, you should see this.

![Film ratings page](//images.ctfassets.net/il1yandlcjgk/1HMKd4c429Xn1hiDK84hiQ/972e7de203491e10115f8012eb85e7c8/2019-06-27-film-ratings-page.png)
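Rather than refreshing the browser while the 502 and 503 responses clear, you can poll the application load balancer from a terminal. A sketch, assuming curl is installed; `wait_for_http` is a hypothetical helper, and it treats 200, 301, and 302 as success to match the target group’s health check `matcher`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: poll URL until it answers 200, 301 or 302 (the codes the
# film-ratings-app-target-group health check accepts) or the attempts run out.
# Usage: wait_for_http URL ATTEMPTS DELAY_SECONDS
wait_for_http() {
  local url="$1" attempts="$2" delay="$3" code i
  for (( i = 1; i <= attempts; i++ )); do
    # -s hides progress, -o discards the body, -w prints just the status code;
    # curl prints 000 if it cannot get a response at all.
    code=$(curl -s -o /dev/null --max-time 10 -w '%{http_code}' "$url")
    case "$code" in
      200|301|302)
        echo "ready (HTTP ${code})"
        return 0
        ;;
    esac
    echo "attempt ${i}/${attempts}: HTTP ${code:-none}, retrying" >&2
    if (( i < attempts )); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, `wait_for_http "http://<your app-alb-load-balancer-dns-name>/" 20 15` keeps trying for roughly five minutes.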

At this point, it’s worth noting that to tear down all these resources you can issue the terraform destroy command (entering ‘yes’ when prompted if you really want to destroy all the resources).

Getting CircleCI to deploy to an ECS cluster

Next, we want our continuous integration service, CircleCI, to deploy our Docker containers for us.

So far in our CircleCI configuration, we are building and testing our application whenever we push a change to GitHub, and we are building, testing, packaging as a Docker container, and publishing the Docker container to Docker Hub whenever we tag our project in GitHub.

In this section of the post, we will add the config to also push our packaged Docker container to our ECS cluster when we tag our project, but we will add a manual approval step that allows us to control the moment we start the deployment to ECS. Since we have set up our ECS services to use rolling deployment, this process will deploy our changed, tagged application with zero downtime.

Right now our .circleci/config.yml has three jobs, build, build-docker, and publish-docker. Let’s add another job below publish-docker called deploy.

```yaml
...
  deploy:
    docker:
      - image: circleci/python:3.6.1
    environment:
      AWS_DEFAULT_OUTPUT: json
      IMAGE_NAME: chrishowejones/film-ratings-app
    steps:
      - checkout
      - restore_cache:
          key: v1-{{ checksum "requirements.txt" }}
      - run:
          name: Install the AWS CLI
          command: |
            python3 -m venv venv
            . venv/bin/activate
            pip install -r requirements.txt
      - save_cache:
          key: v1-{{ checksum "requirements.txt" }}
          paths:
            - "venv"
      - run:
          name: Deploy
          command: |
            . venv/bin/activate
            ./deploy.sh
...
```

This job will install the AWS CLI tool using pip (a Python package manager) by reading the requirements.txt file for the CLI package requirement. Then the run step Deploy runs a deploy.sh script which we haven’t created yet. Remember to change the IMAGE_NAME to the Docker Hub repository image name for your image and not mine. Let’s add the extra jobs we need to the end of the build_and_deploy workflow before we move on.

...

- hold:

requires:

- publish-docker

type: approval

filters:

branches:

ignore: /.*/

tags:

only: /^\d+\.\d+\.\d+$/

- deploy:

requires:

- hold

filters:

branches:

ignore: /.*/

tags:

only: /^\d+\.\d+\.\d+$/

We have added two new jobs to the build_and_deploy workflow. The first, hold, requires the previous publish-docker step to complete before it executes and is of type ‘approval’. The ‘approval’ type is a built-in CircleCI type that will stop the workflow and require a user to click Approve for it to continue to any subsequent steps.

The deploy job relies on hold being approved.

Note: Like every other job in the build_and_deploy workflow, these jobs are only triggered when a semantic version style tag is pushed (e.g., 0.1.1).
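The filter `/^\d+\.\d+\.\d+$/` must match the whole tag name. To check a tag before pushing it, here is an equivalent test in bash; `is_release_tag` is a hypothetical helper, and bash’s extended regular expressions use `[0-9]` in place of `\d`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only for tags shaped like 0.1.1 or 10.2.33,
# the same pattern as the CircleCI filter /^\d+\.\d+\.\d+$/.
is_release_tag() {
  [[ "$1" =~ ^[0-9]+\.[0-9]+\.[0-9]+$ ]]
}
```

A tag such as `v0.2.0` or `0.2.0-rc1` fails this check and would not start the hold and deploy jobs.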

Let’s add the requirements.txt file in the project root directory to make sure the AWS CLI tool is installed by CircleCI. The contents of this file should be:

```text
awscli>=1.16.0
```

Let’s also add the deploy.sh script referenced in the deploy job by creating this new file in the root directory of our project.

```bash
#!/usr/bin/env bash

# more bash-friendly output for jq
JQ="jq --raw-output --exit-status"

configure_aws_cli(){
    aws --version
    aws configure set default.region eu-west-1 # change this if your AWS region differs
    aws configure set default.output json
}

deploy_cluster() {

    family="film_ratings_app"

    make_task_def
    # stop here if the new revision could not be registered
    register_definition || return 1
    if [[ $(aws ecs update-service --cluster film_ratings_cluster --service film_ratings_app_service --task-definition $revision | \
                   $JQ '.service.taskDefinition') != "$revision" ]]; then
        echo "Error updating service."
        return 1
    fi

    # wait for older revisions to disappear
    # not really necessary, but nice for demos
    for attempt in {1..15}; do
        if stale=$(aws ecs describe-services --cluster film_ratings_cluster --services film_ratings_app_service | \
                       $JQ ".services[0].deployments | .[] | select(.taskDefinition != \"$revision\") | .taskDefinition"); then
            echo "Waiting for stale deployments:"
            echo "$stale"
            sleep 45
        else
            echo "Deployed!"
            return 0
        fi
    done
    echo "Service update took too long."
    return 1
}

make_task_def(){
    task_template='[
  {
    "name": "film_ratings_app",
    "image": "%s:%s",
    "essential": true,
    "portMappings": [
      {
        "containerPort": 3000,
        "hostPort": 3000
      }
    ],
    "environment": [
      {
        "name": "DB_HOST",
        "value": "%s"
      },
      {
        "name": "DB_PASSWORD",
        "value": "%s"
      }
    ],
    "logConfiguration": {
      "logDriver": "awslogs",
      "options": {
        "awslogs-group": "film_ratings_app",
        "awslogs-region": "eu-west-1",
        "awslogs-stream-prefix": "ecs"
      }
    },
    "memory": 1024,
    "cpu": 256
  }
]'

    # quote the arguments so each value fills exactly one %s placeholder
    task_def=$(printf "$task_template" "$IMAGE_NAME" "$CIRCLE_TAG" "$DB_HOST" "$DB_PASSWORD")
}

register_definition() {
    if revision=$(aws ecs register-task-definition --container-definitions "$task_def" --family $family | $JQ '.taskDefinition.taskDefinitionArn'); then
        echo "Revision: $revision"
    else
        echo "Failed to register task definition"
        return 1
    fi
}

configure_aws_cli
deploy_cluster
```

This script makes a new task definition for our film_ratings_app task; its config mirrors the Terraform definition in film-ratings-app-task-definition.tf. It then registers the new definition and updates film_ratings_app_service to use the new revision, which ECS rolls out to the cluster.

Notice that the task_def set up in the make_task_def function substitutes in values from variables called $IMAGE_NAME, $CIRCLE_TAG, $DB_HOST, and $DB_PASSWORD. We will set these variables in the CircleCI project environment variables later.
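printf fills each `%s` in the template with the next argument, in order, so the argument order in make_task_def must match the order of the placeholders. A cut-down sketch of the substitution; the one-line template and `render_task_def` are hypothetical, not the full task definition:

```bash
#!/usr/bin/env bash
# Hypothetical, trimmed-down version of make_task_def: the first two %s are
# filled with the image name and tag, the third with the DB host.
render_task_def() {
  local template='{"image": "%s:%s", "DB_HOST": "%s"}'
  # Quote every argument: an unquoted value containing spaces would become
  # extra printf arguments, and printf reuses the template for leftovers.
  printf "$template" "$1" "$2" "$3"
}
```

The values are inserted as-is and are not JSON-escaped, so a DB_PASSWORD containing `"` or `\` would produce invalid JSON; building the document with `jq -n --arg` is a safer alternative if your values may contain such characters.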

Note: If you are using a region other than eu-west-1, you will need to change the default.region setting in configure_aws_cli and the awslogs-region value in the task template.

Before moving on, let’s make this new script file executable.

```console
$ chmod +x deploy.sh
```

Now, let’s add those variables and the AWS variables we require to our CircleCI config.

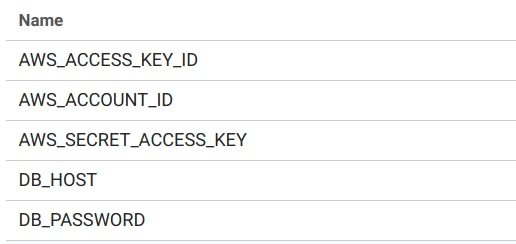

Go to your CircleCI dashboard, select the settings for your film ratings project, select Environment Variables under Build Settings, and enter the variables described below.

Note: You should already have DOCKERHUB_PASS and DOCKERHUB_USERNAME set up here.

Use the AWS access key id and AWS secret access key you got when you created your IAM user (the same values you entered when running the Terraform), along with your AWS account id (see the AWS documentation if you need to find it again). Set DB_PASSWORD to whatever value you used in the terraform.tfvars file.

The DB_HOST value needs to be the DNS name of the network load balancer that routes TCP requests to the film_ratings_db task instance. You can find it in the output from the terraform apply command. Alternatively, log on to your AWS management console, navigate from Services to EC2 and from there to Load Balancers, and select the film-ratings-nw-load-balancer entry; the DNS name is shown in the Basic Configuration section.

```text
nw-lb-load-balancer-dns-name = film-ratings-nw-load-balancer-4c3a6e6a0dab3cfb.elb.eu-west-1.amazonaws.com
nw-lb-load-balancer-name = film-ratings-nw-load-balancer
region = eu-west-1
```

In the example here, the DB_HOST variable in CircleCI should be set to film-ratings-nw-load-balancer-4c3a6e6a0dab3cfb.elb.eu-west-1.amazonaws.com.
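With Terraform 0.12 you can also print a single output directly by running `terraform output nw-lb-load-balancer-dns-name` in the film-ratings-terraform directory. If you saved the full outputs listing instead (say with `terraform output > outputs.txt`), a sketch like this pulls one value out of its `name = value` lines; `tf_output_value` and outputs.txt are hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "name = value" lines (the format of the Outputs
# listing above) on stdin and print the value for the requested name.
tf_output_value() {
  # Field 1 is the output name, field 2 the "=", field 3 the value.
  awk -v key="$1" '$1 == key && $2 == "=" { print $3 }'
}
```

For example, `tf_output_value nw-lb-load-balancer-dns-name < outputs.txt` prints the value to paste into DB_HOST.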

Note: If you’ve destroyed your AWS resources, you will need to recreate them by running the terraform apply command in the film-ratings-terraform directory, and then use the DNS name of the new network load balancer.

Once you have set up the environment variables, you can commit the changes to the CircleCI config and the new deploy script file and push these to GitHub.

```console
$ git add . --all
$ git commit -m "Added config to deploy to ECS"
$ git push origin master
```

Change the application and deploy

Now we are going to prove this CircleCI config is working by adding some functionality to our application and then tagging it and deploying the changes to the ECS cluster.

Let’s add a search feature to our application.

First, add the mappings for the routes and handler keys to the resources/film_ratings/config.edn file to show the search form and handle the post of the search form:

```clojure
...
 :duct.module/ataraxy
 {[:get "/"] [:index]
  "/add-film"
  {:get [:film/show-create]
   [:post {film-form :form-params}] [:film/create film-form]}
  [:get "/list-films"] [:film/list]
  "/find-by-name"
  {:get [:film/show-search]
   [:post {search-form :form-params}] [:film/find-by-name search-form]}}

 :film-ratings.handler/index {}
 :film-ratings.handler.film/show-create {}
 :film-ratings.handler.film/create {:db #ig/ref :duct.database/sql}
 :film-ratings.handler.film/list {:db #ig/ref :duct.database/sql}
 :film-ratings.handler.film/show-search {}
 :film-ratings.handler.film/find-by-name {:db #ig/ref :duct.database/sql}
...
```

Next, let’s add the handlers for the show-search and find-by-name keys to the bottom of the src/film_ratings/handler/film.clj file:

```clojure
...
(defmethod ig/init-key :film-ratings.handler.film/show-search [_ _]
  (fn [_]
    [::response/ok (views.film/search-film-by-name-view)]))

(defmethod ig/init-key :film-ratings.handler.film/find-by-name [_ {:keys [db]}]
  (fn [{[_ search-form] :ataraxy/result :as request}]
    (let [name (get search-form "name")
          films-list (boundary.film/fetch-films-by-name db name)]
      (if (seq films-list)
        [::response/ok (views.film/list-films-view films-list {})]
        [::response/ok (views.film/list-films-view [] {:messages [(format "No films found for %s." name)]})]))))
```

Let’s add a new button to the index view in the src/film_ratings/views/index.clj file:

```clojure
(defn list-options []
  (page
   [:div.container.jumbotron.bg-white.text-center
    [:row
     [:p
      [:a.btn.btn-primary {:href "/add-film"} "Add a Film"]]]
    [:row
     [:p
      [:a.btn.btn-primary {:href "/list-films"} "List Films"]]]
    [:row
     [:p
      [:a.btn.btn-primary {:href "/find-by-name"} "Search Films"]]]]))
```

Now we need the search-film-by-name-view function at the bottom of the src/film_ratings/views/film.clj file:

```clojure
...
(defn search-film-by-name-view
  []
  (page
   [:div.container.jumbotron.bg-light
    [:div.row
     [:h2 "Search for film by name"]]
    [:div
     (form-to [:post "/find-by-name"]
       (anti-forgery-field)
       [:div.form-group.col-12
        (label :name "Name:")
        (text-field {:class "mb-3 form-control" :placeholder "Enter film name"} :name)]
       [:div.form-group.col-12.text-center
        (submit-button {:class "btn btn-primary text-center"} "Search")])]]))
```

The database boundary protocol and its implementation need the fetch-films-by-name function that the find-by-name handler calls. Add the function to the FilmDatabase protocol, and its implementation to the extend-protocol form, in the src/film_ratings/boundary/film.clj file like so:

```clojure
...
(defprotocol FilmDatabase
  (list-films [db])
  (fetch-films-by-name [db name])
  (create-film [db film]))

(extend-protocol FilmDatabase
  duct.database.sql.Boundary
  (list-films [{db :spec}]
    (jdbc/query db ["SELECT * FROM film"]))
  (fetch-films-by-name [{db :spec} name]
    (let [search-term (str "%" name "%")]
      (jdbc/query db ["SELECT * FROM film WHERE LOWER(name) like LOWER(?)" search-term])))
  (create-film [{db :spec} film]
    (try
      (let [result (jdbc/insert! db :film film)]
        (if-let [id (val (ffirst result))]
          {:id id}
          {:errors ["Failed to add film."]}))
      (catch SQLException ex
        (log/errorf "Failed to insert film. %s\n" (.getMessage ex))
        {:errors [(format "Film not added due to %s" (.getMessage ex))]}))))
```
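fetch-films-by-name wraps the search text in `%` wildcards and lowercases both sides, so the query is a case-insensitive substring match. A bash sketch of the same matching rule, handy for predicting which of your test films a search should return; `name_matches` is a hypothetical helper, and unlike SQL LIKE it treats any `%` or `_` the user types literally rather than as wildcards:

```bash
#!/usr/bin/env bash
# Hypothetical helper mirroring: LOWER(name) LIKE LOWER('%' || term || '%')
name_matches() {
  local name term
  name=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
  term=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
  # The quoted term is matched literally anywhere inside the name.
  [[ "$name" == *"$term"* ]]
}
```

One consequence worth knowing: an empty search term matches every film, just as `LIKE '%%'` does, so submitting a blank search form lists all films.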

Also, change the version number of the build in the project file to 0.2.0.

```clojure
(defproject film-ratings "0.2.0"
  ...
```

You should now be able to test this by running:

```console
$ lein repl
nREPL server started on port 43477 on host 127.0.0.1 - nrepl://127.0.0.1:43477
REPL-y 0.3.7, nREPL 0.2.12
Clojure 1.9.0
Java HotSpot(TM) 64-Bit Server VM 1.8.0_191-b12
    Docs: (doc function-name-here)
          (find-doc "part-of-name-here")
  Source: (source function-name-here)
 Javadoc: (javadoc java-object-or-class-here)
    Exit: Control+D or (exit) or (quit)
 Results: Stored in vars *1, *2, *3, an exception in *e

user=> (dev)
:loaded
dev=> (go)
:duct.server.http.jetty/starting-server {:port 3000}
:initiated
dev=>
```

If you open a browser at http://localhost:3000/, you should see a Search Films button on the index page.

Now add some films to your test (SQLite) database and use Search Films to take you to the search form. Fill in part of the name of one or more of your films.

See if you get the results you expect from the search by pressing Search.

You can also try a non-matching search.

Time to commit your changes to GitHub.

```console
$ git add . --all
$ git commit -m "Added search for films"
$ git push origin master
```

You can check that the CircleCI build runs OK in your CircleCI dashboard.

Next, we are going to tag our build with the new version 0.2.0.

```console
$ git tag -a 0.2.0 -m "v0.2.0"
$ git push origin 0.2.0
...
 * [new tag] 0.2.0 -> 0.2.0
```

This should trigger the build_and_deploy workflow in CircleCI.

After a couple of minutes or so you should see the workflow finish publishing to Docker Hub and then go on hold.

If you click the hold job and then Approve it, the workflow will continue and deploy to ECS. The deploy step can take several minutes and AWS Elastic Container Service can take up to five minutes to rolling deploy both instances. You can check the progress of the ECS deployment in the ECS console. If you try entering the application load balancer DNS name in a browser after about 5 minutes or so, you should see the new search button and you can add films and search for them.

Don’t forget to destroy the AWS resources when you’ve finished with them by running the terraform destroy command in the film-ratings-terraform directory.

Summary

Congratulations! If you’ve followed the entire blog series, you’ve created a Clojure web application with a PostgreSQL database, tested it, built it, packaged it in a Docker container, published the container, stood up an AWS Elastic Container Service cluster with Terraform, and deployed to the cluster with CircleCI!

This is a realistic, if slightly simple, set up that could be used in a live web application. You would want to add a web domain pointing to your application load balancer, secure the communication with a TLS certificate, and add more monitoring, but you’ve already built the majority of what you need.