How the Insights team uses Insights to optimize our own pipelines

Senior Software Engineer, Insights

Here on the CircleCI Insights team we don’t just develop stuff for CircleCI users, we are CircleCI users. Really, there’s no better way to get to know your product than to use it, and the Insights team is no exception.

A few months ago, we realized that our pipeline configuration for the Insights UI left much to be desired. End-to-end tests were slowing down our pull requests, and we were spending a little too much time waiting for deploys: our P95 run time was hovering around the 25-minute mark. For a team that deploys multiple times a day, these numbers really add up.

We spent a couple of days rewriting our pipeline, and we were ruthlessly efficient. The ROI was astounding — a two-day engineering effort resulted in approximately a 50% reduction in build times. Our P95 run time has been slashed to 12 minutes. Even better news? The tools we used are available to every CircleCI user, and I’m going to walk you through the process in the hopes that you can repeat our success.

Get to know your workflows

Before you write a single line of YAML, it’s important to know what you’re working with. In our case, the Insights UI is built, tested, and deployed in a single workflow, so that’s where our investigation began. To create a mental model of your workflows, answer these questions:

- What is each job responsible for? Look for jobs that are trying to multitask (for example, a single job that builds a Docker image, runs a security scan on the image, and uploads it to Docker Hub) and prioritize splitting those up first.

- What does each job need? If you’re uploading static assets somewhere, you’ll need to ensure that those are already built.

- When do jobs run? When should they run? A branch that deploys a canary might need a different set of jobs from a feature branch.

Being able to provide clear answers to these questions will help you apply some best practices to your workflow architecture, including writing atomic jobs and optimizing data flow with caching and workspaces.

Writing atomic jobs

A well-written job is a lot like a well-written unit test: it’s atomic. It does one thing and one thing only. One big reason for this is that atomic jobs provide shorter feedback loops. If you want to be able to fix failures quickly, you need to be able to find the source of failure as fast as possible. This becomes much harder when a job is multitasking, because you have to parse through output that might be spread out over different steps.

Atomic jobs are also easier to refactor in the future. Chances are, you will need to add more steps to the middle of your workflow at some point, and this will be much easier when all of your existing jobs are decoupled from each other.

Controlling data flow with workspaces and caching

Often, one job will depend upon the output of another. For example, you might want to build a Storybook and publish it somewhere, or build a Docker container that gets uploaded to a container registry. Data should always flow downstream. Your workflow should be built from left to right progressively; if there’s an asset that multiple jobs depend upon, that needs to be built as early as possible. The very first step in our workflow is to install node_modules, since we need Jest and Storybook packages to run our other jobs.
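As a rough sketch of that left-to-right shape, a workflow can put the dependency install in the first layer and have every job that needs node_modules require it. The dependencies job name matches the one our Cypress job requires later in this post; the workflow name and the lint, typecheck, and unit-tests job names are placeholders, and the job definitions are omitted. Each downstream job still has to fetch node_modules itself, through a cache or a workspace.

```yaml
workflows:
  build-test-deploy: # placeholder workflow name
    jobs:
      # Layer 1: install node_modules before anything that needs it
      - dependencies
      # Layer 2: each of these needs only node_modules, so they run side by side
      - lint:
          requires:
            - dependencies
      - typecheck:
          requires:
            - dependencies
      - unit-tests:
          requires:
            - dependencies
```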

For this to work properly, you’ll need to use caching or workspaces. These are tools that allow you to skip expensive processes unless they are absolutely necessary.

Caching

You should cache any data that is expensive to generate and might be reused between workflow runs. If you’re using a package manager such as yarn, make sure you cache those dependencies! It takes about 15 seconds for us to pull dependencies from a cache, versus around a minute for a clean install. We only have to reinstall when we add a brand-new dependency, which isn’t very often.
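Here is a minimal sketch of that pattern, assuming Yarn 1 and a lockfile named yarn.lock. A dependencies job restores node_modules from a cache keyed on the lockfile's checksum, runs the install, and saves the result; downstream jobs call the same restore command. The post doesn't show the real restore-yarn-cache definition, so its body, the key prefix, and the image tag below are assumptions for illustration.

```yaml
commands:
  # Hypothetical body for the restore-yarn-cache command used by jobs in this post
  restore-yarn-cache:
    steps:
      - restore_cache:
          keys:
            # Exact match only: the key changes whenever yarn.lock changes
            - yarn-deps-v1-{{ checksum "yarn.lock" }}

jobs:
  dependencies:
    docker:
      - image: cimg/node:18.17 # assumption: any Node image that ships Yarn works
    steps:
      - checkout
      - restore-yarn-cache
      # Fast when node_modules came back from the cache; a full install otherwise
      - run: yarn install --frozen-lockfile
      # Skipped automatically if a cache with this key already exists
      - save_cache:
          key: yarn-deps-v1-{{ checksum "yarn.lock" }}
          paths:
            - node_modules
```

Because the key only changes when yarn.lock does, a clean install only happens when a dependency is added or updated.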

Workspaces

If you have data that changes between every workflow run but still needs to be shared between jobs, you should be using workspaces. For example, your test job might generate a coverage report that another job uploads to a code analysis tool.
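For the coverage example, a sketch might look like the following. The job and workflow names, the image tag, and the reliance on Jest writing to its default coverage/ directory are assumptions; the last step is a placeholder for whatever upload command your code analysis tool provides.

```yaml
jobs:
  unit-tests:
    docker:
      - image: cimg/node:18.17 # assumption: any Node image that ships Yarn works
    steps:
      - checkout
      - run: yarn install --frozen-lockfile # or restore node_modules from your dependency cache
      # Jest writes coverage reports to ./coverage by default
      - run: yarn jest --coverage
      # Make ./coverage available to later jobs in this workflow run
      - persist_to_workspace:
          root: .
          paths:
            - coverage

  upload-coverage:
    docker:
      - image: cimg/node:18.17
    steps:
      # Restores ./coverage from the unit-tests job
      - attach_workspace:
          at: .
      # Placeholder: replace with your code analysis tool's upload command
      - run: ls coverage

workflows:
  test:
    jobs:
      - unit-tests
      - upload-coverage:
          requires:
            - unit-tests
```

Unlike a cache, the workspace only lives for this workflow run, which fits data that is different every time.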

For more information about persisting data, check out our docs.

Using Insights to identify optimization targets

I might be a little biased, but Insights is a really valuable tool. Any optimization effort should start here: you can identify trends, long-running jobs, and flaky tests. Grab your detective hat and take a look at your target workflows and jobs. While this post isn’t going to cover tests in detail, it might be worth your time to check out the Tests tab as well.

Here are some things to be on the lookout for:

- Slow jobs: I think this almost goes without saying, but if a single job is taking up a significant chunk of your workflow’s time, it warrants a closer look.

- Jobs with trends that are moving disproportionately: Using the runs trend as a baseline, take a look at the trends for duration and credit spend. Ideally, they should be moving at roughly the same pace — but in this screenshot, you can see that our runs have increased by 35%, while our P95 Duration has shot up over 500%. This is a huge red flag, and it indicates that your pipeline will likely be unable to scale.

- Flaky tests: Not only do flakes erode your team’s confidence in your product’s resilience, but they’re a huge waste of time. We’ve seen test suites that have to be rerun 3-4 times before they finally pass.

- Slow unit tests: If you don’t already use parallelization and test splitting, I would encourage you to check it out. The time it takes to run a test suite sequentially is equal to the sum of the time it takes to run each test. If you split the suite across enough parallel containers that every test gets its own, the run time is instead equal to the time it takes to run your slowest test. In that setup, focus on speeding up your slowest test: running it 30% faster can make the entire test suite up to 30% faster, until another test becomes the slowest.
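To put the timing claim in symbols: let t_i be the duration of test i, and B_k the set of tests assigned to parallel container k. This notation is ours, and it ignores container startup overhead.

```latex
T_{\text{seq}} = \sum_{i=1}^{n} t_i
\qquad
T_{\text{par}} = \max_{k} \sum_{i \in B_k} t_i \;\ge\; \max_{1 \le i \le n} t_i
\qquad
\text{with equality when } |B_k| \le 1 \text{ for every container } k
```

As a hypothetical example, take three tests of 60 s, 40 s, and 20 s. Run sequentially they take 120 s; on three containers they take 60 s. Cutting the 60 s test by 30% (to 42 s) brings the parallel run down to 42 s, a full 30% gain, but cutting it to 30 s only brings the run to 40 s, because the 40 s test is now the slowest.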

Mapping out the ideal pipeline end state

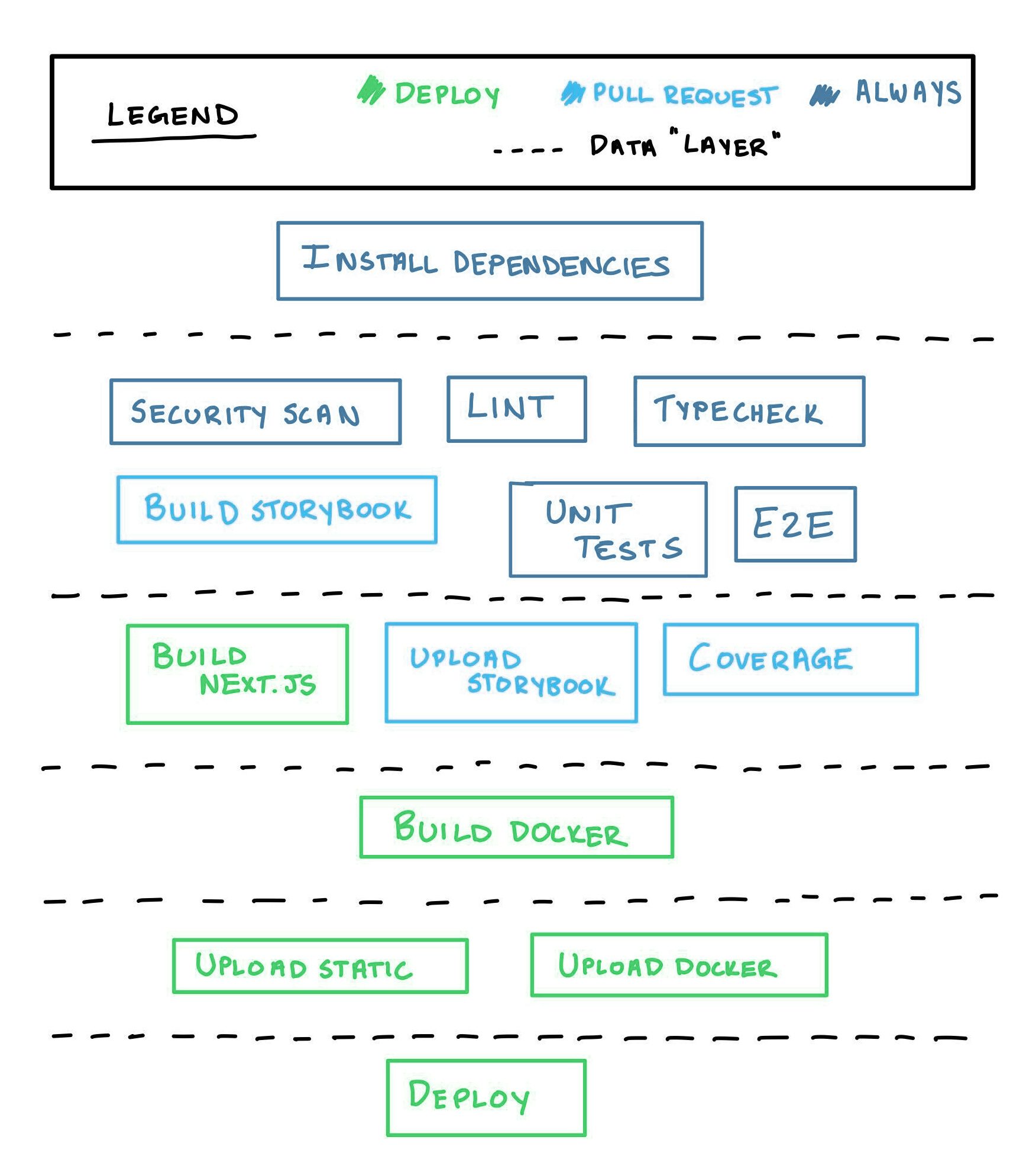

At this point, we had enough information to make the first meaningful change. The first thing we did here was to map out our desired end state — essentially a visualization of what our ideal pipeline run would look like.

I recommend drawing a diagram using pen and paper. Make a list of everything your workflow needs to do, and turn that into a list of atomic jobs. Which of those jobs will output data, and which will require data for input? You can use the data flow to group your jobs into “layers” — any given layer should contain only jobs that either depend on the previous layer or are required for the next layer (or both).

In our case, we had a lot of jobs that were trying to do too many things, and they needed to be broken up. We also realized that we weren’t using caching everywhere that could have benefited from it. We had to rearrange some of the jobs so that the data flow made sense.

This is the end result of that process for Insights:

Looking at this diagram, you may wonder why we didn’t choose to run the lint and typecheck jobs before the tests. After all, there’s no point in running the tests if the typecheck fails. While this is true, there’s also no harm in running the tests in this scenario. It’s also a matter of efficiency, which ties in to the concept of data layers: the tests don’t need anything that the typecheck might output, which is why they’re grouped in the same layer.

Let’s say that X is the number of seconds it takes to run the lint and typecheck jobs. If we were to push our tests further down and make them depend on those jobs, we would save X - Y seconds (where Y is the number of seconds it took to fail) in the event of a failure. The downside to this method is that on every successful run, we could have saved X seconds, but didn’t. The takeaway here is that if you value efficiency, it’s important to trust the data flow instead of trying to layer your jobs arbitrarily.
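To make the cost on successful runs concrete, here is a small worked comparison. T, the duration of the test job, is a symbol we’re introducing for illustration; X is the time of the lint and typecheck jobs as defined above, and W is the wall-clock time of the two layouts.

```latex
W_{\text{serial}} = X + T
\qquad
W_{\text{parallel}} = \max(X, T)

W_{\text{serial}} - W_{\text{parallel}} = X + T - \max(X, T) = \min(X, T)
```

So whenever the tests take at least as long as lint and typecheck (T ≥ X), making the tests wait costs exactly X extra seconds on every passing run. With hypothetical values X = 60 and T = 300, a passing run takes 360 s instead of 300 s.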

The “big rewrite”

First of all, please don’t actually rewrite your entire configuration in one go; that’s a recipe for disaster. It’s just that “The Big Rewrite” rolls off the tongue a bit better than “The Rewrite of Many Small, Validated Changes”.

The “rewrite of many small, validated changes”

We made a number of changes during this rewrite, and I won’t bore you with all of the finer details. However, I do want to share some of the most impactful ones, in the hope that they can inspire you to find ways to optimize your own builds.

Skipping unnecessary Chromatic builds

Time savings: ~2 minutes on default branch

We use a tool called Chromatic to make sure that all visual UI changes are reviewed before being deployed. This is a really important step in our pipeline, but it takes around 2–3 minutes to run, and it isn’t always necessary. We only wanted to run this step when both of these conditions were met:

- There were meaningful changes to the source code. There’s no need to run a regression test on the UI if your PR only updates a Dockerfile. To skip Chromatic in this scenario, we grep the git diff for changed TypeScript files (.ts or .tsx) under src/.

- The build is not on the default branch. We’ve configured GitHub in such a way that if a UI change makes it to the default branch, that means it has already been reviewed and approved. On the default branch, we still upload the files to preserve a clean Chromatic history, but approval is automatic.

```yaml
chromatic:
  executor: ci-node
  steps:
    - checkout
    - restore-yarn-cache
    - run:
        name: "Generate snapshots and upload to chromatic"
        command: |
          # Get the diff between this branch and the default branch, filtered down to src/
          export CHANGED=$(git diff --name-only origin/main... | grep -E "src\/.*\.(ts|tsx)$")

          main() {
            # Skip completely if not default branch AND no changes
            if [ -z "$CHANGED" ] && [ "$CIRCLE_BRANCH" != "main" ]; then
              echo "No components changed; skipping chromatic"
              yarn chromatic --skip
              exit 0
            fi

            # Auto accept on default branch, they have already been approved
            if [ "$CIRCLE_BRANCH" == "main" ]; then
              yarn chromatic --auto-accept-changes
              exit $?
            fi

            # Otherwise, run chromatic
            yarn chromatic --exit-once-uploaded
          }

          main "$@"
```

Refactoring build-and-deploy-static and caching Next.js

Time savings: ~5 minutes per build

We use Next.js, which means we have to build the static files and upload them to a CDN to use server-side rendering. The Next.js build is then used to build a Docker image, which is scanned for CVEs. If we are deploying, this image is also uploaded to a container registry.

We only need to upload these assets if we are deploying, but we wanted to perform the security scan on every PR, so that we could catch vulnerabilities as fast as possible. Before the refactor, all these things were coupled together, and we were doing a lot of unnecessary work.

- build-and-scan (every build): This built our assets from scratch and performed the security scan.

- build-and-deploy-static (deploys only): This job would build our assets from scratch (again) and upload them.

We were building twice and uploading assets that didn’t need to be uploaded. To make things worse, we realized we weren’t caching the Next.js build.

You may be wondering how we overlooked something like this for so long. The answer is a mixture of “collaboration” and “agency”. At CircleCI, our engineering teams have a lot of freedom, including the freedom to configure their own pipelines however they see fit.

Before this refactor, we were using an orb that was maintained internally, but lacked clear ownership. Over time, the number of engineers contributing to the orb’s source grew, but the lack of ownership meant that the orb’s responsibilities were unclear. It ended up organically growing into something that tried to cater to too many teams, most of which had very different needs.

That same freedom I mentioned earlier also meant that we had the power to replace the orb with our own custom configuration. We took those two jobs that had been provided by the orb and replaced them with smaller, atomic jobs:

- build-next-app (every build): We made sure to implement Next’s build caching feature here.

- build-and-scan-docker (every build): This job uses the Next build to create our Docker image and perform a security scan.

- deploy-static (deploys only): Uploads the output from both of the previous jobs.
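Here is a minimal sketch of how build-next-app’s caching and the deploy-only job could be wired. It isn’t our actual config: the image tag, cache key names, and the main-branch filter are assumptions, and the build-and-scan-docker and deploy-static job definitions are omitted. Next.js keeps its incremental build cache in .next/cache, so that is the directory worth carrying between runs.

```yaml
jobs:
  build-next-app:
    docker:
      - image: cimg/node:18.17 # assumption: any Node image that ships Yarn works
    steps:
      - checkout
      - run: yarn install --frozen-lockfile # or restore node_modules from your dependency cache
      # Prefix match: restores the most recent Next.js cache saved for this lockfile
      - restore_cache:
          keys:
            - next-cache-v1-{{ checksum "yarn.lock" }}-
      - run: yarn next build
      # .next/cache changes with the source, so each commit saves under its own key
      - save_cache:
          key: next-cache-v1-{{ checksum "yarn.lock" }}-{{ .Revision }}
          paths:
            - .next/cache
      # Hand the build output to build-and-scan-docker and deploy-static
      - persist_to_workspace:
          root: .
          paths:
            - .next

workflows:
  build-and-deploy:
    jobs:
      - build-next-app
      - build-and-scan-docker:
          requires:
            - build-next-app
      - deploy-static:
          requires:
            - build-next-app
            - build-and-scan-docker
          # Assumption: deploys only happen from the default branch
          filters:
            branches:
              only: main
```

Keying each save on the commit while restoring by prefix means every build starts from the newest cache rather than a stale one.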

If you’d like to learn more about caching, check out our documentation.

Parallelization of Cypress tests

Time savings: ~10 minutes per build

We use Cypress to run our frontend end-to-end tests. During the refactor, we realized two things: First of all, we weren’t caching the Cypress binary, which meant we had to reinstall it every time. Second, we weren’t running those tests in parallel!

We started using the Cypress orb to run our tests. Previously, they were running in a Docker container. Now, we cache the Cypress binary and use a parallelism value equal to the number of Cypress test files, so each parallel container runs a single spec file.

```yaml
- cypress/run:
    name: cypress
    executor: cypress
    parallel: true
    parallelism: <<pipeline.parameters.cypress_parallelism>>
    requires:
      - dependencies
    pre-steps:
      - checkout
      - restore-yarn-cache
      - restore-cypress-cache
      - run:
          name: Split tests
          # Write this container's share of the spec files to /tmp/tests.txt, one per line
          command: 'circleci tests glob "cypress/e2e/*" | circleci tests split > /tmp/tests.txt'
      - run:
          name: Install cypress binary
          command: yarn cypress install
    post-steps:
      - save_cache:
          paths:
            - ~/.cache/Cypress/
          key: cypress-{{arch}}-{{checksum "yarn.lock"}}
      - store_test_results:
          path: 'test-results/cypress.xml' # cypress.json
      - store_artifacts:
          path: cypress/artifacts # this is in cypress.json
    no-workspace: true
    yarn: true
    start: yarn start
    wait-on: '-c .circleci/wait-on-config.json http://localhost:3000/insights/healthcheck'
    # --spec expects a comma-separated list, so join the lines in case a container gets more than one file
    command: yarn cypress run --spec "$(paste -sd, /tmp/tests.txt)" --config-file cypress.json
```

Find more information about parallelization in our documentation.
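The Cypress config references two things it doesn’t define: the cypress_parallelism pipeline parameter and the restore-cypress-cache command. Here is one way they could be declared, as a sketch: the default value is hypothetical, and the restore key simply mirrors the save_cache key in post-steps so the cached binary is found.

```yaml
parameters:
  cypress_parallelism:
    type: integer
    # Hypothetical value: keep this equal to the number of spec files in cypress/e2e
    default: 8

commands:
  restore-cypress-cache:
    steps:
      - restore_cache:
          keys:
            # Must match the key used by save_cache in the cypress job's post-steps
            - cypress-{{arch}}-{{checksum "yarn.lock"}}
```

When the cache hits, the yarn cypress install step finds the binary already in ~/.cache/Cypress and has little left to do.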

Validating your new pipeline

Once you’re done with the process of rewriting your pipeline, you can use Insights to validate that the changes you’ve made have had a positive impact. If you were to visit Insights for the workflow that was rewritten, you’d see our P95 duration had trended significantly downward, and our success rate had improved as well.

It’s also possible to compare your project against other, similar projects within your organization. From the main Insights page, you can use the Project dropdown to select projects for comparison. The biggest piece of validation for this work came when we realized that the Insights UI project had more than double the runs of similar projects, while its total duration was less than half of theirs.

Conclusion

Hopefully, I’ve managed to convince you that it’s well worth your time to invest in your team’s pipeline configuration. It may seem daunting, but it’s very doable if you approach it in small chunks, using “many small, validated changes.”

Remember: use Insights to help you identify areas for improvement. From there, get to know your workflows, keep your jobs atomic, and control the data flow. If you keep these things in mind, you’ll find it’s very easy to make small, meaningful changes. At the end of the day, that’s what building software is all about.

PS: The Insights team’s goal is to empower your team to make better engineering decisions. If there’s something you’d like to see on Insights, we’d love for you to post it on our Canny board.