One difficult challenge in the software development cycle is increasing the speed of development while ensuring the quality of the code remains the same. The data world has adopted software development practices in recent years to test data changes before deployment. The testing process can be time-consuming and prone to unexpected errors.

For example, at CircleCI, our data team uses dbt at scale. Until recently we had been experiencing deployment bottlenecks caused by long test runs in dbt Cloud. To solve this, we set up CircleCI to automatically test and deploy our data changes so that we can deliver quality data model releases as fast as possible to our data consumers. In this post, we will walk you through how to use CircleCI and dbt to automatically test your data changes against a replica of production to ensure data integrity and improve your development velocity.

This tutorial assumes you are an active CircleCI user. If you are new to the platform, you can sign up for a free account and follow our quickstart guide to get set up.

What is dbt?

dbt is a data transformation tool that allows data folks to combine modular SQL with software engineering best practices to make data transformations that are reliable, iterative, and fast. You can interact with dbt through either dbt CLI (command line interface) or dbt Cloud. In the CLI version, you have full control of your data project configuration and the ability to publish documentation as needed, while dbt Cloud provides a user interface that sets up a few configurations for you and generates dbt documentation automatically.

Why is dbt useful in data engineering and analysis?

dbt is a powerful data tool that allows you to iterate through your table changes without manually modifying UPSERT statements. You can write your SQL in a modular way and configure data tests using parameterized queries or the native testing functions that dbt provides. It helps track your data dependencies and centralize your data transformations and documentation, ensuring a single source of truth for important business metrics. Additionally, it allows you to test your assumptions about the data to ensure data integrity before the data is published in production.

The problem of concurrency in dbt Cloud

You can use dbt Cloud to set up a continuous integration and continuous delivery (CI/CD) pipeline for your data testing by using the dbt slim CI function. You can have it connected to your GitLab or GitHub repository, and configure testing jobs to be triggered on each new pull request. The testing job status will be directly available in the pull request to help make your review process more efficient.

However, there are a few problems with the current dbt Cloud CI/CD process. First, the dbt Cloud CI/CD process currently allows only one job at a time, which can slow deployment speed if there are multiple pull requests merged into production. It would work for a small data team that only has one or two active dbt contributors, but it shows its limits when our analytics department starts scaling and analysts start contributing and deploying in dbt more frequently. For a large size data deployment, it can slow down all the other deployments for a full day.

Additionally, dbt Cloud testing can’t auto-cancel redundant workflows when there are multiple commits in the pull requests, which can influence the testing speed for analytics development.

How to use CircleCI to run dbt tests in parallel and to enable auto-canceling

Running parallel dbt tests against production data and auto-canceling redundant workflows are made feasible by using CircleCI, dbt, and Snowflake. At a high level, the steps are:

- Create a dbt profile for the dbt CI job to validate your data models and tests.

- Configure dbt to set up custom schemas to allow pull requests to run data models and data tests in their individual containers.

- Set up a Python environment in CircleCI to prepare for dbt testing.

- Set up dbt run and test in CircleCI to test modified data files.

Create a dbt profile

First, configure a dbt-ci profile in profiles.yml:

circleci:

# use user and password auth

type: snowflake

account: "nxa13674.us-east-1"

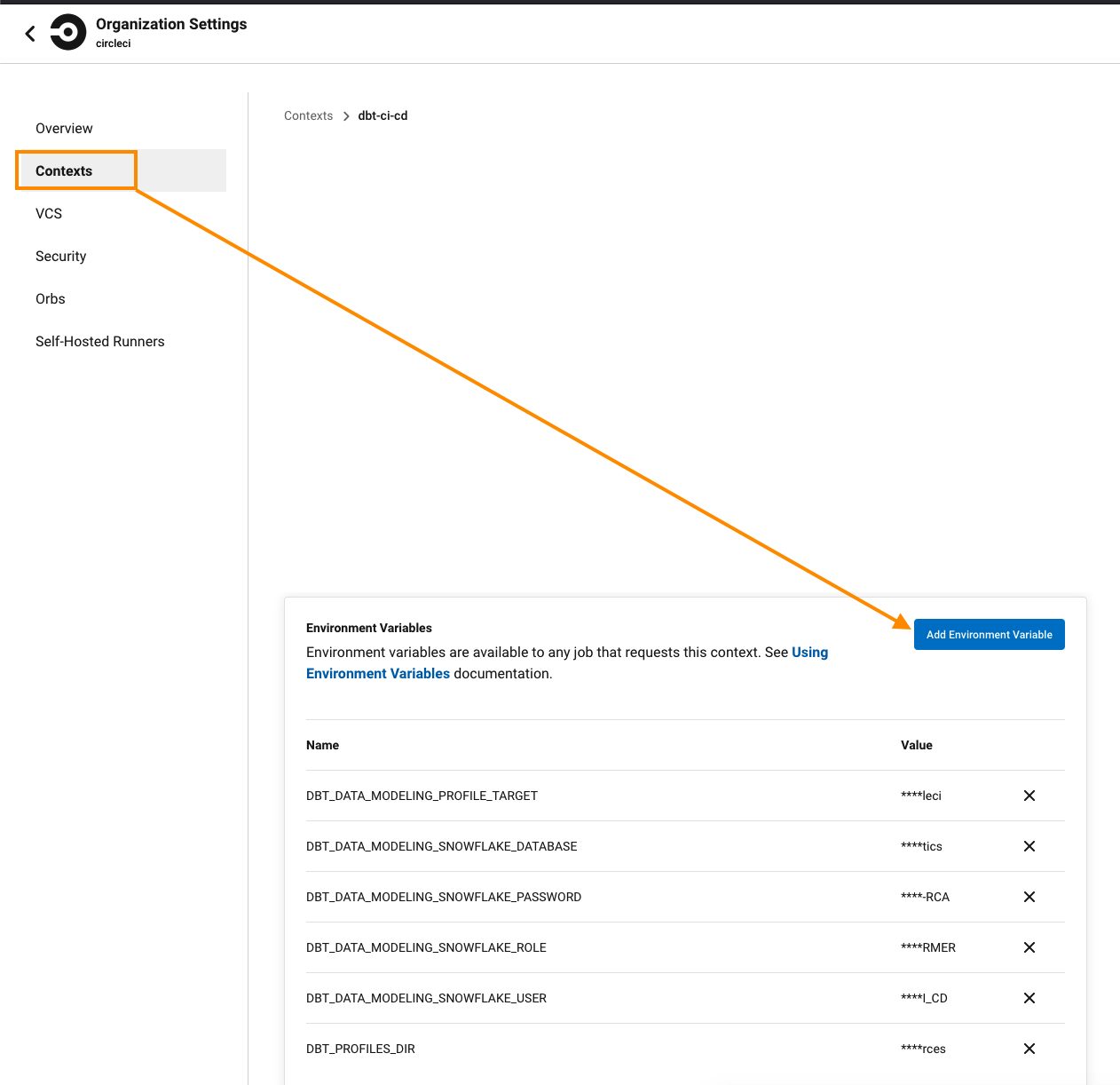

user: "{{ env_var('DBT_DATA_MODELING_SNOWFLAKE_USER', 'not_set') }}"

password: "{{ env_var('DBT_DATA_MODELING_SNOWFLAKE_PASSWORD', 'not_set') }}"

role: "{{ env_var('DBT_DATA_MODELING_SNOWFLAKE_ROLE', 'not_set') }}"

database: "{{ env_var('DBT_DATA_MODELING_SNOWFLAKE_DATABASE', 'not_set') }}"

warehouse: "XSMALL_WAREHOUSE"

schema: "public"

query_tag: "circleci_dbt"

After that, go to the CircleCI Organization Settings page to set up a CircleCI context to securely share the environment variables in your project. The context is named dbt-ci-cd so you can reference it in the CircleCI YAML file.

Set up custom schemas in dbt

Next, set up a dbt custom schema macro to allow pull requests to run data models and data tests in a containerized environment:

- Create

macros/get_custom_schema.sqlin the root directory of your dbt project to customize the schema configuration. - In the

get_custom_schema.sqlfile copy and paste the code below:

{% macro generate_schema_name(custom_schema_name, node) -%}

{%- set default_schema = target.schema -%}

{%- if target.name == "circleci" -%}

{# replace everything except letter and number in branch name to be underscore#}

{% set re = modules.re %}

{% set git_branch = modules.re.sub('[^a-zA-Z0-9]', '_', env_var('CIRCLE_BRANCH')).lower() %}

{%- if custom_schema_name is none -%}

z_pr_{{ git_branch }}_{{ default_schema }}

{%- else -%}

z_pr_{{ git_branch }}_{{default_schema}}_{{ custom_schema_name | trim }}

{%- endif -%}

{%- else -%}

{%- if custom_schema_name is none -%}

{{ default_schema }}

{%- else -%}

{{ custom_schema_name | trim }}

{%- endif -%}

{%- endmacro -%}

It will build dbt data models in the CircleCI CI process with a standard schema format as z_pr_<the branch name>_<default schema>, to containerize the data models generated from the CI process.

Configure your CircleCI pipeline for testing dbt data

Finally, configure CircleCI for dbt.

CircleCI’s workflow is defined in a YAML file under .circleci/config.yml in the root directory of your dbt project. By default, CircleCI automatically triggers the testing process each time a commit is pushed to your repository, or it can be triggered manually. Here are the steps to set up the process:

- Create

.circleci/config.ymlin the root directory of your dbt project - Copy and paste the YAML below to set up your dbt CI process. We use Poetry to manage our Python dependencies at CircleCI, but you can use whatever Python dependency management tool you prefer. The dbt CI process uses dbt’s state:modified run method to run and test only modified dbt data models using the production environment.

commands:

setup-python-dependencies:

description: Setup the python environment for testing and linting

steps:

- checkout:

path: ~/project

- restore_cache:

keys:

- v1-poetry-cache-{{ arch }}-{{ .Branch }}-{{ checksum "poetry.lock" }}

- v1-poetry-cache-{{ arch }}-{{ .Branch }}

- v1-poetry-cache

- run: echo "export PATH=$HOME/.poetry/bin:$PATH" >> $BASH_ENV

- run: curl -sSL https://raw.githubusercontent.com/python-poetry/poetry/master/get-poetry.py | python -

- run: poetry install

- save_cache:

key: v1-poetry-cache-{{ arch }}-{{ .Branch }}-{{ checksum "poetry.lock" }}

paths: /virtualenvs

jobs:

dbt-build-ci:

executor: python

steps:

- setup-python-dependencies

- run: poetry run dbt deps --project-dir resources

- run:

name: compile dbt manifest in master branch

# use --target prod so the --defer will work correctly

command: |

git checkout master

poetry run dbt debug --project-dir resources --target prod

poetry run dbt compile --project-dir resources --target prod

mv resources/target/manifest.json .

- run: git checkout ${CIRCLE_BRANCH}

# separate run and test because "dbt build" will fail all downstream if upstream tests fail. We still want to see all tests results if the test failure is from production

# currently there is a version bug with state:modified.body, we will add state:modified.body back once we update dbt to be 1.0.4;

- run: poetry run dbt run --models state:modified --defer --state ~/project --exclude tag:skip-ci --project-dir resources -x

- run: poetry run dbt test --models state:modified --defer --state ~/project --exclude tag:skip-ci --project-dir resources

workflows:

commit:

- dbt-build-ci:

context: dbt-ci-cd

filters:

branches:

ignore:

- master

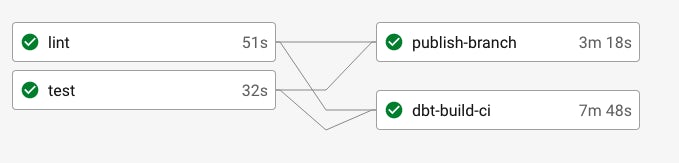

Run parallel dbt tests in your CI/CD pipeline

Once you push your change to your version control tooling, CircleCI will automatically kick off the testing process for you. Individual tasks can run in parallel.

Now you have a successful build!

Conclusion

You have successfully set up a data testing workflow in a continuous integration pipeline! As your data team starts scaling, testing your data changes before release to production is an important step. It helps data teams iterate faster and ensures stakeholder trust in the data. Running data model tests in a continuous integration pipeline can help your data team scale its engineering and analysis processes.

If you are interested in learning more about CircleCI and joining our data team to learn more about how we apply the software development process toward data, please check out the open roles on our hiring page.