CircleCI Server v4.x の概要

はじめに

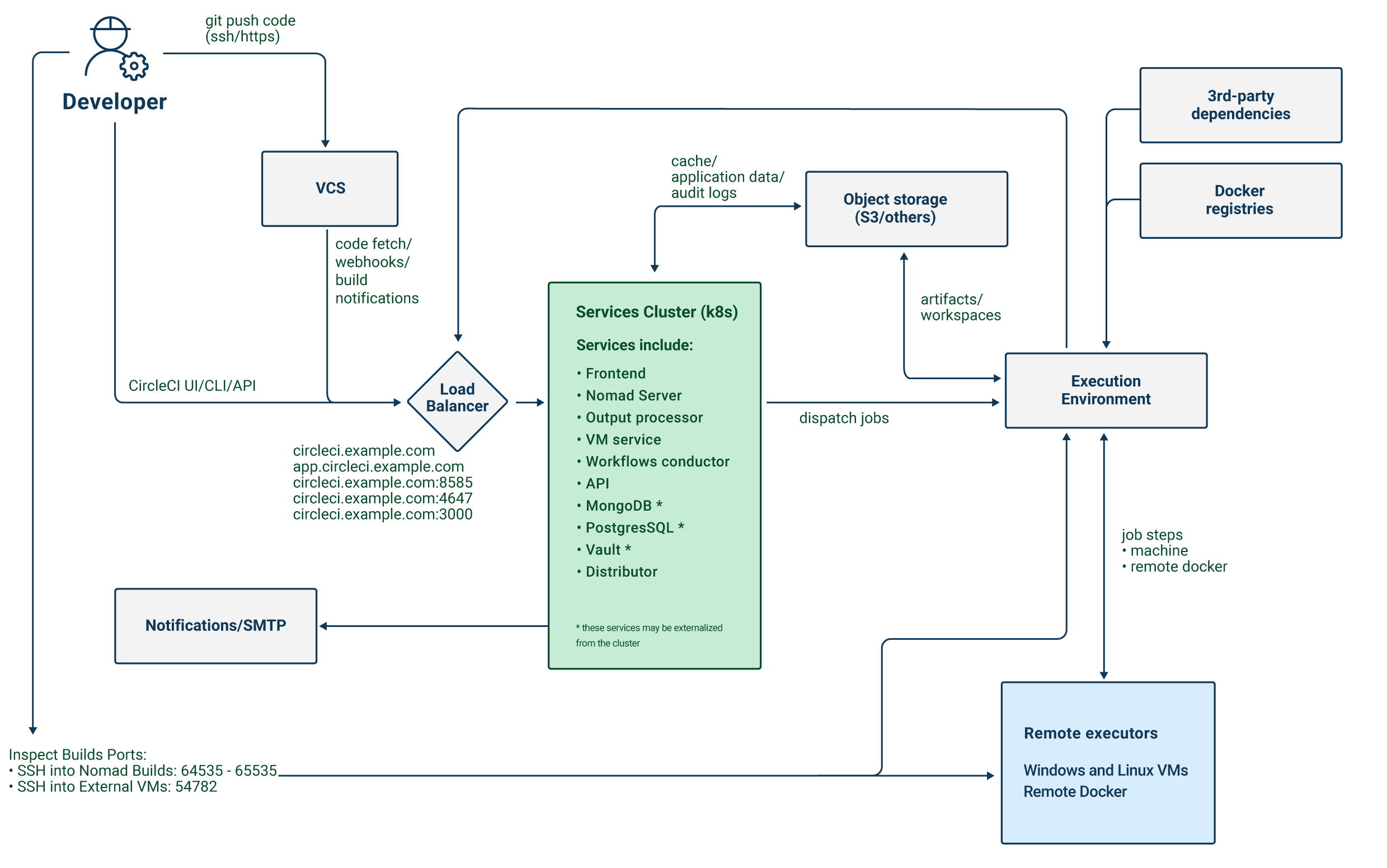

CircleCI Server は、コンプライアンスやセキュリティ上のニーズからファイアウォール内やプライベートクラウド、データセンターでの運用が求められる企業向けのオンプレミス型 CI/CD プラットフォームです。

CircleCI Server は、CircleCI のクラウドサービスと同じ機能を提供しますが、お客様の Kubernetes クラスタ内で動作します。

CircleCI Server アプリケーションは、1 つのロードバランサーを使って 4 つのサービスを公開しています。 必要に応じて、ロードバランサーを非公開にし、パブリックインターネットからのアクセスを遮断することも可能です。

| サービス | ポート | 説明 |

|---|---|---|

フロントエンド GUI プロキシおよび API | 80、443 | Web アプリケーションを公開します |

Nomad コントロールプレーン | 4647 | Nomad クライアントの RPC プロトコルを公開します |

出力プロセッサ | 8585 | Nomad ランナーの出力を取り込む |

VM サービス | 3000 | 仮想マシンをプロビジョニングします |

このアプリケーションでは、いくつかの外部ポートが公開されています。 これらのポートは、以下の表に記載されている様々な機能に使用されます。

| ポート番号 | プロトコル | 方向 | 送信元/送信先 | 用途 | 備考 |

|---|---|---|---|---|---|

80 | TCP | インバウンド | エンドユーザー | HTTP Web アプリケーショントラフィック | |

443 | TCP | インバウンド | エンドユーザー | HTTP Web アプリケーショントラフィック | |

22 | TCP | インバウンド | 管理者 | SSH | 踏み台ホストでのみ必要 |

64535-65535 | TCP | インバウンド | ビルドへの SSH 接続 | Nomad クライアントでのみ必要 |

CircleCI Server では、 Nomad スケジューラを使用して CI ジョブのスケジュールを設定します。 Nomad コントロールプレーンは Kubernetes 内で動作します。一方、スケジュールされた CircleCI ジョブの実行を担当する Nomad クライアントは、クラスタ外部にプロビジョニングされます。 CircleCI Server では、Nomad クライアント自体または専用の仮想マシン (VM) で Docker ジョブを実行できます。

ジョブのアーティファクトと出力は、Nomad ジョブからオブジェクトストレージ (S3、GCS、またはその他のサポートされているオプション) に直接送信されます。 オブジェクトストレージには、監査ログやアプリケーションのその他のアイテムも保存されます。そのため、Kubernetes クラスタと Nomad クライアントの両方がオブジェクトストレージにアクセスできる必要があります。

サービス

CircleCI Server v4.x は、以下のサービスで構成されています。 それぞれの説明と、各サービスで障害が発生した場合の影響を以下に示します。

| サービス | コンポーネント | 説明 | 障害発生時の影響 | 備考 |

|---|---|---|---|---|

api-service | アプリコア | GraphQL API を提供します。 この API は、Web フロントエンドのレンダリング データを提供します。 | 多くの UI 要素 (コンテキストなど) が完全に機能しなくなります。 | |

audit-log-service | アプリコア | 監査ログイベントを blob ストレージに長期保存します。 | 一部のイベントが記録されなくなります。 | |

branch-service | アプリコア | イベントストリームをリッスンするためのサービスです。 ブランチの削除、ジョブの更新、プッシュ、ワークフローの更新を検出します。 | ||

builds-service | アプリコア | www-api から取り込みを行い、plans-service、workflows-conductor、orbs-service に送信します。 | ||

circleci-mongodb | 実行 | プライマリ データストア | ||

circleci-postgres | マイクロサービス用データ ストレージ. | |||

circleci-rabbitmq | パイプラインと実行 | ワークフロー メッセージ、テスト結果、使用状況、cron、出力、通知、スケジューラーのキュー. | ||

circleci-redis | 実行 | リクエストのキャッシュおよびレート制限の計算のために、一時的なデータ (ビルドログなど) をキャッシュします。 | キャッシュを適切に行えない場合、VCS の呼び出しが多くなり VCS からレート制限を適用されることがあります。 | |

circleci-telegraf | Telegraf は StatsD メトリクスを収集します。 CircleCI サービスのホワイトボックスメトリクスはすべて、StatsD メトリクスを発行します。これらは Telegraf に送信されますが、他の場所 (Datadog や Prometheus など) にエクスポートするように設定することもできます。 | |||

circleci-vault | シークレット用にサービスとしての暗号化と復号化を実行する HashiCorp Vault | |||

contexts-service | アプリコア | 暗号化されたコンテキストを保存、提供します。 | コンテキストを使用するすべてのビルドが失敗するようになります。 | |

cron-service | パイプライン | スケジュールされたワークフローをトリガーします。 | スケジュールされたワークフローが実行されなくなります。 | |

dispatcher | 実行 | ジョブをタスクに分割し、実行用にスケジューラーに送信します。 | Nomad にジョブが送信されなくなります。 run キューのサイズは増加しますが、著しいデータ損失が起こることはありません。 | |

distributor-* | アプリコア | ビルドリクセストの受け入れや適切なキューへのジョブの配布を行います。 | ||

domain-service | アプリコア | CircleCI ドメイン モデルに関する情報を保存、提供します。 アクセス許可および API と連携しています。 | ワークフローを開始できなくなります。 一部の REST API 呼び出しが失敗し、CircleCI UI で 500 エラーが発生する可能性があります。 LDAP 認証を使用している場合、すべてのログインが失敗するようになります。 | |

frontend | フロントエンド | CircleCI Web アプリと www-api プロキシ です。 | UI と REST API が利用できなくなります。GitHub/GitHub Enterprise からジョブがトリガーされなくなります。 ビルドの実行はできますが、情報は更新されません。 | 1 秒あたりのリクエスト レート上限は 150、ユーザー 1 人あたりの瞬間リクエスト レート上限は 300 です。 |

insights-service | メトリクス | エクスポートおよび分析のためのビルドおよび使用状況のメトリクスを集約するサービスです。 | ||

Kong | アプリコア | API の管理 | ||

legacy-notifier | アプリコア | 外部サービス (Slack、メールなど) への通知を処理します。 | ||

NGINX | アプリコア/ フロントエンド | トラフィックのリダレクトと受信を処理します。 | ||

nomad-autoscaler | Nomad | AWS および GCP 環境での Nomad クラスタのスケーリングを管理します。 | ||

nomad-server | Nomad | Nomad クライアントの管理を行います。 | ||

orb-service | パイプライン | Orb レジストリと設定ファイルの間の通信を処理します。 | ||

output-processor | 実行 | ジョブの出力とステータスの更新を受け取り、MongoDB に書き込みます。 また、キャッシュとワークスペースにアクセスし、キャッシュ、ワークスペース、アーティファクト、テスト結果を保存するための API を実行中のジョブに提供します。 | ||

permissions-service | アプリコア | CircleCI のアクセス権インターフェイスを提供します。 | ワークフローを開始できなくなります。 一部の REST API 呼び出しが失敗し、CircleCI UI で 500 エラーが発生する可能性があります。 | |

scheduler | 実行 | 受信したタスクを実行します。 Nomad サーバーと連携しています。 | Nomad にジョブが送信されなくなります。 run キューのサイズは増加しますが、著しいデータ損失が起こることはありません。 | |

socketi | フロントエンド | Websockets サーバー | ||

telegraf | メトリクス | メトリクスの集まりです。 | ||

test-results-service | 実行 | テスト結果ファイルを解析してデータを保存します。 | ジョブのテストの失敗や時間に関するデータが生成されなくなります。 サービスが再起動するとバックフィルが行われます。 | |

vm-gc | コンピューティング管理 | 古いマシンやリモート Docker インスタンスを定期的に確認し、vm-service にそれらの削除をリクエストします。 | このサービスを再起動するまで、古い vm-service インスタンスが破棄されなくなる可能性があります。 | |

vm-scaler | マシン | マシンとリモート Docker ジョブの実行用にプロビジョニングするインスタンス数を増やすように、vm-service に定期的にリクエストします。 | マシンとリモート Docker 用の VM インスタンスがプロビジョニングされなくなり、容量不足でジョブとそれらの Executor を実行できなくなる可能性があります。 | EKS と GKE ではオーバーレイが異なります。 |

vm-service | マシン | 利用可能な vm-service インスタンスのインベントリ管理と、新しいインスタンスのプロビジョニングを行います。 | マシンまたはリモート Docker を使用するジョブが失敗するようになります。 | |

web-ui-* | フロントエンド | フロントエンド Web アプリケーションの GUI のレンダリングに使用するマイクロ フロントエンド (MFE) サービスです。 | 各サービス ページを読み込むことができなくなります。 たとえば、web-ui-server-admin で障害が発生した場合、CircleCI Server の管理者ページを読み込めなくなります。 | MFE は、app.<my domain here> での Web アプリケーションのレンダリングに使用されます。 |

webhook-service | アプリコア | ステータスの管理やイベントの処理など、すべての Webhook に対応するサービスです。 | ||

workflows-conductor-event-consumer | パイプライン | パイプラインを実行するために VCS から情報を取得します。 | VCS に変更があっても、新しいパイプラインが実行されなくなります。 | |

workflows-conductor-grpc | パイプライン | gRPC 経由での情報の変換を支援します。 |

プラットフォーム

CircleCI Server は、Kubernetes クラスタ内でのデプロイを想定しています。 仮想マシンサービス(VMサービス)により、独自の EKS や GKE を活用して VM イメージを動的に作成することができます。

EKS または GKE 以外でインストールする場合は、一部のマシンビルドと同じ機能を利用するために追加作業が必要です。 CircleCI ランナーを設定することで、VM サービスと同じ機能を、より幅広い OS およびマシンタイプ (MacOS など) で利用できるようになります。

CircleCI では、インストールするプラットフォームを幅広くサポートできるよう最善を尽くしています。 可能な限り環境に依存しないソリューションを使用しています。 ただし、すべてのプラットフォームやオプションをテストしているわけではありません。 そのため、テスト済み環境のリストを提供しており、継続的に拡大していく予定です。

| 環境 | ステータス | 備考 |

|---|---|---|

EKS | テスト済み | |

GKE | テスト済み | |

Azure | テスト未実施 | Minio の Azure ゲートウェイとランナーで動作する必要があります。 |

Digital Ocean | テスト未実施 | Minio Digital Ocean ゲートウェイとランナーで動作する必要があります。 |

OpenShift | テスト未実施 | 動作しないことが分かっています。 |

Rancher | テスト未実施 | Minio とランナーで動作する必要があります。 |