Debugging CI/CD pipelines with SSH access

Developer Advocate, CircleCI

At industry events like AWS re:Invent and KubeCon, I talk with a lot of developers. They often tell me about things that keep them from working quickly and efficiently, and many of these stories involve frustrating interactions with sysadmins, SREs, or DevOps colleagues. One story I have heard several times involves a conversation like this:

dev: Hey, SRE team. My build is failing and I don’t know what’s happening with the app in the build node. It’s failing on the CI/CD platform, but the build scripts are working fine in my developer environment. Can I have SSH access to the build node on the platform so that I can debug in real time?

SRE: How about in one hour?

dev: One hour? I need to finish this so I can start hacking on new features for the next release. Can you grant me access to the build node so that I can debug on the actual resource where my build fails?

SRE: Our CI/CD platform doesn’t have SSH access, and giving you my admin credentials is a security violation.

Sometimes the conversation continues:

dev: What about the SSH plugin we installed? That let us send console commands to the build node so we could capture the responses in system logs.

SRE: Security flagged it as vulnerable under CVE-2017-2648, which enables man-in-the-middle attacks, and banned it from our CI/CD platform. We don’t have SSH access to the nodes to help you debug in real time. Sorry.

It ends with the developer thinking:

dev: It’s going to take me forever to debug this build if I only use the unit test and stacktrace logs. I might as well be guessing.

Although this dialogue is a generalization, it is based on genuine interactions and situations that I’ve experienced in my career and heard about at events. It is a very common scenario, and most teams experience something like it.

When I hear this story from CircleCI users, I have a great solution for them: CircleCI’s debugging with SSH feature, which lets developers troubleshoot and debug builds on the resources where they failed. Let me show you how it works.

Accessing pipeline jobs

Debugging code on resources and in environments outside a developer’s normal development environment is challenging and consumes valuable time. Without features that let developers access and troubleshoot failed builds, the CI/CD platform itself becomes a huge blocker for both developers and the SRE team. Without SSH access to the build nodes, developers have to debug failed builds outside the CI/CD environment where the build actually fails. They must try to replicate the CI/CD environment in their development environment to identify the issue, then attempt to resolve it using only application, stack trace, and system logs. This wastes time for everyone involved.

SSH debugging allows developers to easily and securely debug their failed builds on the resources where the failures are happening, in real time. With CircleCI, SSH debugging also provides self-serve mechanisms for developers to securely access the execution environments without having to depend on other teams. This is a huge time saver for developers and ops teams alike.

Troubleshooting a failed pipeline job

Accessing build jobs with SSH is easy when you are using CircleCI. I will run through a common build failure scenario to show how to troubleshoot a failed build job. Here is a sample config.yml for us to use in this scenario:

```yaml
version: 2.1

workflows:
  build_test_deploy:
    jobs:
      - build_test
      - deploy:
          requires:
            - build_test

jobs:
  build_test:
    docker:
      - image: cimg/python:3.10.0
    steps:
      - checkout
      - run:
          name: Install Python Dependencies
          command: |
            pip install --user --no-cache-dir -r requirements.txt
      - run:
          name: Run Tests
          command: |
            python test_hello_world.py
  deploy:
    docker:
      - image: cimg/python:3.10.0
    steps:
      - checkout
      - setup_remote_docker:
          docker_layer_caching: false
      - run:
          name: Build and push Docker image
          command: |
            pip install --user --no-cache-dir -r requirements.txt
            ~/.local/bin/pyinstaller -F hello_world.py
            echo 'export TAG=0.1.${CIRCLE_BUILD_NUM}' >> $BASH_ENV
            echo 'export IMAGE_NAME=python-cicd-workshop' >> $BASH_ENV
            source $BASH_ENV
            docker build -t $DOCKER_LOGIN/$IMAGE_NAME -t $DOCKER_LOGIN/$IMAGE_NAME:$TAG .
            echo $DOCKER_PWD | docker login -u $DOCKER_LOGIN --password-stdin
            docker push $DOCKER_LOGIN/$IMAGE_NAME
```

The sample config.yml defines a two-job workflow. The build_test job installs the dependencies and runs the tests. The deploy job, which runs only after build_test succeeds, packages the app with PyInstaller, builds a Docker image of it, and publishes that image to Docker Hub. Publishing requires Docker Hub credentials. Because these credentials are highly sensitive, they are stored in the $DOCKER_LOGIN and $DOCKER_PWD environment variables, which are set at the project level rather than in config.yml. If $DOCKER_LOGIN and $DOCKER_PWD do not exist at the project level, the build fails at the Build and push Docker image step.
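One way to make this failure easier to diagnose is to check for the credentials before docker build runs. The following is a minimal sketch, not part of the original config: require_env is a hypothetical helper name, and it assumes the step runs under bash (the cimg/python image provides it), because it relies on bash indirect expansion.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of the original config.yml): report and fail
# when required environment variables are unset or empty, so the job stops
# with a clear message instead of docker's "invalid reference format" error.
require_env() {
  local name
  local missing=()
  for name in "$@"; do
    # ${!name:-} is bash indirect expansion: the value of the variable whose
    # name is stored in $name, or "" when that variable is unset.
    if [ -z "${!name:-}" ]; then
      missing+=("$name")
    fi
  done
  if [ "${#missing[@]}" -gt 0 ]; then
    echo "Missing required environment variables: ${missing[*]}" >&2
    return 1
  fi
  return 0
}

# Possible use as the first line of the "Build and push Docker image" command:
#   require_env DOCKER_LOGIN DOCKER_PWD || exit 1
```

With a guard like this, the job log would name the missing variables directly, instead of surfacing them indirectly through an invalid image reference.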

Identifying our failed job

The failed job logs show that the failure occurs at the start of the Docker build process, as shown in this log entry.

```
invalid argument "/python-cicd-workshop" for "-t, --tag" flag: invalid reference format
See 'docker build --help'.
Exited with code 125
```

Now that we know where the pipeline is failing, we can rerun the failed job with SSH access. When you rerun a job with SSH, it runs again and fails just as it did before. This time, however, the execution environment stays up, and the job output includes the details you need to connect to it.

Rerunning the job with SSH

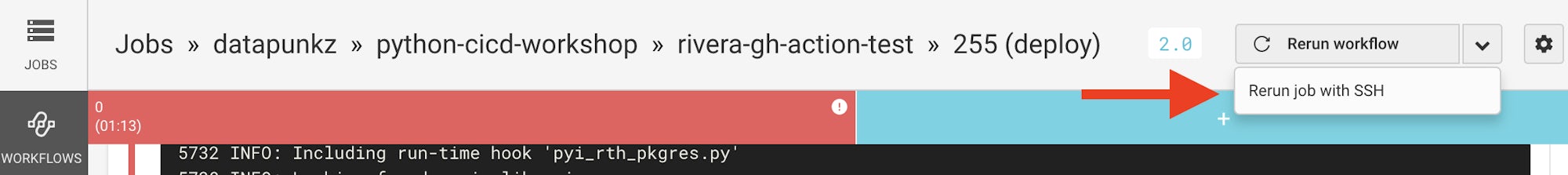

To gain SSH access to the failed build, rerun the failed job from within the CircleCI dashboard.

- Log in to the CircleCI dashboard

- Click the FAILED job

- Click the dropdown button in the top right of the dashboard, then select Rerun job with SSH

The SSH access details are provided at the end of the job output. This image shows an example of the access details the developer uses to connect to the resource with SSH.

Accessing the execution environment

Now that we have SSH access to the execution environment, we can troubleshoot the build. As mentioned before, the log entries indicate that the issue is in the Docker build portion of the deploy steps. The invalid argument "/python-cicd-workshop" part of the error message shows that the Docker username is missing from the image name, which should have the form username/python-cicd-workshop.

The Docker image name is defined in the docker build line of the Build and push Docker image step, and it is composed of environment variables: $DOCKER_LOGIN and $IMAGE_NAME, plus $TAG for the versioned tag.
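The leading slash in the error can be reproduced in any bash shell. This sketch copies IMAGE_NAME from config.yml and gives DOCKER_LOGIN an empty value, which expands the same way an unset variable does in a shell without set -u. The build number in TAG (42) is made up for illustration.

```shell
#!/usr/bin/env bash
# Reproduce how the deploy step assembles its image references when
# DOCKER_LOGIN has no value. IMAGE_NAME is copied from config.yml; the
# build number 42 in TAG is made up for illustration.
IMAGE_NAME=python-cicd-workshop
TAG=0.1.42
DOCKER_LOGIN=""   # expands like an unset variable (no `set -u` in effect)

latest_ref="$DOCKER_LOGIN/$IMAGE_NAME"
versioned_ref="$DOCKER_LOGIN/$IMAGE_NAME:$TAG"

echo "$latest_ref"      # prints /python-cicd-workshop
echo "$versioned_ref"   # prints /python-cicd-workshop:0.1.42
```

An image reference cannot begin with a slash, so docker build rejects "/python-cicd-workshop" with the invalid reference format error seen in the job log.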

We know that the Docker image build fails because of an invalid image name, and that the name is composed of environment variables. This suggests that one of those variables is incorrect or missing. To test this assumption, open a terminal, SSH into the execution environment using the access details from the job output, and run printenv | grep DOCKER_LOGIN.

The printenv | grep DOCKER_LOGIN command lists the environment variables and filters the output to lines containing DOCKER_LOGIN, so it shows the variable and its value if the variable is set. If the command returns nothing, the $DOCKER_LOGIN variable was not set when the build started. In this case, no value was returned, and that is the cause of our failure.
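Note that printenv | grep prints the value of any variable it finds, so running the same check for DOCKER_PWD would echo the password to your terminal. As an alternative sketch, the hypothetical check_env helper below (not a CircleCI feature) reports only whether each variable is present in the environment. It relies on printenv NAME exiting with a non-zero status when NAME is not found.

```shell
#!/usr/bin/env bash
# Hypothetical helper for the SSH session: report whether each named
# variable is present in the environment without printing its value.
check_env() {
  local name
  for name in "$@"; do
    # printenv NAME exits non-zero when NAME is not in the environment.
    if ! printenv "$name" >/dev/null; then
      echo "$name: not set"
    elif [ -z "$(printenv "$name")" ]; then
      echo "$name: set but empty"
    else
      echo "$name: set"
    fi
  done
}

# Usage inside the SSH session:
#   check_env DOCKER_LOGIN DOCKER_PWD
```

Distinguishing "not set" from "set but empty" helps separate a variable that was never added to the project from one that was added with a blank value.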

Fixing the build

We have now verified that the $DOCKER_LOGIN environment variable is missing. To fix the build, add both $DOCKER_LOGIN and $DOCKER_PWD to the project using the CircleCI dashboard. Because these values are very sensitive, define them in the project settings, where the CircleCI platform stores them securely, rather than in config.yml. To set the variables:

- Click Add Project in the left menu of the CircleCI dashboard

- Find the project’s name in the projects list and click Set Up Project

- Click the Project cog in the top right area of the dashboard

- In the Build Settings section, click Environment Variables

- Click Add Variable

In the Add an Environment Variable dialog box, define the environment variables needed for this build:

- Name: DOCKER_LOGIN, Value: your Docker Hub username

- Name: DOCKER_PWD, Value: your Docker Hub password

Setting these environment variables correctly is critical to the build successfully completing.

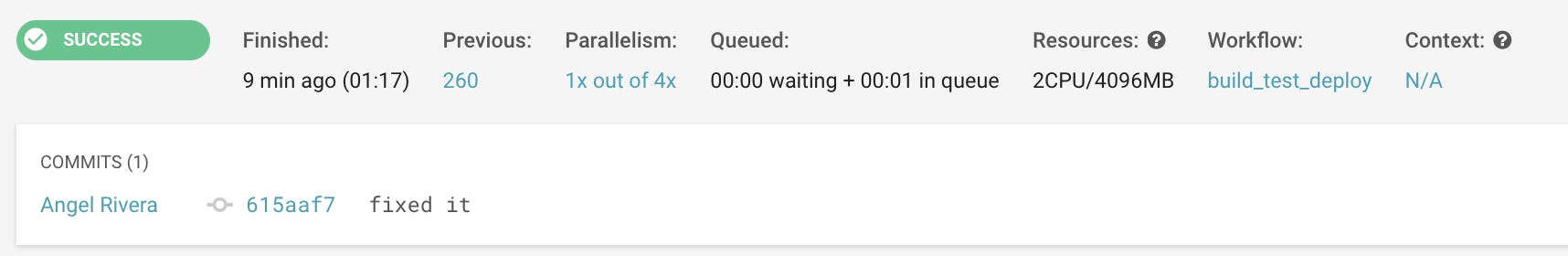

Rerunning the build

Now that we have set the required environment variables, we can rerun the failed build to confirm that the fix works. Go to the CircleCI dashboard and click Rerun from Beginning to start a new build of the project, then wait for the build to complete successfully.

Wrapping up

In this post, I explained why developers need SSH access to debug CI/CD pipelines, and I demonstrated how CircleCI’s SSH feature lets any user securely and easily access their execution environments and debug failed builds in real time. This feature empowers developers to quickly identify and fix broken builds so that they can focus their time and attention on building the new and innovative features users want.