Rerun failed tests


Use rerun failed tests to rerun only a subset of tests, instead of an entire test suite, when a transient test failure arises.

Introduction

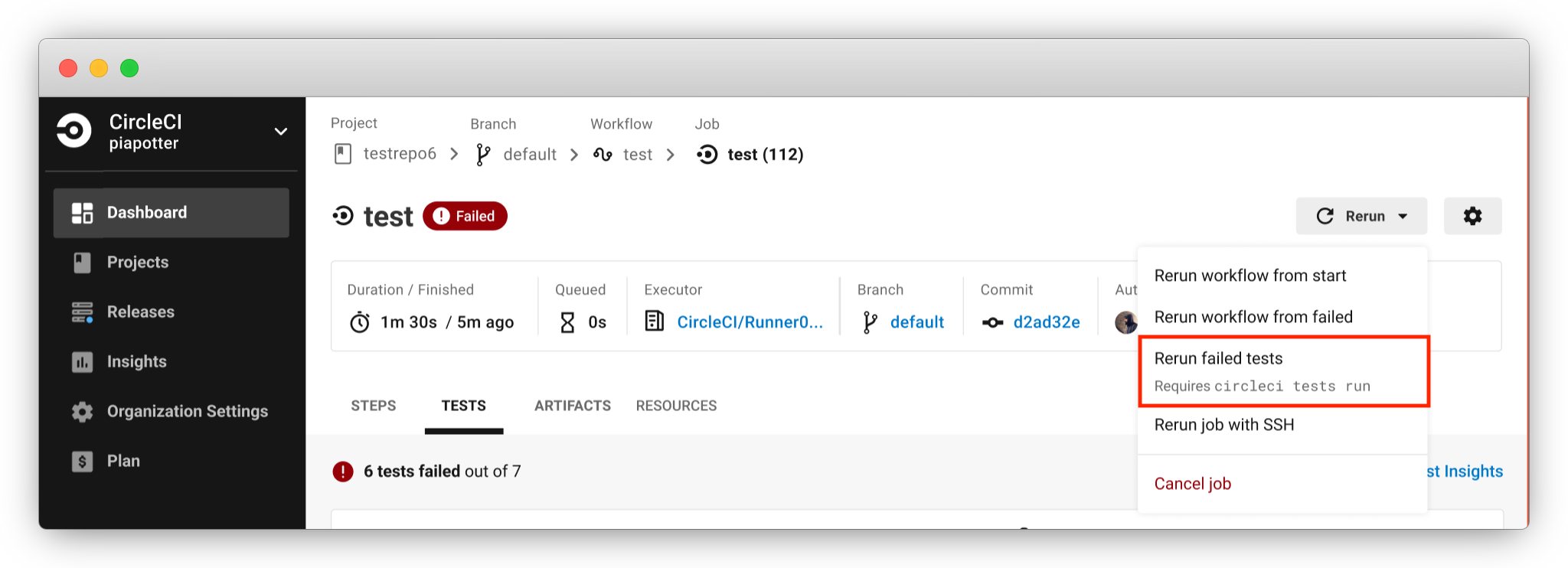

If a transient test failure arises in a pipeline, select Rerun failed tests to rerun only a subset of tests (your failed tests) instead of the entire test suite. Rerun failed tests runs a new workflow, which appears within your original pipeline. The rerun is created from the same commit as the failed workflow, not from subsequent commits.

Historically, when a testing job in a workflow had flaky tests, the only option to get to a successful workflow was to rerun your workflow from failed. Rerun from failed executes all tests, including tests that passed, which prolongs time-to-feedback and consumes credits unnecessarily. Having the option to rerun only failed tests is a more efficient way to rerun your testing workflows.

Set up your test jobs to use circleci tests run to make the Rerun failed tests option available.

Prerequisites

In order to use the rerun failed tests feature, your job must meet the following requirements:

- Your testing job must be configured to upload test results to CircleCI using the store_test_results step.
- A file or classname attribute must be present in the JUnit XML output.
- The testing job must use circleci tests run to execute tests.

The next sections provide instructions to get this set up, followed by examples for popular test frameworks.

If your current job is using intelligent test splitting, you must change the circleci tests split command to circleci tests run combined with a --split-by= flag.

Quickstart

To use the rerun failed tests feature, run your tests using the circleci tests run command. The basic structure of your testing command should be:

- Create a directory for your results.
- Glob your test files.
- Make your test files available as standard input (stdin).
- Run tests with circleci tests run. Your tests should be run with the --command flag, using xargs to get the tests from stdin.
- If you want to use intelligent test splitting, use the --split-by= flag as shown in the examples on the Parallelism and test splitting page.

```yaml
jobs:
  test:
    docker:
      - image: cimg/python:3.7.12
    steps:
      - checkout
      - run:
          name: Run tests
          command: |
            mkdir test-results
            TEST_FILES=$(circleci tests glob "**/test_*.py")
            echo "$TEST_FILES" | circleci tests run --command="xargs pytest -o junit_family=legacy --junitxml=test-results/junit.xml" --verbose
      - store_test_results:
          path: test-results
```

For a list of options for the circleci tests run command, see the Parallelism and test splitting page. You can also access the CLI help for circleci tests run by running circleci tests run --help in a CircleCI job.
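To make the role of xargs concrete, the following sketch simulates locally what happens to the list of test files that arrives on stdin of the --command string. It does not use the CircleCI CLI: echo stands in for pytest so that the resulting command line is printed instead of run, and the file names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical list of selected test files, one per line, as it might arrive on stdin.
printf 'tests/test_api.py\ntests/test_models.py\n' > /tmp/selected_tests.txt

# xargs reads whitespace-separated items from stdin and appends them as
# arguments to the command. `echo` prints the command line that pytest
# would have received instead of running it.
xargs echo pytest -o junit_family=legacy --junitxml=test-results/junit.xml < /tmp/selected_tests.txt
```

Without xargs, the file list would only be sent to pytest's stdin, which pytest does not read as a list of test files, so it would fall back to collecting every test it can discover.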

The next section provides a more detailed example of migrating from circleci tests split to circleci tests run to access the rerun failed tests feature.

Migrate from circleci tests split to circleci tests run

This section shows how to configure your pipelines to enable the rerun failed tests feature. The following two sections show a before and after version of a job configured to run pytest tests, illustrating how to get from an existing configuration using circleci tests split to one using circleci tests run, which is a requirement for using the rerun failed tests feature. Your starting point may be different: if you are not already using circleci tests split, refer to the after example to see how to configure your job to use circleci tests run.

The example used in this section includes the use of intelligent test splitting to optimize testing time. Test splitting and parallelism are not required to use the rerun failed tests feature.

Before: example .circleci/config.yml file

```yaml
jobs:
  test:
    docker:
      - image: cimg/python:3.7.12
    parallelism: 4 # use parallelism and test splitting to run tests across parallel execution environments
    steps:
      - checkout
      - run:
          name: Run tests
          command: |
            mkdir test-results
            TEST_FILES=$(circleci tests glob "**/test_*.py" | circleci tests split --split-by=timings)
            pytest -o junit_family=legacy --junitxml=test-results/junit.xml $TEST_FILES
      - store_test_results:
          path: test-results
```

This example snippet is from a CircleCI configuration file that does the following:

- Executes Python test files whose names start with test_ and end in .py.
- Splits tests by previous timing results (you can follow this tutorial on intelligent test splitting).
- Stores the test results in a new directory called test-results.
- Uploads test results to CircleCI using store_test_results.

The -o junit_family=legacy option is present to ensure that the generated test results contain the file attribute.

After: example .circleci/config.yml file

In the snippet below, the previous example has been updated to use the circleci tests run command to allow for rerunning only failed tests.

```yaml
jobs:
  test:
    docker:
      - image: cimg/python:3.7.12
    parallelism: 4 # use parallelism and test splitting to run tests across parallel execution environments
    steps:
      - checkout
      - run:
          name: Run tests
          command: |
            mkdir test-results
            TEST_FILES=$(circleci tests glob "**/test_*.py")
            echo "$TEST_FILES" | circleci tests run --command="xargs pytest -o junit_family=legacy --junitxml=test-results/junit.xml" --verbose --split-by=timings # --split-by=timings is optional, only use it if you are using CircleCI's test splitting
      - store_test_results:
          path: test-results
```

This example snippet, which has been altered to use circleci tests run, does the following:

- Makes a directory called test-results to store your test results.
- TEST_FILES=$(circleci tests glob "**/test_*.py"): Uses CircleCI's glob command to put together a list of test files. In this case, we are looking for any test file that starts with test_ and ends with .py. Ensure that the glob string is enclosed in quotes.
- echo "$TEST_FILES" |: Passes the list of test files to the circleci tests run command as standard input (stdin).
- circleci tests run --command="xargs pytest -o junit_family=legacy --junitxml=test-results/junit.xml" --verbose --split-by=timings:
  - Invokes circleci tests run and specifies the original command (pytest) used to run tests as part of the --command= parameter. This is required. xargs must be present as well.
  - --verbose is an optional parameter for circleci tests run that enables more verbose debugging messages.
  - Optional: --split-by=timings enables intelligent test splitting by timing for circleci tests run. The --split-by=timings flag is not a requirement of using circleci tests run. If your testing job is not using CircleCI's test splitting, you can safely omit this parameter.
- As with circleci tests split --split-by=timings --timings-type=, you can specify the timing type for circleci tests run with a --timings-type= flag. You can pass file, classname, or name (splits by test name). For pytest, --timings-type=name is the most straightforward to integrate with the way that pytest outputs its JUnit XML out-of-the-box.

Verify the configuration

After updating your configuration, run the job that runs tests again and make sure that the same number of tests are being executed as before the config.yml change.

Then, the next time you encounter a test failure on that job, select Rerun failed tests. If the --verbose setting is enabled, you should see output similar to the following:

```
Installing circleci-tests-plugin-cli plugin.
circleci-tests-plugin-cli plugin Installed. Version: 1.0.5976-439c1fc
DEBUG[2023-05-18T22:09:08Z] Attempting to read from stdin. This will hang if no input is provided.
INFO[2023-06-14T23:52:50Z] received failed tests from workflow *****
DEBUG[2023-05-18T22:09:08Z] 2 test(s) failed out of 56 total test(s). Rerunning 1 test file(s)
DEBUG[2023-06-14T23:52:50Z] if all tests are being run instead of only failed tests, ensure your JUnit XML has a file or classname attribute.
INFO[2023-05-18T22:09:08Z] starting execution
DEBUG[2023-05-18T22:09:08Z] Received: ****
```

If the step's output shows that failed tests are being rerun, the functionality is configured correctly. You will also see output that shows the total number of failed tests from the original job run.

The job should only rerun tests that are from a classname or file that had at least one test failure when the Rerun failed tests button was selected. If you are seeing different behavior, comment on this Discuss post for support.

Additional examples

Configure a job running Cypress tests

- Use the cypress-circleci-reporter package (note that this is a third-party tool that is not maintained by CircleCI). You can install it in your .circleci/config.yml or add it to your package.json. Example for adding it to .circleci/config.yml:

```yaml
# add required reporters (or add to package.json)
- run:
    name: Install coverage reporter
    command: |
      npm install --save-dev cypress-circleci-reporter
```

- Use the cypress-circleci-reporter and circleci tests run, and upload test results to CircleCI:

```yaml
- run:
    name: run tests
    command: |
      mkdir test_results
      cd ./cypress
      npm ci
      npm run start &
      circleci tests glob "cypress/**/*.cy.js" | circleci tests run --command="xargs npx cypress run --reporter cypress-circleci-reporter --spec" --verbose --split-by=timings # --split-by=timings is optional, only use it if you are using CircleCI's test splitting
- store_test_results:
    path: test_results
```

- Ensure you are using xargs in your circleci tests run command to pass the list of test files/classnames via stdin to --command.
- Update the glob command to match your specific use case. If your current job is using CircleCI's intelligent test splitting, you must change the circleci tests split command to circleci tests run with the --split-by=timings parameter. If you are not using test splitting, --split-by=timings can be omitted.

Cypress may output the warning "Warning: It looks like you’re passing --spec a space-separated list of arguments:". You can ignore this warning, or remove it by following the guidance from our community forum.

Configure a job running Django tests

Django takes test filenames as input in a format that uses dots (.). However, it outputs JUnit XML in a format that uses slashes (/). To account for this, get the list of test filenames first, change the filenames to be separated by dots (.) instead of slashes (/), and pass the filenames into the test command.

Ensure you are using xargs in your circleci tests run command to pass the list of test files/classnames via stdin to --command.
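The path rewriting in the Run tests step below can be tried locally on its own. This sketch runs the same tr and sed pipeline over a hypothetical file list. The tests/ directory layout is an assumption, and the final sed that strips "tests." only makes sense if your Django test labels should not include that prefix.

```shell
#!/usr/bin/env bash
# Hypothetical contents of files.txt as written by `xargs echo` (one space-separated line).
echo "tests/polls/test_models.py tests/polls/test_views.py" > /tmp/files.txt

# Same pipeline as the Django example: slashes become dots, the .py suffix is
# removed, and the "tests." package prefix is stripped.
tr "/" "." < /tmp/files.txt | sed "s/\.py//g" | sed "s/tests\.//g"
# prints: polls.test_models polls.test_views
```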

```yaml
- run:
    name: get tests
    command: |
      # Get the test file names, write them to files.txt, and split them by historical timing data
      circleci tests glob "**/test*.py" | circleci tests run --command=">files.txt xargs echo" --verbose --split-by=timings # --split-by=timings is optional
      [ -s files.txt ] || circleci-agent step halt # if this is a rerun and there are no tests to rerun for this parallel run, stop execution
- run:
    name: Run tests
    command: |
      # Change filepaths into a format Django accepts (replace slashes with dots). Save the filenames in a TESTFILES variable
      cat files.txt | tr "/" "." | sed "s/\.py//g" | sed "s/tests\.//g" > circleci_test_files.txt
      cat circleci_test_files.txt
      TESTFILES=$(cat circleci_test_files.txt)
      # Run the tests (TESTFILES) with the reformatted test file names
      pipenv run coverage run manage.py test --parallel=8 --verbosity=2 $TESTFILES
- store_test_results:
    path: test-results
```

Configure a job running Elixir tests

- Modify your test command to use circleci tests run:

```yaml
- run:
    name: Run tests
    command: |
      circleci tests glob 'lib/**/*_test.exs' | circleci tests run --command='xargs -n1 echo > test_file_paths.txt'
      mix ecto.setup --quiet
      cat test_file_paths.txt | xargs mix test
- store_test_results:
    path: _build/test/my_app/test-junit-report.xml
    when: always
```

- Ensure you are using xargs in your circleci tests run command to pass the list of test files/classnames via stdin to --command.
- Update the glob command to match your use case.
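The Elixir example uses xargs -n1 echo, while other examples on this page use xargs echo. The difference is only in layout: -n1 runs echo once per item, so each path lands on its own line, whereas plain xargs echo writes all paths on one space-separated line. A local check with hypothetical paths:

```shell
#!/usr/bin/env bash
# Hypothetical test paths, as they might arrive on stdin.
paths='lib/a_test.exs lib/b_test.exs'

# One echo per item: lib/a_test.exs and lib/b_test.exs on separate lines.
echo "$paths" | xargs -n1 echo
# One echo for all items: both paths on a single line.
echo "$paths" | xargs echo
```

Either layout works when the file is read back with xargs, as in cat test_file_paths.txt | xargs mix test, because xargs treats newlines and spaces alike; the one-per-line form is easier to scan in logs.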

Configure a job running Go tests

- Modify your test command to use circleci tests run:

```yaml
- run:
    command: go list ./... | circleci tests run --command "xargs gotestsum --junitfile junit.xml --format testname --" --split-by=timings --timings-type=name
- store_test_results:
    path: junit.xml
```

- Ensure you are using xargs in your circleci tests run command to pass the list of test files/classnames via stdin to --command.
- If your current job is using CircleCI's intelligent test splitting, you must change the circleci tests split command to circleci tests run with the --split-by=timings parameter. If you are not using test splitting, --split-by=timings can be omitted.

Configure a job running JavaScript/TypeScript (Jest) tests

- Install the jest-junit dependency. You can add this step in your .circleci/config.yml:

```yaml
- run:
    name: Install JUnit coverage reporter
    command: yarn add --dev jest-junit
```

  You can also add it to your jest.config.js file by following these usage instructions.

- Modify your test command to look something similar to:

```yaml
- run:
    command: |
      npx jest --listTests | circleci tests run --command="JEST_JUNIT_ADD_FILE_ATTRIBUTE=true xargs npx jest --config jest.config.js --runInBand --" --verbose --split-by=timings
    environment:
      JEST_JUNIT_OUTPUT_DIR: ./reports/
- store_test_results:
    path: ./reports/
```

- Ensure you are using xargs in your circleci tests run command to pass the list of test files/classnames via stdin to --command.
- Update the npx jest --listTests command to match your use case. See the Jest section in the Collect Test Data document for details on how to output test results in an acceptable format for jest. If your current job is using CircleCI's intelligent test splitting, you must change the circleci tests split command to circleci tests run with the --split-by=timings parameter. If you are not using test splitting, --split-by=timings can be omitted.

JEST_JUNIT_ADD_FILE_ATTRIBUTE=true is added to ensure that the file attribute is present. Instead of including it in .circleci/config.yml, you can add it to your jest.config.js file by using the following attribute: addFileAttribute="true".
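The JEST_JUNIT_ADD_FILE_ATTRIBUTE=true prefix in the --command string works because a variable assignment placed before xargs is exported into the environment of xargs and inherited by every command that xargs starts. The following sketch checks this locally; sh -c stands in for npx jest, and the test file name is hypothetical.

```shell
#!/usr/bin/env bash
# The assignment before xargs applies to xargs and to every child it spawns.
# `sh -c` plays the role of `npx jest`: it prints the variable it inherited and
# the test files that xargs appended ("$*"); the trailing "sh" fills $0.
printf 'src/sum.test.js\n' |
  JEST_JUNIT_ADD_FILE_ATTRIBUTE=true xargs sh -c 'echo "attr=$JEST_JUNIT_ADD_FILE_ATTRIBUTE files=$*"' sh
# prints: attr=true files=src/sum.test.js
```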

Configure a job running Kotlin or Gradle tests

- Modify your test command to use circleci tests run:

```yaml
- run:
    command: |
      cd src/test/java
      # Get the list of classnames of tests that should run on this node.
      circleci tests glob "**/*.java" | cut -c 1- | sed 's@/@.@g' | sed 's/.\{5\}$//' | circleci tests run --command=">classnames.txt xargs echo" --verbose --split-by=timings --timings-type=classname
      # If this is a rerun and this parallel run does not have tests to run, halt execution of this parallel run
      [ -s classnames.txt ] || circleci-agent step halt
- run:
    command: |
      # Format the arguments to "./gradlew test"
      GRADLE_ARGS=$(cat src/test/java/classnames.txt | awk '{for (i=1; i<=NF; i++) print "--tests",$i}')
      echo "Prepared arguments for Gradle: $GRADLE_ARGS"
      ./gradlew test $GRADLE_ARGS
- store_test_results:
    path: build/test-results/test
```

- Update the glob command to match your use case. If your current job is using CircleCI's intelligent test splitting, you must change the circleci tests split command to circleci tests run with the --split-by=timings parameter. If you are not using test splitting, --split-by=timings can be omitted.
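The glob-to-classname conversion and the awk formatting in the Gradle example can be checked locally without CircleCI. This sketch feeds a hypothetical file list through the same sed and awk commands (the no-op cut -c 1- is omitted); the com/example package path is an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical output of `circleci tests glob "**/*.java"` run from src/test/java.
printf 'com/example/FooTest.java\ncom/example/BarTest.java\n' > /tmp/globbed.txt

# Slashes become dots, then the trailing ".java" (5 characters) is removed,
# producing fully qualified class names on one line, as `xargs echo` writes them.
sed 's@/@.@g' /tmp/globbed.txt | sed 's/.\{5\}$//' | xargs echo > /tmp/classnames.txt

# Same awk program as the example: emit "--tests <class>" for every class name.
GRADLE_ARGS=$(awk '{for (i=1; i<=NF; i++) print "--tests",$i}' /tmp/classnames.txt)

# Unquoted expansion splits GRADLE_ARGS into separate arguments.
echo "./gradlew test" $GRADLE_ARGS
# prints: ./gradlew test --tests com.example.FooTest --tests com.example.BarTest
```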

Configure a job running PHPUnit tests

- Edit your test command to use circleci tests run:

```yaml
# Use phpunit-finder to output a list of tests to stdout for a test suite named functional
# Pass those tests as stdin to circleci tests run
- run:
    name: Run functional tests
    command: |
      TESTS_TO_RUN=$(/data/vendor/bin/phpunit-finder -- functional)
      echo "$TESTS_TO_RUN" | circleci tests run --command="xargs -I{} -d\" \" /data/vendor/bin/phpunit {} --log-junit /data/artifacts/phpunit/phpunit-functional-$(basename {}).xml" --verbose --split-by=timings
- store_test_results:
    path: artifacts/phpunit
    when: always
```

- Ensure you are using xargs in your circleci tests run command to pass the list of test files/classnames via stdin to --command.
- Note that this example uses a utility named phpunit-finder, which is a third-party tool that is not supported by CircleCI; use it at your own risk. If your current job is using CircleCI's intelligent test splitting, you must change the circleci tests split command to circleci tests run with the --split-by=timings parameter. If you are not using test splitting, --split-by=timings can be omitted.

Configure a job running Playwright tests

- Modify your test command to use circleci tests run:

```yaml
- run:
    command: |
      mkdir test-results # can also be switched out for passing PLAYWRIGHT_JUNIT_OUTPUT_NAME directly to Playwright
      pnpm run serve &
      TESTFILES=$(circleci tests glob "specs/e2e/**/*.spec.ts")
      echo "$TESTFILES" | circleci tests run --command="xargs pnpm playwright test --config=playwright.config.ci.ts --reporter=junit" --verbose --split-by=timings
- store_test_results:
    path: results.xml
```

- Ensure you are using xargs in your circleci tests run command to pass the list of test files/classnames via stdin to --command.
- Update the glob command to match your use case. If your current job is using CircleCI's intelligent test splitting, you must change the circleci tests split command to circleci tests run with the --split-by=timings parameter. If you are not using test splitting, --split-by=timings can be omitted. Note: you may also use Playwright's built-in environment variable (PLAYWRIGHT_JUNIT_OUTPUT_NAME) to specify where the JUnit XML output is written.

Ensure that you are using version 1.34.2 or later of Playwright. Earlier versions of Playwright may not output JUnit XML in a format that is compatible with this feature.

Configure a job running Ruby (RSpec) tests

- Add the following gem to your Gemfile:

```ruby
gem 'rspec_junit_formatter'
```

- Modify your test command to use circleci tests run:

```yaml
- run: mkdir ~/rspec
- run:
    command: |
      circleci tests glob "spec/**/*_spec.rb" | circleci tests run --command="xargs bundle exec rspec --format progress --format RspecJunitFormatter -o ~/rspec/rspec.xml" --verbose --split-by=timings
- store_test_results:
    path: ~/rspec
```

- --format RspecJunitFormatter must come after any other --format RSpec argument.
- Ensure you are using xargs in your circleci tests run command to pass the list of test files/classnames via stdin to --command.
- Update the glob command to match your use case. See the RSpec section in the Collect Test Data document for details on how to output test results in an acceptable format for rspec. If your current job is using CircleCI's intelligent test splitting, you must change the circleci tests split command to circleci tests run with the --split-by=timings parameter. If you are not using test splitting, --split-by=timings can be omitted.

Configure a job running Ruby (RSpec) tests across parallel CI nodes with Knapsack Pro

Knapsack Pro dynamically distributes your tests based on up-to-date test execution data to achieve an optimal split.

To use Knapsack Pro with the CircleCI rerun failed tests feature, follow these steps:

- Install Knapsack Pro in your project.
- Add the following gem to your Gemfile:

```ruby
gem 'rspec_junit_formatter'
```

- Modify your test command to use circleci tests run:

```yaml
- run:
    name: RSpec with Knapsack Pro
    command: |
      mkdir -p /tmp/test-results
      export KNAPSACK_PRO_RSPEC_SPLIT_BY_TEST_EXAMPLES=true
      export KNAPSACK_PRO_TEST_FILE_LIST_SOURCE_FILE=/tmp/tests_to_run.txt
      # Retrieve the tests to run (all or just the failed ones), and let Knapsack Pro split them optimally.
      circleci tests glob "spec/**/*_spec.rb" | circleci tests run --index 0 --total 1 --command ">$KNAPSACK_PRO_TEST_FILE_LIST_SOURCE_FILE xargs -n1 echo" --verbose
      bundle exec rake "knapsack_pro:queue:rspec[--format documentation --format RspecJunitFormatter --out /tmp/test-results/rspec.xml]"
- store_test_results:
    path: /tmp/test-results
- store_artifacts:
    path: /tmp/test-results
    destination: test-results
```

- You may also enable Split by Test Examples (the KNAPSACK_PRO_RSPEC_SPLIT_BY_TEST_EXAMPLES=true setting in the example above) to parallelize tests across CI nodes by individual it/specify. This is useful when you have slow test files but do not want to manually split test examples into smaller test files.

Configure a job running Ruby (Cucumber) tests

- Modify your test command to look something similar to:

```yaml
- run: mkdir -p ~/cucumber
- run:
    command: |
      circleci tests glob "features/**/*.feature" | circleci tests run --command="xargs bundle exec cucumber --format junit,fileattribute=true --out ~/cucumber/junit.xml" --verbose --split-by=timings
- store_test_results:
    path: ~/cucumber
```

- Ensure you are using xargs in your circleci tests run command to pass the list of test files/classnames via stdin to --command.
- Update the glob command to match your use case. See the Cucumber section in the Collect Test Data document for details on how to output test results in an acceptable format for Cucumber. If your current job is using CircleCI's intelligent test splitting, you must change the circleci tests split command to circleci tests run with the --split-by=timings parameter. If you are not using test splitting, --split-by=timings can be omitted.

Configure a job running Ruby (minitest) tests across parallel CI nodes with Knapsack Pro

Knapsack Pro dynamically distributes your tests based on up-to-date test execution data to achieve an optimal split.

To use Knapsack Pro with the CircleCI rerun failed tests feature, follow these steps:

- Install Knapsack Pro in your project.
- Add the following gem to your Gemfile:

```ruby
gem 'minitest-ci'
```

- Modify your test command to use circleci tests run:

```yaml
- run:
    name: Minitest with Knapsack Pro
    command: |
      export KNAPSACK_PRO_TEST_FILE_LIST_SOURCE_FILE=/tmp/tests_to_run.txt
      # Retrieve the tests to run (all or just the failed ones), and let Knapsack Pro split them optimally.
      circleci tests glob "test/**/*_test.rb" | circleci tests run --index 0 --total 1 --command ">$KNAPSACK_PRO_TEST_FILE_LIST_SOURCE_FILE xargs -n1 echo" --verbose
      bundle exec rake "knapsack_pro:queue:minitest[--verbose --ci-report --no-ci-clean]"
- store_test_results:
    path: test/reports
```

Output test files only

If your testing setup on CircleCI is not compatible with invoking your test runner in the circleci tests run command, you can opt to use circleci tests run to receive the file names, output the file names, and save the file names to a temporary location. You can then subsequently invoke your test runner using the generated file names.

Example:

```yaml
- run:
    command: |
      circleci tests glob "src/**/*js" | circleci tests run --command=">files.txt xargs echo" --verbose --split-by=timings # --split-by=timings is optional
      [ -s files.txt ] || circleci-agent step halt # if this is a rerun and there are no tests to rerun for this parallel run, stop execution
- run:
    name: Run tests
    command: |
      mkdir test-results
      ... # pass files.txt into your test command
- store_test_results:
    path: test-results
```

- The snippet above writes the list of test file names to files.txt.
- On a non-rerun, this list contains all of the test file names.
- On a rerun, the list is a subset of file names (the test file names that had at least one test failure in the previous run).
- You can pass the list of test file names from files.txt into, for example, your custom makefile.
- If using parallelism, CircleCI spins up the same number of containers/VMs as the parallelism level set in .circleci/config.yml. However, not all parallel containers/VMs will execute tests. For the parallel containers/VMs that will not run tests, files.txt may not be created. The halt command ensures that when a parallel run is not executing tests, the parallel run is stopped.
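The consuming side of this pattern (stop if the list is missing or empty, otherwise hand every listed file to your own runner) can be sketched as a small shell function. run_from_list and my-test-runner are hypothetical names: echo stands in for the real runner so the sketch only prints the command, and return 0 stands in for circleci-agent step halt, which is only available inside a CircleCI job.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run the tests listed in a file such as files.txt.
run_from_list() {
  local list="$1"
  # -s is true only if the file exists and is not empty. A parallel run that
  # received no tests on a rerun may have no list, or an empty one.
  if [ ! -s "$list" ]; then
    echo "no tests assigned to this node"
    return 0  # the config above calls `circleci-agent step halt` at this point
  fi
  # Pass every listed file as an argument to the (hypothetical) test runner.
  xargs echo my-test-runner < "$list"
}

echo "src/a.test.js src/b.test.js" > /tmp/files.txt
rm -f /tmp/no-such-files-list.txt
run_from_list /tmp/files.txt                # prints: my-test-runner src/a.test.js src/b.test.js
run_from_list /tmp/no-such-files-list.txt   # prints: no tests assigned to this node
```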

Known limitations

- If your testing job uses parallelism and test splitting, the job will spin up the number of containers/virtual machines (VMs) that are specified with the parallelism key. However, the step that runs tests for each of those parallel containers/VMs will only run a subset of tests, or no tests, after the tests are split across the total number of parallel containers/VMs. For example, if parallelism is set to eight, there may only be enough tests after the test splitting occurs to "fill" the first parallel container/VM. The remaining seven containers/VMs will still start up, but they will not run any tests when they get to the test execution step.

  In most cases, you can still observe substantial time and credit savings despite spinning up containers/VMs that do not run tests.

  If you would like to maximize credit savings, you can check whether the parallel container/VM will execute tests as the first step in a job, and if there are no tests to run, terminate job execution. With this approach, ensure that the logic to run tests happens in a subsequent step. For example:

```yaml
steps:
  - checkout
  - run: |
      mkdir -p ./tmp && \
      >./tmp/tests.txt && \
      circleci tests glob "spec/**/*_spec.rb" | circleci tests run --command=">./tmp/tests.txt xargs echo" --split-by=timings # Get the list of tests for this container/VM
      [ -s tmp/tests.txt ] || circleci-agent step halt # if there are no tests, terminate execution after this step
  - run:
      name: Run tests
      command: |
        mkdir test-results
        ...
  - store_test_results:
      path: test-results
```

  See Parallel rerun failure for a workaround to avoid failures if you are also using persist_to_workspace. The halt command does not stop the current step immediately: the rest of the current step still runs, regardless of whether tests.txt has content. Make sure to place the command that executes tests in the following step.

- Orbs that run tests may not work with this new functionality at this time.
- If a shell script is invoked to run tests, circleci tests run should be placed in the shell script itself, and not in .circleci/config.yml. Alternatively, see the Output test files only section above to pipe the list of test files to be run to a .txt file, and then pass the list of test file names to your shell script.
- Jobs that are older than the retention period for workspaces for the organization cannot be rerun with "Rerun failed tests".
- Jobs that upload code coverage reports: to ensure that code coverage reports from the original job run are persisted to an artifact in addition to the report that is generated on a rerun, see the following example for a sample Go project:

```yaml
jobs:
  test-go:
    # Install go modules and run tests
    docker:
      - image: cimg/go:1.20
    parallelism: 2
    steps:
      - checkout
      # Cache dependencies
      - restore_cache:
          key: go-mod-{{ checksum "go.mod" }}
      - run:
          name: Download Go modules
          command: go mod download
      - save_cache:
          key: go-mod-{{ checksum "go.mod" }}
          paths:
            - /home/circleci/go/pkg/mod
      - run:
          name: Run tests with coverage being saved
          command: go list ./... | circleci tests run --timings-type "name" --command="xargs gotestsum --junitfile junit.xml --format testname -- -coverprofile=cover.out"
      # For a rerun that succeeds, restore the coverage files from the failed run
      - restore_cache:
          key: coverage-{{.Revision}}-{{.Environment.CIRCLE_NODE_INDEX}}
      # Save the coverage for rerunning failed tests. CircleCI will skip saving if this revision key has already been saved.
      - save_cache:
          key: coverage-{{.Revision}}-{{.Environment.CIRCLE_NODE_INDEX}}
          paths:
            - cover.out
          when: always
      # Needed to rerun failed tests
      - store_test_results:
          path: junit.xml
          when: always
      # Upload coverage file html so we can show it includes all the tests (not just rerun)
      - run:
          name: Save html coverage
          command: go tool cover -html=cover.out -o cover.html
          when: always
      - store_artifacts:
          path: cover.html
          when: always
workflows:
  test:
    jobs:
      - test-go
```

  The snippet above uses the built-in .Revision key to store a coverage report for the current VCS revision. On a successful rerun, the original job run's coverage report will be restored to include the coverage from the skipped (passed) tests. It can then be used in a downstream job for aggregation or analysis.

  A similar method can be used to ensure that the job following a rerun uses timing data for test splitting from both the original job run and the rerun. Instead of storing and restoring cover.out in the cache, store and restore the test results XML. If a similar method is not used, the job following a rerun may be slightly less efficient if using test splitting by timing.

- Rerun failed tests is not currently supported for the Windows execution environment.
- If your job runs two different types of tests in the same job, the feature may not work as expected. In this scenario, we recommend splitting the job into two jobs, each running a different set of tests with circleci tests run.

Troubleshooting

All tests are still being rerun

After configuring circleci tests run, if you see that all tests are rerun after selecting Rerun failed tests, check the following:

- Ensure that the --verbose setting is enabled when invoking circleci tests run. This displays which tests circleci tests run is receiving on a rerun.
- Use store_artifacts to upload the JUnit XML that contains test results to CircleCI. This is the same file (or files) that is uploaded to CircleCI with store_test_results.
- Manually inspect the newly uploaded JUnit XML via the Artifacts tab and ensure that there is a file= attribute or a classname attribute. If neither is present, you will see unexpected behavior when trying to rerun. Follow the instructions on this page to ensure that the test runner you are using outputs its JUnit XML test results with a file (preferred) or classname attribute. Comment in our community forum if you are still stuck.
- Ensure that xargs is present in the --command= argument.
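As a quick first pass before reading the XML by hand, a grep-based sketch like the following lists testcase elements that carry neither a file nor a classname attribute. It is a heuristic, not an XML parser: it assumes each opening testcase tag sits on a single line, and the function name and sample file are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print <testcase> opening tags that have neither a
# file= nor a classname= attribute. Empty output means every test case has
# at least one of them.
testcases_missing_attrs() {
  grep -o '<testcase[^>]*>' "$1" | grep -v -e ' file=' -e ' classname=' || true
}

# Hypothetical JUnit XML: the second test case lacks both attributes.
cat > /tmp/junit.xml <<'EOF'
<testsuite name="example" tests="2">
  <testcase classname="tests.test_api" name="test_ok" time="0.01"/>
  <testcase name="test_broken" time="0.02"/>
</testsuite>
EOF

testcases_missing_attrs /tmp/junit.xml
# prints: <testcase name="test_broken" time="0.02"/>
```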

No test names found in input source

If you see the following message: WARN[TIMESTAMP] No test names found in input source. If you were expecting test names, please check your input source.

Ensure that you are passing a list of test filenames (or classnames) via stdin to circleci tests run. The most common way to do this is to use a glob command: circleci tests glob "glob pattern" | circleci tests run --command="xargs test command" --verbose

Test file names include spaces

circleci tests run expects input to be space- or newline-delimited. If your test file names contain spaces, this may pose a problem. For example, pytest may generate test names that contain whitespace. One possible workaround is to use specific IDs for the tests with whitespace in their names, following the instructions in the official pytest documentation.
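The following sketch shows why spaces are a problem: xargs, as used in the --command examples on this page, splits its input on blanks as well as newlines, so a single hypothetical file name containing spaces reaches the test command as several separate arguments. printf stands in for the test command and prints each argument it receives in brackets, one per line.

```shell
#!/usr/bin/env bash
# One test file whose name contains spaces (hypothetical).
printf 'tests/test login flow.py\n' | xargs printf '[%s]\n'
# prints three separate arguments:
# [tests/test]
# [login]
# [flow.py]
```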

Parallel rerun failure

If your job runs tests in parallel and persists files to a workspace, a parallel run may fail on a rerun because the persist_to_workspace step could not find any contents in the specified directory. This can happen because a parallel run does not always execute tests on a rerun if there are not enough tests to distribute across all parallel runs.

To avoid such a failure, add a mkdir command before you run any tests to set up the directory (or directories) that will be persisted to a workspace.

```yaml
steps:
  - checkout
  - run: mkdir no_files_here
  # Placeholder: your test command that uses circleci tests run and
  # populates no_files_here when tests are run
  - run: <your test command>
  - store_test_results:
      path: ./test-results
  - store_artifacts:
      path: ./test-results
  - persist_to_workspace:
      root: .
      paths:
        - no_files_here
```

On a rerun, if a parallel run executes tests, no_files_here is populated. If it runs no tests, the persist_to_workspace step still succeeds because the no_files_here directory exists.

Approval jobs

If your workflow has an approval job and a failed job containing failed tests that you want to rerun, you cannot select Rerun failed tests until the workflow has terminated. You must therefore cancel the approval job before you can select Rerun failed tests.

Error: can not rerun failed tests: no failed tests could be found

If a failed job used circleci tests run and uploaded test results, but none of those results are test failures, the "Rerun failed tests" button can still be clicked. However, the new workflow then fails with the following message: Error: can not rerun failed tests: no failed tests could be found.

To resolve this error, use "Rerun workflow from failed" to rerun all tests. "Rerun failed tests" works only when failed tests are reported in the "Tests" tab.

FAQs

Question: I have a question or issue. Where do I go?

Answer: Leave a comment on the Discuss post.

Question: Will this functionality rerun individual tests?

Answer: No. It reruns the test classnames or test filenames (file) that had at least one individual test failure.

Question: When can I use the option to Rerun failed tests?

Answer: The job must be uploading test results to CircleCI and using circleci tests run.

Question: I don’t see my test framework on this page. Can I still use the functionality?

Answer: Yes, as long as your job meets the prerequisites outlined above. The rerun failed tests functionality is test runner- and test framework-agnostic. You can use the methods described in the Collect test data document to ensure that the job uploads test results. Note that the classname and file attributes are not always present by default, so your job may require additional configuration.

From there, follow the Quickstart section to edit your test command to use circleci tests run.

If you run into issues, comment on the Discuss post.

Question: Can I see in the web UI whether a job was rerun using "Rerun failed tests"?

Answer: Not currently.

Question: My Maven Surefire tests fail when I try to set up this feature. What can I do?

Answer: You may need to add the -DfailIfNoTests=false flag so that the testing framework ignores skipped tests instead of reporting a failure when it encounters a skipped test in a dependent module.

Question: Can I specify timing type for test splitting using circleci tests run?

Answer: Yes. As with circleci tests split --split-by=timings --timings-type=, you can pass a --timings-type= flag to circleci tests run. Valid values are file, classname, or testname (testname splits by test name).

Question: Are tests that my test runner reported as "Skipped" or "Ignored" rerun when I select Rerun failed tests?

Answer: No, only test files/classnames that have at least one test case reported as "Failed" will be rerun.

Question: I use Cypress, and my job seems to upload test results correctly, but rerunning failed tests still runs all of the tests instead of only the failed ones. Why?

Answer: The JUnit XML files may not contain the metadata that rerunning failed tests requires. Try using the cypress-circleci-reporter package so that the uploaded test results have the proper JUnit XML format.

Question: What is the oldest job that can use "rerun failed tests"?

Answer: Rerunning failed tests is currently available for workflows that are less than 15 days old.

Question: Can I use the --record functionality with Cypress and "rerun failed tests"?

Answer: Yes. Pass the --group and --ci-build-id flags to Cypress to group the results of CircleCI’s parallelization. Because the name passed to --group must be unique within the run, you can build it from CircleCI’s built-in environment variables:

```shell
circleci tests glob ".cypress/**/*.spec.js" | circleci tests run --command="xargs npx cypress run --record --group $CIRCLE_BUILD_NUM-$CIRCLE_NODE_INDEX --ci-build-id $CIRCLE_BUILD_NUM --reporter cypress-circleci-reporter --spec" --verbose --split-by=timings
```

Question: Can I use timings-type=testname?

Answer: To use timings-type=testname, your test runner or framework must accept a list of test names as input (as opposed to classnames or file names). Most test frameworks, including RSpec, do not. If yours does, pipe the test names into circleci tests run instead of piping in file or class names with circleci tests glob. This lets CircleCI find the historical test data for individual test names and split them into logical groups.