Scheduling load tests and persisting output with k6

Software Engineer

In this k6 series, I have covered HTTP request testing with k6 and performance testing with k6. I designed these tutorials to introduce you to k6 and to show you how to use it for performance testing of microservices. This third tutorial in the series covers how to store your k6 test results locally and how to schedule your load tests using CircleCI’s scheduled pipelines feature.

Prerequisites

To follow this tutorial, you will need:

- Basic knowledge of JavaScript

- Basic knowledge of HTTP requests and testing them

- Node.js (version >= 10.13) installed on your system

- k6 installed on your system

- A CircleCI account

- A GitHub account

If you have not yet worked through the k6 performance testing tutorial, I recommend doing that before you start this one.

This tutorial builds directly on what we built in the “Performance testing with k6” tutorial. Your first step is to clone the GitHub repository for that tutorial.

You can clone the GitHub repository with this command:

```shell
git clone https://github.com/CIRCLECI-GWP/api-performance-testing-with-k6.git
```

Great! You are ready to start learning!

Persisting k6 results output

In the tutorial for performance testing with k6, I covered how to output k6 metrics and logs to the cloud for analysis and visibility by your whole team. In this tutorial, I will show you how to persist the test output locally, on the machine where the tests run.

k6 offers multiple options for exporting test results so you can review them later. Services that k6 can export to include Amazon CloudWatch, Apache Kafka, InfluxDB, New Relic, Prometheus, Datadog, and others. This tutorial focuses on exporting results to a local JSON file.

To send k6 results to an output, you pass the `--out` flag. In the previous tutorial, you used it to send results to the cloud. In this tutorial, you will use it while running a simple load test against a Heroku-hosted API, using a test script from the repository you cloned earlier.

The load test ramps up from 0 to 3 virtual users over 30 seconds. During that time, the test:

- Creates multiple todo items using the API.

- Checks that each todo item was created correctly by the application endpoints.

Here is a code snippet for this test:

```javascript
// create-todo-http-requests.js
import http from 'k6/http';
import { check, group } from 'k6';
import { Trend } from 'k6/metrics';

// Custom Trend metrics that record the response time of each endpoint.
const uptimeTrendCheck = new Trend('/GET API uptime');
const todoCreationTrend = new Trend('/POST Create a todo');

export const options = {
  stages: [
    { duration: '0.5m', target: 3 }, // simulate ramp-up of traffic from 0 to 3 users over 0.5 minutes.
  ],
};

export default function () {
  // Verify that the API is up and responding.
  group('API uptime check', () => {
    const response = http.get('https://todo-app-barkend.herokuapp.com/todos/');
    uptimeTrendCheck.add(response.timings.duration);
    check(response, {
      'status code should be 200': (res) => res.status === 200,
    });
  });

  let todoID;

  // Create a todo item and verify that it was created correctly.
  group('Create a Todo', () => {
    const response = http.post('https://todo-app-barkend.herokuapp.com/todos/', {
      task: 'write k6 tests',
    });
    todoCreationTrend.add(response.timings.duration);
    todoID = response.json()._id; // ID of the newly created todo item
    check(response, {
      'status code should be 200': (res) => res.status === 200,
      'response should have created todo': (res) => res.json().completed === false,
    });
  });
}
```

The first group() block in the create-todo-http-requests.js file runs an uptime check to verify that the API is responding. The other block creates the todo items and verifies that they have been created correctly.

You could run this test with the command `k6 run <test-file>`. Because we want more from this test, we will use a different command: in addition to verifying that the test executes correctly, we want the results of the run saved to a JSON file.

To do this, add the `--out json=<output-file>.json` flag, which tells k6 to write the test results to that file in JSON format. Execute this command:

```shell
k6 run --out json=create-todo-http-request-load.json create-todo-http-requests.js
```

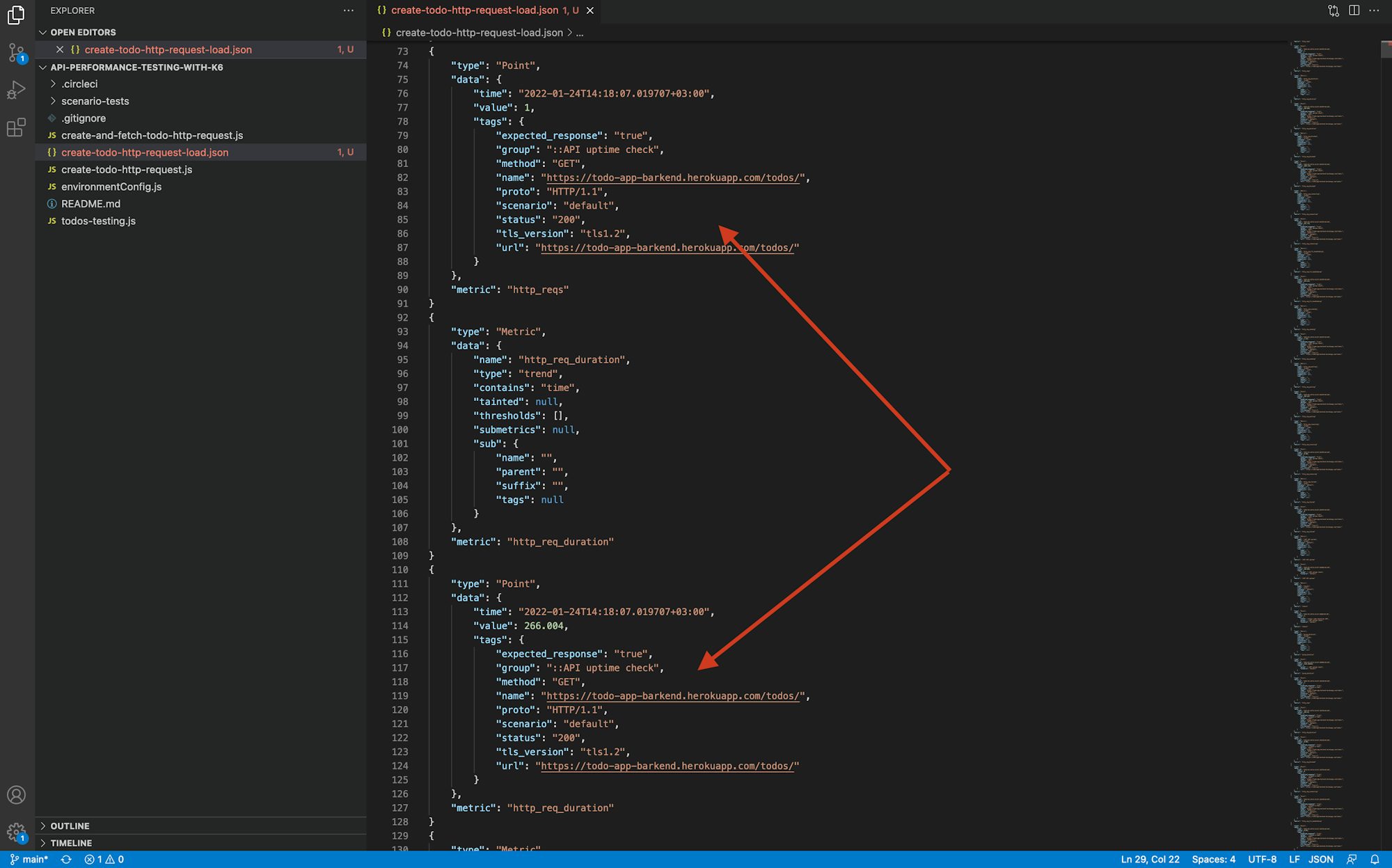

This test executes normally but also creates a file named create-todo-http-request-load.json. This new file contains all the metric data points recorded during the run, including every request and check.

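When you run k6 with an output defined, the header that k6 prints at the start of the run lists that output (for example, `output: json (create-todo-http-request-load.json)`), so you can confirm where the results are being written.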
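If you run this test repeatedly, for example on a schedule, giving each run its own results file makes it easier to compare runs later. Here is a minimal sketch of a small Node.js wrapper (Node.js is already a prerequisite) that calls `k6 run --out json=<file> <script>` with a timestamped file name. The script name `run-k6-with-json.js` and the file naming scheme are hypothetical choices for illustration, and the sketch assumes the `k6` binary is on your `PATH`.

```javascript
// run-k6-with-json.js (hypothetical helper, not part of the tutorial repository)
// Runs a k6 script and writes its results to a JSON file whose name includes
// a timestamp, so each run keeps its own results file.
const { spawnSync } = require("child_process");
const path = require("path");

// Build the arguments for `k6 run --out json=<file> <script>`.
// The output file is created in the current working directory.
function k6RunArgs(script, date) {
  // toISOString() gives e.g. 2024-01-07T22:00:00.000Z; colons and dots are
  // replaced so the timestamp is safe to use in a file name on any OS.
  const stamp = date.toISOString().replace(/[:.]/g, "-");
  const base = path.basename(script, ".js");
  const outFile = `${base}-${stamp}.json`;
  return { outFile, args: ["run", "--out", `json=${outFile}`, script] };
}

// Usage: node run-k6-with-json.js create-todo-http-requests.js
const script = process.argv[2];
if (script) {
  const { outFile, args } = k6RunArgs(script, new Date());
  // Inherit stdio so the usual k6 progress output and summary are shown.
  const result = spawnSync("k6", args, { stdio: "inherit" });
  if (result.error) {
    console.error(`Could not start k6: ${result.error.message}`);
    process.exitCode = 1;
  } else {
    console.log(`Results written to ${outFile}`);
    // Pass k6's exit code through (for example, non-zero on failed thresholds).
    process.exitCode = result.status === null ? 1 : result.status;
  }
}
```

For example, `node run-k6-with-json.js create-todo-http-requests.js` would write a file with a name such as `create-todo-http-requests-2024-01-07T22-00-00-000Z.json`. Passing k6's exit code through keeps the wrapper usable in a CI job.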

After the test finishes, open the output file to review the results.

The JSON output file contains the raw metric data points that k6 aggregates into its end-of-test summary. In it, you can review:

- All requests made when the load tests were running

- Timestamps of the requests

- The time it took for every request to be executed

Using this data, you can find the slowest and fastest responses and identify bottlenecks. Beyond giving you a better understanding of your tests, the output files persist after the run ends. That makes exporting results an efficient way to keep the data for later review and to compare one run with others. Now that you have tried exporting JSON, you can experiment with other outputs, such as CSV (`--out csv=<file>.csv`).
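As a starting point for that analysis, here is a minimal Node.js sketch that reads the JSON output file and reports the fastest and slowest requests along with the average duration. It relies on k6's JSON output format, which writes one JSON object per line; data points have `"type": "Point"`, a `metric` name, and a `data` object holding `time`, `value`, and `tags` (including `url`). The script name `summarize-k6-json.js` is hypothetical, and the sketch looks only at the built-in `http_req_duration` metric.

```javascript
// summarize-k6-json.js (hypothetical name)
// Reads a k6 JSON output file (one JSON object per line) and reports the
// fastest, slowest, and average http_req_duration values.
const fs = require("fs");

// Collect every http_req_duration data point from k6's line-delimited JSON.
function requestDurations(ndjson) {
  return ndjson
    .split("\n")
    .filter((line) => line.trim() !== "")
    .map((line) => JSON.parse(line))
    .filter((entry) => entry.type === "Point" && entry.metric === "http_req_duration")
    .map((entry) => ({
      ms: entry.data.value, // request duration in milliseconds
      time: entry.data.time, // timestamp of the data point
      url: entry.data.tags ? entry.data.tags.url : undefined,
    }));
}

// Return the request count, average duration, and the fastest and slowest
// requests, or null when there is no HTTP request data.
function summarize(points) {
  if (points.length === 0) {
    return null;
  }
  let fastest = points[0];
  let slowest = points[0];
  let total = 0;
  for (const p of points) {
    if (p.ms < fastest.ms) fastest = p;
    if (p.ms > slowest.ms) slowest = p;
    total += p.ms;
  }
  return { count: points.length, avgMs: total / points.length, fastest, slowest };
}

// Usage: node summarize-k6-json.js create-todo-http-request-load.json
const resultsFile = process.argv[2];
if (resultsFile) {
  const summary = summarize(requestDurations(fs.readFileSync(resultsFile, "utf8")));
  console.log(JSON.stringify(summary, null, 2));
}
```

Running the same script against the files from two different runs gives you a quick side-by-side comparison. To focus on one endpoint, you could filter on another tag, such as `group`, before summarizing.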

Scheduling load tests with CircleCI scheduled pipelines

Load tests take time to execute and can tie up a lot of system resources. You may want to run load tests only when you do not have users accessing your system or when you know there will be little to no disruption for application users. An alternative would be to set up a parallel load testing environment that mimics your production environment.

As a CI/CD practitioner, you know that resources will always be a constraint and quality will always be a priority. That makes setting up scheduled pipelines to run your load tests a great option.

Using scheduled pipelines, you can automate the process of running load tests so they happen only at times when you anticipate a limited number of users in your system.

Setting up scheduled pipelines on CircleCI

To configure your pipeline to run on a schedule, open the CircleCI dashboard and go to Projects. Find the project (in this case, api-performance-testing-with-k6), click the ellipsis (…) next to it, and select Project Settings.

Click Triggers.

On the Triggers page, click Add Scheduled Trigger to display the trigger form.

Fill in the form to add a trigger that executes your load tests when you want them to run. For this tutorial, configure the trigger to run once a week, on Sunday at 22:00 UTC. Save the scheduled pipeline.
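If you prefer to script this instead of filling in the form, CircleCI's API v2 has an endpoint for creating schedules (`POST /api/v2/project/{project-slug}/schedule`). The sketch below builds a request body for the same Sunday 22:00 UTC schedule and sends it only when the `CIRCLE_TOKEN` and `PROJECT_SLUG` environment variables are set. Treat it as a sketch under assumptions: confirm the field names against the current CircleCI API reference before relying on it. The `main` branch, the schedule name, and the environment variable names are placeholders chosen for this example.

```javascript
// create-weekly-schedule.js (hypothetical helper)
// Assumptions: CIRCLE_TOKEN holds a CircleCI personal API token, PROJECT_SLUG
// looks like gh/<your-github-username>/api-performance-testing-with-k6, and
// the load tests live on the "main" branch.
const https = require("https");

// Build the schedule body: one run in the 22:00 UTC hour, on Sundays only.
function weeklyScheduleBody(branch) {
  return {
    name: "weekly-load-tests",
    description: "Run the k6 load tests every Sunday at 22:00 UTC",
    "attribution-actor": "current", // attribute runs to the token's user
    parameters: { branch: branch },
    timetable: {
      "per-hour": 1,
      "hours-of-day": [22],
      "days-of-week": ["SUN"],
    },
  };
}

const token = process.env.CIRCLE_TOKEN;
const slug = process.env.PROJECT_SLUG;
if (token && slug) {
  const body = JSON.stringify(weeklyScheduleBody("main"));
  const req = https.request(
    {
      hostname: "circleci.com",
      path: `/api/v2/project/${slug}/schedule`,
      method: "POST",
      headers: {
        "Circle-Token": token,
        "Content-Type": "application/json",
        "Content-Length": Buffer.byteLength(body),
      },
    },
    (res) => {
      // Print the status code and response body returned by the API.
      let data = "";
      res.on("data", (chunk) => (data += chunk));
      res.on("end", () => console.log(res.statusCode, data));
    }
  );
  req.on("error", (err) => console.error(err.message));
  req.end(body);
}
```

Keeping the body in its own function makes the schedule easy to review or change (for example, adding more days to `days-of-week`) without touching the request code.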

You now have a pipeline that runs your load tests once every week. You can edit the schedule as needed. You may forget to run your load tests, but CircleCI will run them for you on the schedule you choose. To learn more about how scheduled pipelines can help you optimize your development time and resource use, read Benefits of scheduling continuous integration pipelines.

Conclusion

In this tutorial, we revisited how to run load tests with k6 and added exporting the test metrics to an external destination. In this case, the destination was a JSON file, but k6 can also export to CSV and to services such as InfluxDB or Prometheus. We also covered creating a scheduled pipeline to execute load tests, choosing when the CircleCI pipeline should run them. I hope you find this information useful for the work your team is doing.

Build on what you have learned by completing the other two tutorials in this series: