Containers vs virtual machines (VMs): What is the difference?

Containers and virtual machines (VMs) are two approaches to virtualization: the creation of virtual, as opposed to physical, versions of computer hardware platforms, storage devices, and network resources.

While containers are isolated processes designed to run a single service or application, VMs emulate entire operating systems, offering a more comprehensive environment for running multiple applications and managing complex workloads.

Developers often use VMs and containers in combination, particularly in CI/CD pipelines. Containers provide portability and consistency across different CI/CD stages, while VMs offer deeper isolation and the ability to replicate complex environments. This hybrid approach allows teams to optimize resource utilization, maintain security, and ensure that applications perform reliably in a variety of scenarios.

In this article, you will learn key differences between containers and VMs, including when you should use each and how to incorporate them into your development pipelines.

What is a container?

A container is a lightweight, self-contained unit that runs one or more processes, keeping them separate from other programs on the same computer. It uses the system’s shared kernel (the part of the operating system [OS] that controls resources and operations) to create this isolation.

Containers operate similarly to regular applications on the host OS, but with restricted access to hardware. While the host OS interacts directly with hardware, containers use virtualized resources. This allows them to run independently while ensuring isolation and portability.

There are two types of containers: application containers and system containers. System containers are much less common now, but were popular before Docker made application containers commonplace in 2015. When discussing containers, people most often mean application containers.

Containers are built from read-only templates called images that are pulled from a central repository to run on a host machine.
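One way to picture the relationship between a read-only image and the containers created from it is a writable layer stacked on top of an immutable base. The sketch below is a simplified in-memory model of that idea, not how any container engine is actually implemented. The file paths, their contents, and the `create_container` helper are all hypothetical.

```python
from collections import ChainMap
from types import MappingProxyType

# A read-only "image": file paths mapped to contents (hypothetical data).
IMAGE = MappingProxyType({
    "/app/main.py": "print('hello')",
    "/etc/app.conf": "debug=false",
})


def create_container(image):
    """Return a container view: a fresh writable layer over the shared image."""
    writable_layer = {}
    # Lookups check the writable layer first, then fall through to the image.
    return ChainMap(writable_layer, image)


web_1 = create_container(IMAGE)
web_2 = create_container(IMAGE)

# The write lands in web_1's own layer; the image and web_2 are untouched.
web_1["/etc/app.conf"] = "debug=true"
```

In this model, every container starts from the same template, and changes made inside one container never leak into the image or into sibling containers.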

Typically, containers run on Linux systems within separate namespaces, ensuring they don’t directly interact with other processes. Communication between containers and other processes happens through a software interface. Containers on other operating systems follow a similar structure.
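On Linux, the namespaces a process belongs to are visible under `/proc/<pid>/ns`. The following is a minimal sketch that lists them for the current process; the `list_namespaces` helper is a hypothetical name, and it simply returns an empty result on systems that do not expose this directory.

```python
import os


def list_namespaces(pid="self"):
    """Map namespace type (e.g. 'pid', 'net') to its kernel identifier.

    Returns an empty dict where /proc/<pid>/ns is unavailable (non-Linux hosts).
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return {}
    for name in entries:
        try:
            # Each entry is a symlink whose target names the namespace.
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Skip entries this process is not allowed to read.
            continue
    return namespaces


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:20} {ident}")
```

Two processes that share a namespace report the same identifier for it. A process inside a container will typically report different identifiers than a process running directly on the host.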

Uses of containers

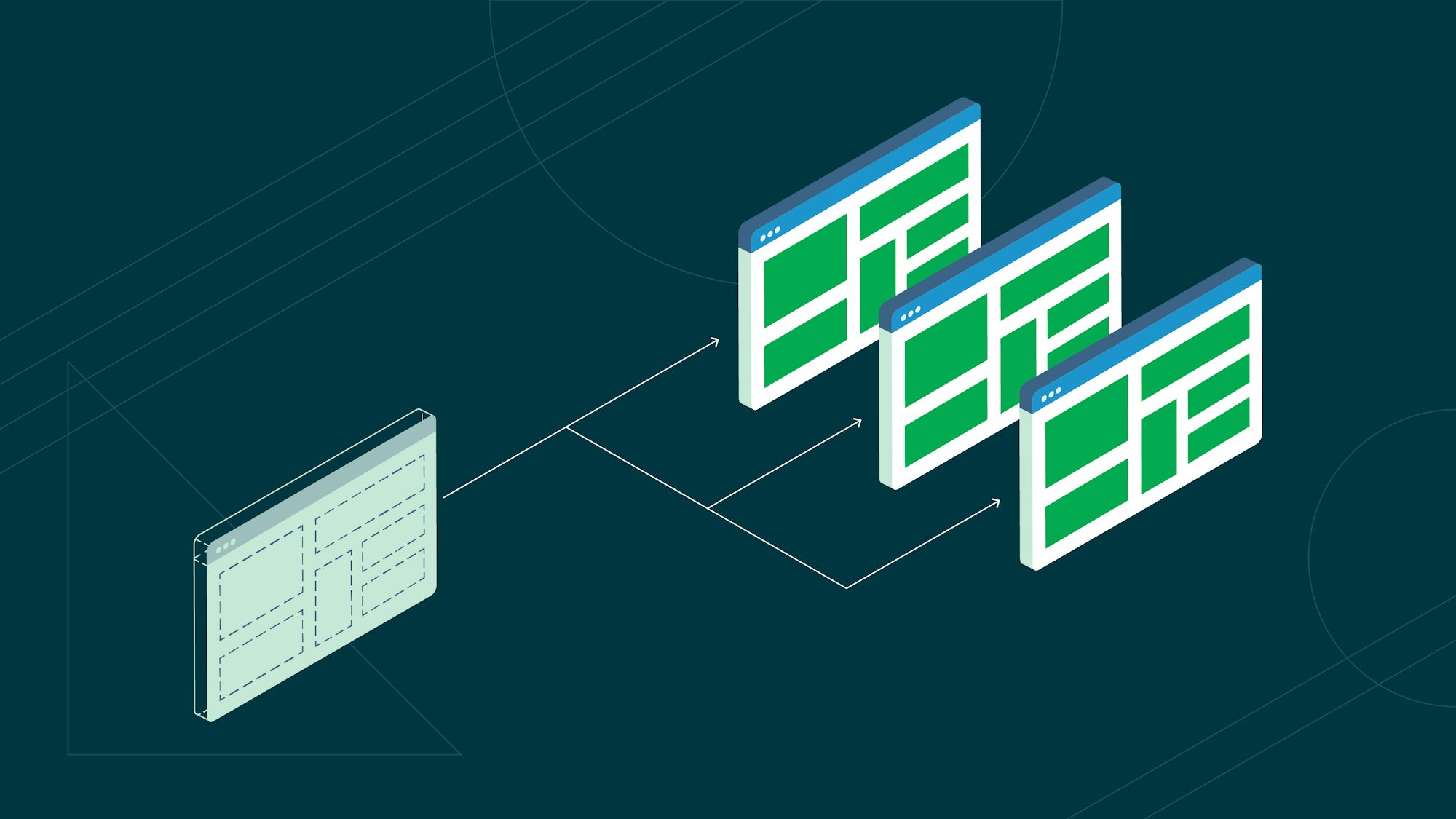

Containers are useful because they are highly portable. Most common container engines run in multiple environments and are lightweight in resource use. Containers package all the dependencies they need, so they run consistently no matter what compatible system you implement them on. That means that once you build an application from one or multiple containers, you can run it on many different systems.

This portability is particularly valuable for large organizations that need to deploy identical software across multiple machines or networks. Containers can also run multiple instances of the same task simultaneously or divide a larger application into smaller, independent microservices.

Beyond deployment, containers are commonly used to create temporary environments for development, testing, and CI/CD pipelines. These ephemeral environments can be quickly spun up and torn down, providing a clean, isolated setup for automated testing, building applications, and performing security checks. This allows developers to test changes in an environment that closely mirrors production without the hassle of managing long-term infrastructure.

Container tools

Containers require an engine to run, and several tools make managing containers easier. Docker, the most popular container platform, uses a daemon to create and manage containers.

Other container tools like Buildah and Kaniko offer a daemonless architecture. A daemonless architecture doesn’t need root access for full functionality, which may offer greater security. However, these tools focus on building images; on their own, they cannot run or manage containers. Developers may prefer tools like Docker and Podman because they let users build images and also run and configure containers.

What is a VM?

Like a container, a VM is a software-based environment used for virtualization. Unlike containers, which share the host system’s operating system, VMs are built on a software layer called a hypervisor. The hypervisor allows each VM to run its own separate operating system, enabling multiple VMs to operate independently on the same physical server.

Because each VM runs its own complete and separate operating system, VMs have a higher level of isolation and are more versatile than containers. They can handle a wider range of tasks, including running applications, services, or even multiple processes at once, as if they were a standalone physical computer.

While both VMs and containers allow multiple instances to run on the same server, VMs require more resources because each instance includes its own OS. Containers, on the other hand, share the host system’s kernel, making them lighter and faster to spin up, but with slightly less isolation.

Uses of VMs

Because VMs create a complete computing environment, you can use them for a wider set of use cases. You can install new software on them and change their code down to the OS level. You can even snapshot the VM in a given state to roll it back to that configuration should there be issues later. Like containers, VMs are separate from other software on the same piece of hardware, making them perfect environments for software testing.

VMs allocate resources below the level of a guest OS, which limits the effect a malicious application can have. Malicious code that compromises one VM is unlikely to affect the host OS or access the machine’s firmware. That keeps other VMs running on the same machine safe. And, because your VM can use a different OS than its host device, you can use VMs to test software in different environments.

VM tools

There are many tools available to build and manage VMs. The most essential are hypervisors, which govern access to the underlying hardware resources (like CPU, memory, and storage) for one or more VMs. There are two types of hypervisors:

- Type 1 hypervisors (also known as bare-metal hypervisors) run directly on the physical hardware without an underlying operating system. Examples include VMware ESXi, Microsoft Hyper-V, and Xen. These are typically used in enterprise data centers and cloud environments because they offer high performance and efficiency.

- Type 2 hypervisors (also called hosted hypervisors) run on top of an existing operating system. Examples include Oracle VirtualBox and VMware Workstation. These are more commonly used on personal computers or in smaller environments for development and testing.

Other tools help users create and manage many VMs simultaneously. For example, cloud platforms like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud offer services that allow developers to quickly spin up and manage large fleets of VMs with ease. Some developers use pre-configured VMs to ensure they are set up correctly and have all the basic programs they need.

Containers vs VMs: Which should you use?

VMs and containers are both powerful technologies with specific use cases. Both provide isolated environments to run processes securely, but they differ in their strengths and purposes.

When to use containers

A containerized application has more direct access to hardware than an application running in a VM. This access makes containers well-suited for lightweight use cases.

Suppose you want to run a single process in multiple separate instances, or run many different processes in isolation from each other. In that case, it makes sense to go with containers. Their small resource footprints make them easy to start up quickly and run at scale.

You can securely examine a container image to know what it will do before creating and running the container itself. That transparency makes containers easy to scan for security flaws, but it also comes with a responsibility. You must scan shared images for vulnerabilities to avoid replicating those vulnerabilities in every system where they are used.

It is also easy to update every instance of a containerized application. To do so, you make an updated version of the container image and create new containers from that updated image. Then you can delete out-of-date and less secure containers without affecting other processes. You can even automate the update process and rely on the container’s fast start-up time to make sure updates happen quickly every time.
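The update process described above can be sketched as a small planning function. This is an illustration only: `plan_update`, the container names, and the `example/web` image tags are hypothetical, and the function builds standard Docker CLI commands without executing them. A real deployment would also carry over ports, volumes, and environment settings, or hand the whole job to an orchestrator.

```python
def plan_update(running, new_image):
    """Return Docker CLI commands that replace containers not yet on new_image.

    running: dict mapping container name -> image reference it was created from.
    """
    stale = [name for name, image in running.items() if image != new_image]
    if not stale:
        return []
    # Pull the updated image once, then replace each out-of-date container.
    commands = [["docker", "pull", new_image]]
    for name in stale:
        commands.append(["docker", "stop", name])
        commands.append(["docker", "rm", name])
        commands.append(["docker", "run", "-d", "--name", name, new_image])
    return commands


# Hypothetical fleet: two containers lag behind the 1.1 image.
fleet = {
    "web-1": "example/web:1.0",
    "web-2": "example/web:1.1",
    "web-3": "example/web:1.0",
}
plan = plan_update(fleet, "example/web:1.1")
```

Containers already created from the new image are left alone, so the plan touches only the instances that need replacing.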

Containers can make some complex tasks simpler. For example, you can build a CI/CD pipeline with a Docker image and a CircleCI config file. The pipeline allows you to quickly test an image and then push it to Docker Hub or another container registry. That lets you move quickly through the CI/CD pipeline from building to deployment.
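To make the build, test, and push flow concrete, here is a rough Python sketch of the same sequence using standard Docker CLI commands. It is not a CircleCI config file; in a real pipeline these stages would be steps in your CI configuration. The `build_test_push` function and its `run` parameter are hypothetical names chosen for illustration.

```python
import subprocess


def build_test_push(image, test_command, run=subprocess.run):
    """Run build, test, and push stages in order, stopping at the first failure.

    Returns the list of stage names that completed successfully.
    """
    stages = [
        ("build", ["docker", "build", "-t", image, "."]),
        ("test", ["docker", "run", "--rm", image, *test_command]),
        ("push", ["docker", "push", image]),
    ]
    completed = []
    for name, command in stages:
        result = run(command)
        if result.returncode != 0:
            break  # Never push an image whose build or tests failed.
        completed.append(name)
    return completed
```

Passing the runner in as a parameter keeps the sketch testable without Docker installed. Calling `build_test_push("example/app:1", ["pytest"])` with the default runner would invoke the real Docker CLI, assuming it is available and the image name is replaced with one you can push.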

Containers also facilitate the use of microservices. Microservices split larger applications into bite-sized processes, giving greater flexibility to users and securely separating those processes. Without containers, you would need to either spin up a separate VM for every microservice, which is an inefficient allocation of resources, or run multiple services on the same VM, which doesn’t have the benefit of isolation.

When to use VMs

Despite their popularity, containers have not replaced VMs completely. In many cases, containers complement the use of VMs. In fact, VMs offer key advantages in certain scenarios. For example, VMs are ideal when you need to run multiple services on different operating systems while sharing the same hardware.

Since each VM has its own complete operating system, VMs also provide better isolation and security, making them less vulnerable to potential attacks. Because containers share the host kernel, malicious code that compromises a container could affect the entire system. With VMs, such risks are largely confined to the specific virtual machine.

VMs are also more flexible for performing system-level tasks. For instance, making OS-level changes, such as modifying kernel parameters with a sysctl command, is straightforward in a VM. In contrast, doing the same within a container requires elevated privileges, which could weaken the container’s security benefits.

For tasks that require complete OS control or more robust isolation, VMs remain a reliable choice, especially in environments where security and system-level access are critical.

Using containers and VMs in CI/CD pipelines

A major benefit of using VMs and containers in CI/CD pipelines is the standardization of environments. This allows multiple developers to contribute code to a project without encountering environment-related issues. Both VMs and containers ensure consistency, which minimizes errors due to configuration differences.

You can also run containers inside VMs to leverage the benefits of both: containers ensure consistency across environments, while VMs provide control over resources below the OS level. Cloning build environments in this way lets you increase the amount of automation in your pipelines, reducing the number of error-prone manual steps in your development process.

Containers are particularly useful in CI/CD automation because they make it easy to move and deploy code between machines. You can build a piece of code as a container image and push that image to a repository or registry, where other machines can pull it. Companies like Dollar Shave Club integrate containers in CI/CD to automate their testing and deployment pipeline for maximum efficiency.

CI/CD and containers also work well for vulnerability management. For example, you can use containers as part of an automated, ongoing process of frequent and incremental patch testing. That can be more efficient than releasing extensive updates with less well-understood effects on all parts of your system. Managing vulnerabilities becomes fast, smooth, and distributable among team members.

VMs and containers contribute to a CI/CD pipeline differently. One way they can work together is by using a VM as a machine executor to run more demanding containers. People already using a Docker executor may need to migrate from Docker to a VM-based machine executor. The machine executor’s more isolated environment and greater access to system resources can make this a worthwhile effort.

Conclusion

Containers and virtual machines are essential tools for virtualizing your applications, each offering unique strengths based on your needs. Rather than choosing one or the other, you can use both to create a more flexible, efficient, and secure infrastructure.

By combining the security of virtual machines and the efficiency of containers, you can take advantage of all the benefits of virtualization. Using VMs to secure your applications and containers to move and deploy code between different machines combines the strengths of both technologies.

Ready to improve your build, test, and deployment workflows with the power of virtualization? Sign up for a free CircleCI account and start leveraging VMs and containers in your pipelines today.