Continuous integration for a production-ready Dockerized Django application

Software Developer

Continuous integration has become a widely accepted practice for software projects. As more technologies are introduced in both continuous integration and software development, developers are looking for practical ways to benefit from them. Basic tutorials that cover toy examples are not always enough for real-life practitioners. As an actual user of Django, Docker, and CircleCI, I found this a real pain point. That is why I wrote this tutorial.

In this guide, you will learn from a fellow practitioner how to set up a continuous integration pipeline for a production-ready Dockerized Django 3.2 application.

So that you can get started easily, I created a demo project for you to clone from GitHub.

When you have finished the tutorial, every push to your project's GitHub repo will automatically trigger a build and upload coverage reports to a code-coverage cloud provider like Codecov.io. You will also learn how to use CircleCI's parallelism to run your tests faster and shorten your iteration cycle.

Prerequisites

To get the most from this tutorial, you will need:

- A Dockerized Django 3.2 demo project in a GitHub repository.

- A GitHub account.

- A CircleCI account.

- Docker Desktop installed on your local machine. You can follow Docker’s own tutorial for Windows or macOS.

- Git installed on your local machine. You can follow GitHub’s own tutorial to install it.

- A Codecov account.

- A Docker Hub account.

Our tutorials are platform-agnostic, but use CircleCI as an example. If you don't have a CircleCI account, sign up for a free one.

Because every Django project is different, I will lead you through the steps of setting up a continuous integration pipeline using the demo project.

I recommend that you follow this tutorial from start to finish twice. The first time, use the demo project. The second time, use your own Django project, repeating the same steps conceptually but tweaking the process as necessary.

I will provide some tips for tweaking the steps for your own project later on in the tutorial. For now, focus on following along with the demo project, so that you develop an intuition for how the whole process works from end to end.

Cloning the demo project

In this step, you will clone the codebase with git and make sure the tests run locally. Essentially, this codebase follows the classic seven-part tutorial in Django's documentation, with a few differences.

The main differences are:

- The demo project keeps function-based views instead of the class-based views used in the tutorial.

- The demo project has a config folder with a settings sub-folder that holds base.py, local.py, and production.py instead of a single settings.py.

- The demo project has a dockerized_django_demo_circleci folder with a users sub-folder.

Git clone the codebase:

$ git clone https://github.com/CIRCLECI-GWP/dockerized-django-demo.git

Here is the output that results:

[secondary_label Output]

Cloning into 'dockerized-django-demo'...

remote: Enumerating objects: 325, done.

remote: Counting objects: 100% (325/325), done.

remote: Compressing objects: 100% (246/246), done.

remote: Total 325 (delta 83), reused 293 (delta 63), pack-reused 0

Receiving objects: 100% (325/325), 663.35 KiB | 679.00 KiB/s, done.

Resolving deltas: 100% (83/83), done.

Now that you have cloned the demo project, you are ready to test the local version of the Dockerized demo project.

Running the test locally

In this step, you will make sure the tests run successfully on your local machine.

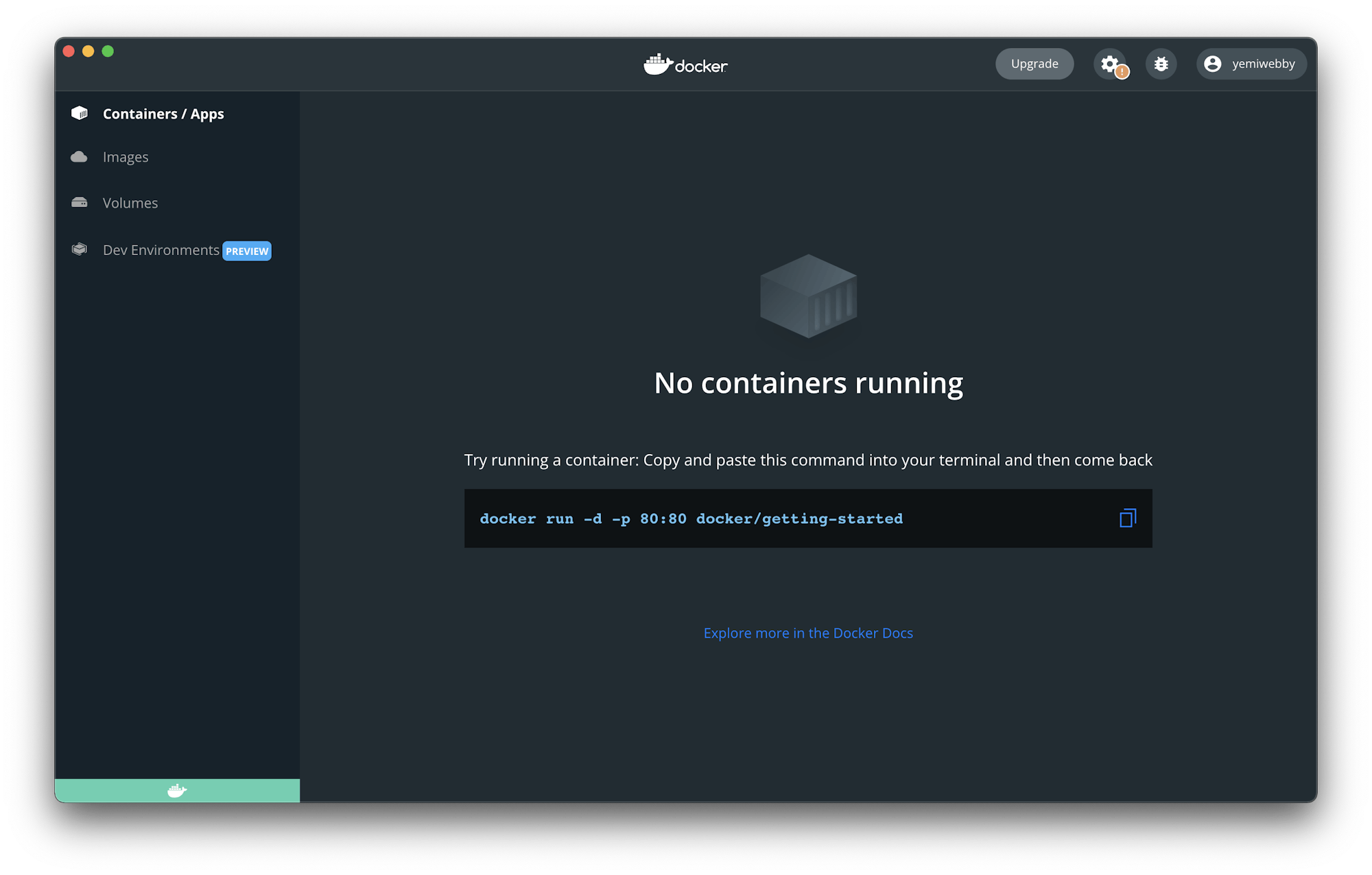

Launch your Docker Desktop app. Refer to this tutorial if you need to review how.

Open a bash shell in the Django Docker container so you can run the tests:

$ cd dockerized-django-demo

$ docker-compose -f local.yml run web_django bash

The output should look like this, ending at a bash prompt inside the Django Docker container:

[secondary_label Output]

Creating network "dockerized-django-demo_default" with the default driver

Creating dockerized-django-demo_db_postgres_1 ... done

Creating dockerized-django-demo_web_django_run ... done

Going to use psycopg2 to connect to postgres

psycopg2 successfully connected to postgres

PostgreSQL is available

root@abc77c0122b3:/code#

Now, run the tests.

root@abc77c0122b3:/code# python3 manage.py test --keepdb

The output will be:

[secondary_label Output]

Using existing test database for alias 'default'...

System check identified no issues (0 silenced).

..........

----------------------------------------------------------------------

Ran 10 tests in 0.454s

OK

Preserving test database for alias 'default'...

This output means that all your tests passed. You should get the same result when you push the code to CircleCI.

To exit the bash shell, type exit:

root@abc77c0122b3:/code# exit

You are now back in your host OS:

[secondary_label Output]

exit

$

To shut down the Docker containers properly:

$ docker-compose -f local.yml down --remove-orphans

Here is the output:

[secondary_label Output]

Stopping dockerized-django-demo_db_postgres_1 ... done

Removing dockerized-django-demo_web_django_run_8eeefb5e1d1a ... done

Removing dockerized-django-demo_db_postgres_1 ... done

Removing network dockerized-django-demo_default

You can now safely stop Docker Desktop.

By now, your Django project and test cases should work to your satisfaction. It is now time for you to push the code to GitHub.

Pushing the code to your own GitHub repository

If you have been using the demo project and have created your own GitHub repo, rename the remote you cloned the code from to upstream. Then add your own GitHub repo as the origin remote.

Replace all mentions of greendeploy-io/test-ddp with your repo's actual org-name/repo-name.

$ git remote rename origin upstream

$ git remote add origin git@github.com:greendeploy-io/test-ddp.git

$ git push -u origin main

[secondary_label Output]

Enumerating objects: 305, done.

Counting objects: 100% (305/305), done.

Delta compression using up to 10 threads

Compressing objects: 100% (222/222), done.

Writing objects: 100% (305/305), 658.94 KiB | 2.80 MiB/s, done.

Total 305 (delta 70), reused 297 (delta 67), pack-reused 0

remote: Resolving deltas: 100% (70/70), done.

To github.com:greendeploy-io/test-ddp.git

* [new branch] main -> main

Branch 'main' set up to track remote branch 'main' from 'origin'.

Adding the CircleCI configuration file

In this step, you will create a CircleCI configuration file that defines a continuous integration pipeline for your project. To begin, create a folder named .circleci at the root of your project and, inside it, a file named config.yml. Open the newly created file and enter this content:

# Python CircleCI 2.0 configuration file
#
# Check https://circleci.com/docs/language-python/ for more details
#
version: 2.1

# adding dockerhub auth because dockerhub changed their policy
# See https://discuss.circleci.com/t/authenticate-with-docker-to-avoid-impact-of-nov-1st-rate-limits/37567/23
# and https://support.circleci.com/hc/en-us/articles/360050623311-Docker-Hub-rate-limiting-FAQ
docker-auth: &docker-auth
  auth:
    username: $DOCKERHUB_USERNAME
    password: $DOCKERHUB_PAT

orbs:
  codecov: codecov/codecov@3.2.2

jobs:
  build:
    docker:
      # specify the version you desire here
      # use the `-browsers` suffix for selenium tests, e.g. `3.7.7-browsers`
      - image: cimg/python:3.8.12
        # use YAML merge
        # https://discuss.circleci.com/t/updated-authenticate-with-docker-to-avoid-impact-of-nov-1st-rate-limits/37567/35?u=kimsia
        <<: *docker-auth
        environment:
          DATABASE_URL: postgresql://root@localhost/circle_test?sslmode=disable
          USE_DOCKER: no
      # Specify service dependencies here if necessary
      # CircleCI maintains a library of pre-built images
      # documented at https://circleci.com/docs/circleci-images/
      - image: cimg/postgres:14.1 # database image for service container available at `localhost:<port>`
        # use YAML merge
        # https://discuss.circleci.com/t/updated-authenticate-with-docker-to-avoid-impact-of-nov-1st-rate-limits/37567/35?u=kimsia
        <<: *docker-auth
        environment: # environment variables for database
          POSTGRES_USER: root
          POSTGRES_DB: circle_test
    working_directory: ~/repo
    # can check if resource is suitable at resources tab in builds
    resource_class: large
    # turn on parallelism to speed up
    parallelism: 4
    steps: # a collection of executable commands
      # add deploy key when needed esp when requirements point to github url
      # - add_ssh_keys:
      #     fingerprints:
      #       - "ab:cd:ef..."
      - checkout # special step to check out source code to the working directory
      # using dockerize to wait for dependencies
      - run:
          name: install dockerize
          command: wget https://github.com/jwilder/dockerize/releases/download/$DOCKERIZE_VERSION/dockerize-linux-amd64-$DOCKERIZE_VERSION.tar.gz && sudo tar -C /usr/local/bin -xzvf dockerize-linux-amd64-$DOCKERIZE_VERSION.tar.gz && rm dockerize-linux-amd64-$DOCKERIZE_VERSION.tar.gz
          environment:
            DOCKERIZE_VERSION: v0.4.0
      # the actual wait for database
      - run:
          name: Wait for db
          command: dockerize -wait tcp://localhost:5432 -timeout 1m
      - restore_cache: # restores saved dependency cache if the Branch key template or requirements.txt files have not changed since the previous run
          key: deps1-{{ .Branch }}-{{ checksum "requirements/base.txt" }}-{{ checksum "requirements/local.txt" }}
      - run: # install and activate virtual environment with pip
          command: |
            python3 -m venv venv
            . venv/bin/activate
            pip install --upgrade setuptools && pip install wheel
            pip install --upgrade pip==22.0.4
            pip install --upgrade pip-tools
            pip-sync requirements/base.txt requirements/local.txt
      - save_cache: # special step to save dependency cache
          key: deps1-{{ .Branch }}-{{ checksum "requirements/base.txt" }}-{{ checksum "requirements/local.txt" }}
          paths:
            - "venv"
      - run:
          name: run collectstatic
          command: |
            . venv/bin/activate
            python3 manage.py collectstatic --noinput
      - run: # run tests
          name: run tests using manage.py
          command: |
            # get test files while ignoring __init__ files
            TESTFILES=$(circleci tests glob "*/tests/*.py" | sed 's/\S\+__init__.py//g' | sed 's/\S\+factories.py//g')
            echo $TESTFILES | tr ' ' '\n' | sort | uniq > circleci_test_files.txt
            TESTFILES=$(circleci tests split --split-by=timings circleci_test_files.txt | tr "/" "." | sed 's/\.py//g')
            . venv/bin/activate
            # coverage's --parallel-mode writes a separate .coverage.<suffix> data file per process
            # usage: https://docs.djangoproject.com/en/3.2/topics/testing/advanced/#integration-with-coverage-py
            # add `--verbosity=3` between $TESTFILES --keepdb if need to debug
            coverage run --parallel-mode manage.py test --failfast $TESTFILES --keepdb
          # name: run tests using pytest
          # command: |
          #   . venv/bin/activate
          #   pytest -c pytest.ini -x --cov-report xml --cov-config=.coveragerc --cov
      - store_test_results:
          path: test-results
      - store_artifacts:
          path: test-results
          destination: tr1
      # save coverage file to workspace
      - persist_to_workspace:
          root: ~/repo
          paths:
            - .coverage*
  fan-in_coverage:
    docker:
      # specify the version you desire here
      # use the `-browsers` suffix for selenium tests, e.g. `3.7.7-browsers`
      - image: cimg/python:3.8.12
        # use YAML merge
        # https://discuss.circleci.com/t/updated-authenticate-with-docker-to-avoid-impact-of-nov-1st-rate-limits/37567/35?u=kimsia
        <<: *docker-auth
    working_directory: ~/repo
    resource_class: small
    parallelism: 1
    steps:
      - checkout
      - attach_workspace:
          at: ~/repo
      - restore_cache: # restores saved dependency cache if the Branch key template or requirements.txt files have not changed since the previous run
          key: deps1-{{ .Branch }}-{{ checksum "requirements/base.txt" }}-{{ checksum "requirements/local.txt" }}
      - run: # install and activate virtual environment with pip
          command: |
            python3 -m venv venv
            . venv/bin/activate
            pip install --upgrade setuptools && pip install wheel
            # because of https://github.com/jazzband/pip-tools/issues/1617#issuecomment-1124289479
            pip install --upgrade pip==22.0.4
            pip install --upgrade pip-tools
            pip-sync requirements/base.txt requirements/local.txt
      - save_cache: # special step to save dependency cache
          key: deps1-{{ .Branch }}-{{ checksum "requirements/base.txt" }}-{{ checksum "requirements/local.txt" }}
          paths:
            - "venv"
      - run:
          name: combine coverage and generate XML report
          command: |
            . venv/bin/activate
            coverage combine
            # at this point, if combine succeeded, we should see a combined .coverage file
            ls -lah .coverage
            # this will generate a coverage.xml file
            coverage xml
      - codecov/upload:
          # xtra_args: '-F'
          upload_name: "${CIRCLE_BUILD_NUM}"

workflows:
  main:
    jobs:
      - build
      - fan-in_coverage:
          requires:
            - build

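To authenticate with Docker Hub when pulling images, the config defines a docker-auth mapping with a YAML anchor (&docker-auth) at the top of the file. It then merges that mapping into each image entry with <<: *docker-auth. The credentials come from the DOCKERHUB_USERNAME and DOCKERHUB_PAT environment variables, which you will add to the project settings later in the tutorial.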
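The <<: *docker-auth lines rely on YAML merge keys. Every key in the mapping anchored as &docker-auth is copied into the image entry, and any key written explicitly in that entry takes precedence. As a rough illustration, the sketch below models that behavior with plain Python dictionaries. It does not parse YAML, and merge_anchor is a hypothetical helper. The $DOCKERHUB_... strings stay literal because CircleCI, not YAML, resolves them from environment variables.

```python
"""Toy model of YAML merge keys (`<<: *docker-auth`); not a YAML parser."""
from typing import Any, Dict

# The mapping anchored as &docker-auth in config.yml. The values stay literal
# strings here; CircleCI substitutes the environment variables at run time.
DOCKER_AUTH: Dict[str, Any] = {
    "auth": {
        "username": "$DOCKERHUB_USERNAME",
        "password": "$DOCKERHUB_PAT",
    }
}


def merge_anchor(anchor: Dict[str, Any], explicit: Dict[str, Any]) -> Dict[str, Any]:
    """Return the mapping a YAML merge key produces (hypothetical helper).

    Keys from the anchored mapping are copied in first, then keys written
    explicitly in the current mapping override them. The merge is shallow.
    """
    return {**anchor, **explicit}


# The two image entries of the build job, after merging.
python_image = merge_anchor(
    DOCKER_AUTH,
    {
        "image": "cimg/python:3.8.12",
        "environment": {
            "DATABASE_URL": "postgresql://root@localhost/circle_test?sslmode=disable",
        },
    },
)
postgres_image = merge_anchor(
    DOCKER_AUTH,
    {
        "image": "cimg/postgres:14.1",
        "environment": {"POSTGRES_USER": "root", "POSTGRES_DB": "circle_test"},
    },
)

if __name__ == "__main__":
    for entry in (python_image, postgres_image):
        print(entry["image"], "authenticates as", entry["auth"]["username"])
```

Writing the credentials once under an anchor keeps both image entries in sync. If the token ever changes, only the environment variable needs updating.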

Next, the config pulls in the Codecov orb from the CircleCI orb registry. This orb uploads your coverage reports to Codecov without complex configuration.

Two separate jobs build the project, run the tests on CircleCI, and upload the coverage reports to Codecov:

- build

- fan-in_coverage

The workflow ensures that the build job finishes completely before fan-in_coverage begins. This ordering matters because fan-in_coverage combines and uploads the coverage files that build produces.
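The Wait for db step in the build job runs dockerize -wait tcp://localhost:5432 -timeout 1m. That command blocks until the PostgreSQL service container accepts TCP connections or one minute passes. Below is a minimal Python sketch of the same idea. It is not how dockerize is implemented, and wait_for_port is a hypothetical helper.

```python
"""Minimal "wait for a TCP port" loop, similar in spirit to
`dockerize -wait tcp://localhost:5432 -timeout 1m` (not dockerize's code)."""
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0, interval: float = 1.0) -> bool:
    """Poll host:port until it accepts a TCP connection or `timeout` seconds pass.

    Returns True as soon as a connection succeeds, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # Raises OSError (e.g. ConnectionRefusedError) while nothing is listening.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    # Same target and timeout as the "Wait for db" step.
    ok = wait_for_port("localhost", 5432, timeout=60)
    print("PostgreSQL port is open" if ok else "Timed out waiting for PostgreSQL")
```

The deadline uses time.monotonic(), so system clock adjustments cannot stretch or cut short the wait.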

Job structure and workflow

As described in the previous section, there are two jobs: build and fan-in_coverage. I have collapsed them here to make it easier to review.

jobs:
  build: ...
  fan-in_coverage: ...

workflows:
  main:
    jobs:
      - build
      - fan-in_coverage:
          requires:
            - build

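Notice that fan-in_coverage requires the build job, uses the coverage files that build produces, and uploads the combined coverage report to Codecov. Splitting the work into two jobs lets build run across several parallel containers. A single fan-in_coverage container then combines their coverage data into one report before uploading it.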
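To see why the coverage data has to be fanned in, consider a toy model. Each parallel container records, per source file, the set of lines its share of the tests executed. No single container sees the whole suite, so a report from any one of them understates coverage; only the merged data gives the real figure. The file names and line numbers below are hypothetical. The code illustrates the idea behind coverage combine, not coverage.py's actual data files.

```python
"""Toy model of fanning in coverage data from parallel containers.

Each container's data maps a source file to the set of line numbers it executed.
This mimics the idea behind `coverage combine`, not coverage.py's data format.
"""
from collections import defaultdict
from typing import DefaultDict, Dict, List, Set

CoverageData = Dict[str, Set[int]]


def combine(per_container: List[CoverageData]) -> CoverageData:
    """Union the executed lines of every container, file by file."""
    combined: DefaultDict[str, Set[int]] = defaultdict(set)
    for data in per_container:
        for filename, lines in data.items():
            combined[filename] |= lines
    return dict(combined)


def percent_covered(executed: CoverageData, statements: CoverageData) -> float:
    """Executed statements as a percentage of all statements."""
    total = sum(len(lines) for lines in statements.values())
    hit = sum(len(executed.get(name, set()) & lines) for name, lines in statements.items())
    return 100.0 * hit / total if total else 100.0


if __name__ == "__main__":
    # Hypothetical statement lines and per-container results for two files.
    statements = {"polls/models.py": {1, 2, 3, 4}, "polls/views.py": {1, 2, 3, 4, 5, 6}}
    node_0 = {"polls/models.py": {1, 2}, "polls/views.py": {1, 2, 3}}
    node_1 = {"polls/models.py": {3}, "polls/views.py": {4, 5}}
    print(f"node 0 alone: {percent_covered(node_0, statements):.0f}%")  # node 0 alone: 50%
    print(f"node 1 alone: {percent_covered(node_1, statements):.0f}%")  # node 1 alone: 30%
    merged = combine([node_0, node_1])
    print(f"combined: {percent_covered(merged, statements):.0f}%")  # combined: 80%
```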

Parallelism

Why use parallelism? The answer is simple: parallelism speeds up builds by running different subsets of the tests on several containers at the same time.

# turn on parallelism to speed up

parallelism: 4
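The job finishes only when its slowest container finishes, so parallelism pays off when the tests are spread evenly. The sketch below illustrates this with a simple greedy rule: hand the slowest remaining test file to the least-loaded container. This rule is an assumption for illustration, not CircleCI's actual splitting algorithm. The file names and durations are made up, and split_by_timings and wall_clock are hypothetical helpers.

```python
"""Illustrative greedy split of test files across parallel containers by timing.

Not CircleCI's algorithm; it only shows why balancing by timing shortens the build.
"""
import heapq
from typing import Dict, List, Tuple


def split_by_timings(timings: Dict[str, float], nodes: int) -> List[List[str]]:
    """Give each file to the container with the least total time so far,
    handling the slowest files first (longest-processing-time-first heuristic)."""
    buckets: List[List[str]] = [[] for _ in range(nodes)]
    # Min-heap of (accumulated seconds, container index).
    heap: List[Tuple[float, int]] = [(0.0, i) for i in range(nodes)]
    heapq.heapify(heap)
    for name, seconds in sorted(timings.items(), key=lambda kv: (-kv[1], kv[0])):
        load, index = heapq.heappop(heap)
        buckets[index].append(name)
        heapq.heappush(heap, (load + seconds, index))
    return buckets


def wall_clock(timings: Dict[str, float], buckets: List[List[str]]) -> float:
    """The job finishes when its slowest container finishes."""
    return max((sum(timings[n] for n in bucket) for bucket in buckets), default=0.0)


if __name__ == "__main__":
    # Hypothetical per-file test durations in seconds.
    timings = {
        "polls/tests/test_views.py": 40.0,
        "polls/tests/test_models.py": 30.0,
        "users/tests/test_views.py": 20.0,
        "users/tests/test_forms.py": 10.0,
        "users/tests/test_models.py": 10.0,
        "users/tests/test_admin.py": 10.0,
    }
    # Prints 120s for 1 container and 40s for 4 containers.
    for nodes in (1, 4):
        buckets = split_by_timings(timings, nodes)
        print(f"{nodes} container(s): {wall_clock(timings, buckets):.0f}s")
```

In this example, 4 containers cannot go below 40 seconds, because the slowest single file takes that long. Splitting a very slow test file is often what unlocks further speedups.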

To use parallelism, you need to split up the tests. To split them up, CircleCI needs to know the full list of test files.

Parallelism by splitting up tests

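The first line finds all the relevant test files, skipping __init__.py and factories.py. The second line writes the sorted, de-duplicated list into circleci_test_files.txt. The third line asks CircleCI for this container's share of that list, split by timing data, and converts each file path into the dotted module path that manage.py test expects. Splitting by timings depends on timing data that earlier runs stored with store_test_results; when CircleCI cannot find any, it falls back to splitting by file name.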

TESTFILES=$(circleci tests glob "*/tests/*.py" | sed 's/\S\+__init__.py//g' | sed 's/\S\+factories.py//g')

echo $TESTFILES | tr ' ' '\n' | sort | uniq > circleci_test_files.txt

TESTFILES=$(circleci tests split --split-by=timings circleci_test_files.txt | tr "/" "." | sed 's/\.py//g')

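Then, each container runs its share of the tests under coverage.py in parallel mode. In parallel mode, each container writes its coverage data to a uniquely named file that coverage combine can merge later. If you need to debug, add the --verbosity=3 flag.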

# add `--verbosity=3` between $TESTFILES --keepdb if need to debug

coverage run --parallel-mode manage.py test --failfast $TESTFILES --keepdb
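The file-selection part of the shell pipeline can be easier to follow in Python. The sketch below mirrors it in four steps:

- Find *.py files in a tests folder one level below the project root.
- Drop __init__.py and factories.py.
- Sort and de-duplicate the list.
- Convert each path into the dotted label that manage.py test accepts.

CircleCI sets CIRCLE_NODE_INDEX and CIRCLE_NODE_TOTAL in parallel jobs. Here a simple round-robin rule over those values stands in for circleci tests split. That rule is an assumption: the real command can balance files by timing data. The function names are hypothetical.

```python
"""Python mirror of the test-file selection in the build job (illustrative only)."""
import os
from pathlib import Path, PurePosixPath
from typing import List

# Files the shell pipeline filters out with sed.
EXCLUDED = {"__init__.py", "factories.py"}


def find_test_files(root: str = ".") -> List[str]:
    """Like `circleci tests glob "*/tests/*.py"` plus the sed filters and `sort | uniq`."""
    base = Path(root)
    found = {
        path.relative_to(base).as_posix()
        for path in base.glob("*/tests/*.py")
        if path.name not in EXCLUDED
    }
    return sorted(found)


def to_test_label(path: str) -> str:
    """Turn app/tests/test_x.py into app.tests.test_x, like the `tr` and `sed` steps."""
    return ".".join(PurePosixPath(path).with_suffix("").parts)


def select_for_node(files: List[str], index: int, total: int) -> List[str]:
    """Round-robin stand-in for `circleci tests split` (hypothetical rule)."""
    return files[index::total]


if __name__ == "__main__":
    # Default to a single container when run outside CircleCI.
    index = int(os.environ.get("CIRCLE_NODE_INDEX", "0"))
    total = int(os.environ.get("CIRCLE_NODE_TOTAL", "1"))
    mine = select_for_node(find_test_files("."), index, total)
    # Space-separated labels, in the form `manage.py test` accepts.
    print(" ".join(to_test_label(f) for f in mine))
```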

Storing artifacts and files

Finally, the config tells CircleCI where to store the test results and artifacts. It also persists the .coverage* files to the workspace so that fan-in_coverage can use them:

- store_test_results:
    path: test-results
- store_artifacts:
    path: test-results
    destination: tr1
# save coverage file to workspace
- persist_to_workspace:
    root: ~/repo
    paths:
      - .coverage*
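The .coverage* entry under persist_to_workspace is a glob. Assuming it behaves like Python's fnmatch for a simple pattern like this, the sketch below shows which top-level file names it would pick up. The example names are hypothetical, and persisted is a hypothetical helper. Note that the pattern would also match a .coveragerc configuration file, if the repository has one.

```python
"""Which files in ~/repo would `.coverage*` persist? Assumes fnmatch-like globbing."""
from fnmatch import fnmatchcase
from typing import Iterable, List

PATTERN = ".coverage*"


def persisted(names: Iterable[str], pattern: str = PATTERN) -> List[str]:
    """Return the file names the workspace pattern matches, sorted."""
    return sorted(name for name in names if fnmatchcase(name, pattern))


if __name__ == "__main__":
    # Hypothetical top-level contents of ~/repo after one build container finishes.
    files = [
        ".coverage.runner-0.12345.678901",
        ".coveragerc",
        "coverage.xml",
        "manage.py",
        "circleci_test_files.txt",
    ]
    print(persisted(files))
    # ['.coverage.runner-0.12345.678901', '.coveragerc']
```

Persisting a .coveragerc is harmless in this setup. fan-in_coverage checks out the same commit, so the attached copy is identical to the one already in its working directory.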

Commit all the changes and push them to your project repository on GitHub.

Connecting your project to CircleCI

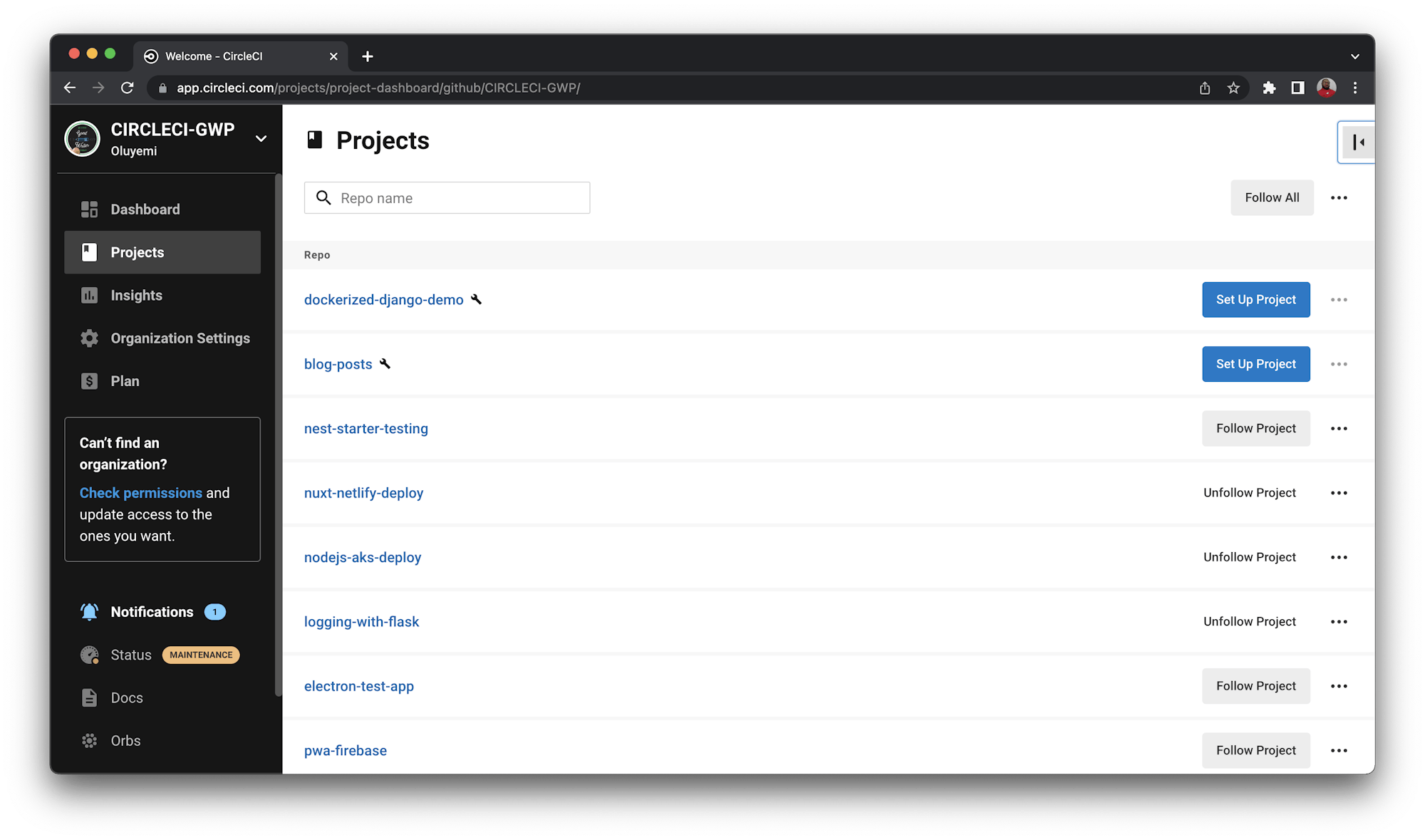

To connect your project to CircleCI, log in to your CircleCI account. If you signed up with your GitHub account, all your repositories will be listed on your projects dashboard. Locate your project in the list; in this case, it is dockerized-django-demo. Click Set Up Project.


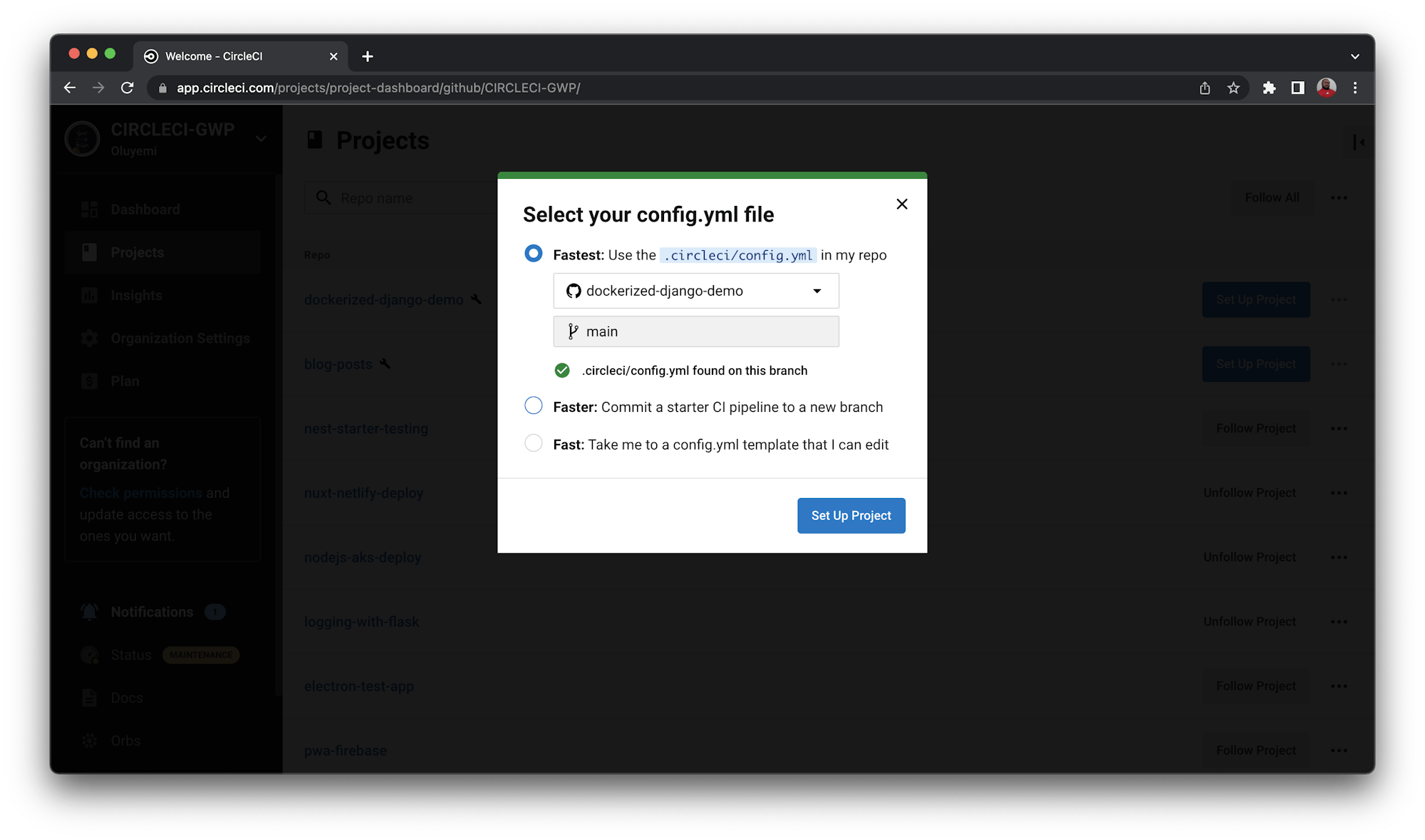

You will be prompted to select the .circleci/config.yml file within your project. Then, enter the name of the branch and click Set Up Project.

Your workflow will start and build successfully.

Adding Docker Hub credentials to project settings as environment variables

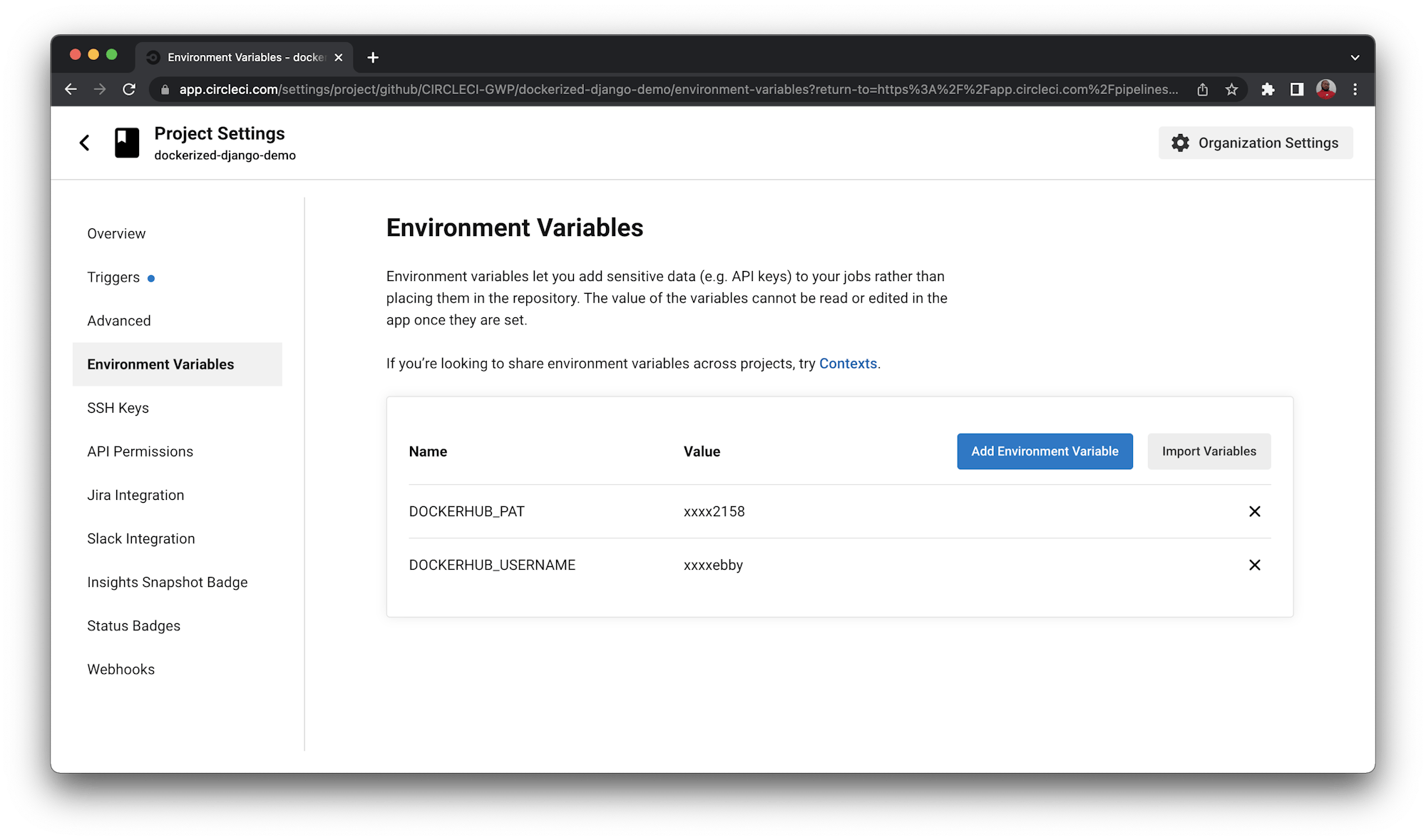

Next, you need to add your Docker Hub username and personal access token as environment variables into the project settings.

Go to the CircleCI project page for your GitHub repo and click Project Settings. Select Environment Variables from the left menu sidebar.

Create two environment variables: DOCKERHUB_USERNAME, set to your Docker Hub username, and DOCKERHUB_PAT, set to a personal access token from your Docker Hub account.

When you are done, every push to your GitHub repository, and every pull request, should trigger a build on CircleCI.

Because the demo project is a public repo, the CircleCI project build page and Codecov coverage page are public also.

Check out the CircleCI project build page for the demo project to learn how your build page should look once you have completed all the steps.

Conclusion

In this article, you cloned a production-ready, Dockerized Django 3.2 demo project and set up continuous integration for it on CircleCI. Now you can do the same for your own Dockerized Django 3.2 project. Even more importantly, in the second half of the article, I explained in detail what should go into the config.yml file.

As mentioned earlier, I recommend that you use the demo project on your first pass through the tutorial. The second time, when using your own project, replace every mention of the demo Django project with your own Django project.

I emphasized certain parts of the explanation so that you know how they help speed up your CI/CD builds. Using parallelism speeds up builds without sacrificing key pieces like Docker and uploading to code coverage providers. Note that the setup here is for continuous delivery, not continuous deployment. Visit this page to learn more about the differences.