Serverless vs containers: Which is best for your application

Senior Technical Content Marketing Manager

To keep ahead of the curve, many organizations are evolving their technical processes to accelerate IT infrastructure development. Fast and robust deployments to the latest platforms are key to achieving the low lead times that enable this evolution.

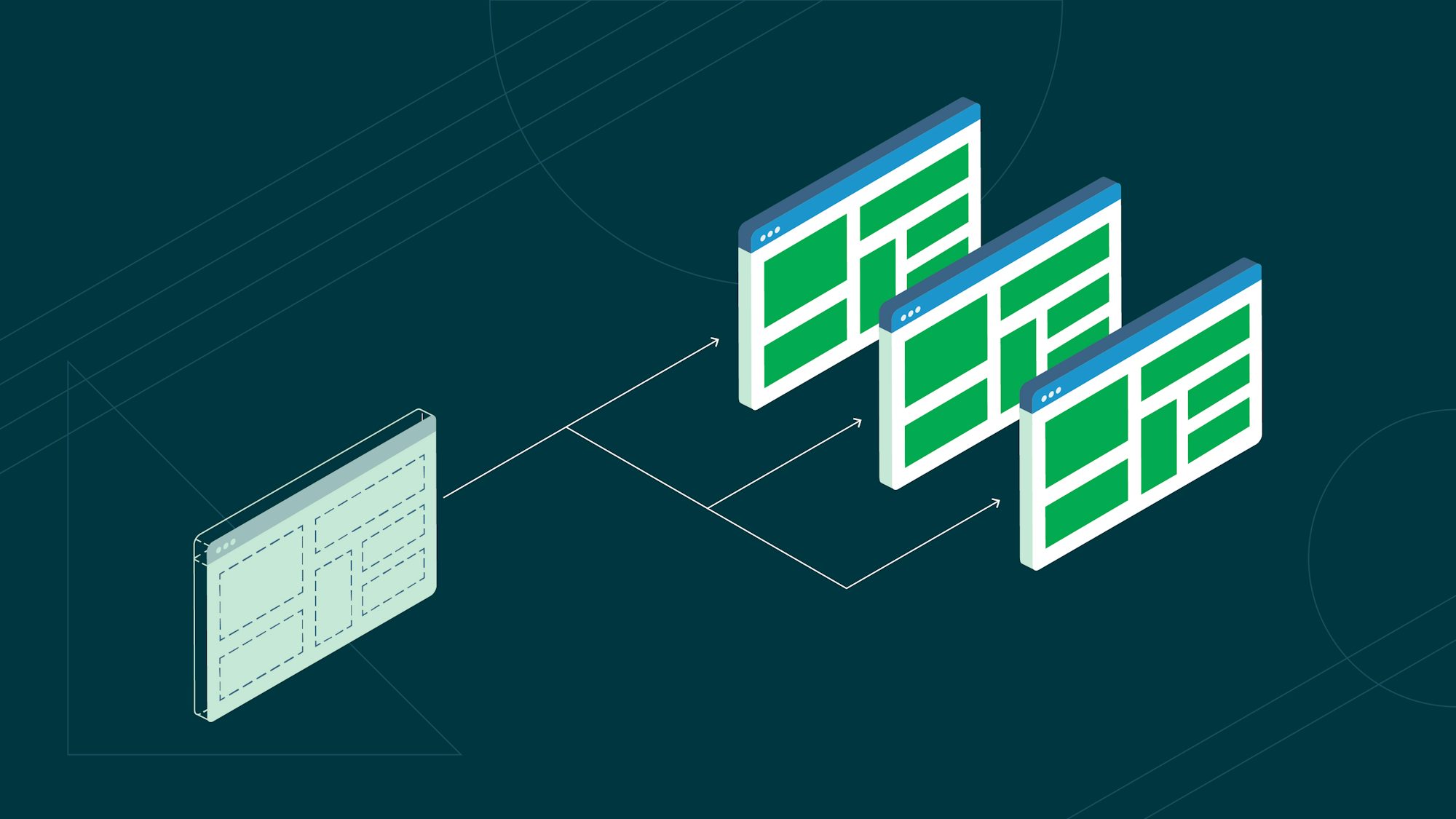

Two of the most widely used technologies to host these deployments are serverless functions and containers. What are they, how do they differ, and how do you decide which is best for your application? In this comparison, we will look at some important differences between serverless computing and containers and help you decide which to use for your next project.

What is serverless?

Serverless computing is an approach to cloud computing in which the cloud provider manages the execution of code by dynamically allocating resources.

The term “serverless” can be somewhat misleading, as it implies that no servers are involved, which isn’t the case. Instead, it means that the complexity of managing and scaling servers is abstracted away from the developer: the provider runs the servers and dynamically manages the allocation of machine resources.

Serverless functions are usually small, lightweight functions with a single purpose. This modular approach lets developers build applications that are scalable and flexible. Each function is independently deployable and is triggered by specific events, such as HTTP requests, file uploads, or database changes. The cloud provider allocates resources to execute a function only when it is invoked, and nothing runs for it while it is idle. The trade-off is that a function that has not run recently may take longer to respond the first time it is called, a delay known as a cold start.
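As a minimal sketch of an event-triggered function, here is a Python handler written in the style AWS Lambda uses for HTTP requests. It assumes an API Gateway proxy-style event (query parameters under `queryStringParameters`, which is `None` when there are none) and returns the status-code-and-body response shape that integration expects. The function name `lambda_handler` and the greeting logic are illustrative.

```python
import json


def lambda_handler(event, context):
    """Handle one HTTP request routed to the function by an API gateway.

    Assumes an API Gateway proxy-style event, where query string
    parameters arrive under "queryStringParameters" (None when absent).
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")

    # Treat each invocation as independent: the provider may start a fresh
    # environment for any call, so don't rely on in-memory state.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Simulate an invocation locally with a hand-built event.
    fake_event = {"queryStringParameters": {"name": "CircleCI"}}
    print(lambda_handler(fake_event, None))
```

The provider, not your code, decides when this function runs: an HTTP request arriving at the gateway is the event that triggers it.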

Most cloud providers offer serverless functions, sometimes called functions as a service (FaaS). The leading offerings are AWS Lambda, Azure Functions, and Google Cloud Functions, each with many integrations within its provider’s ecosystem. Serverless functions are well suited to providing API endpoints or microservices.

What are containers?

Containers are a self-contained, lightweight virtualization technology that packages an application and its dependencies, including libraries, other binaries, and configuration files, into a single executable unit. Rather than including a separate operating system, containers share the host operating system’s kernel. This makes them faster to start and more efficient than full virtual machines, which virtualize an entire hardware system and run their own guest operating system.

The classic metaphor for understanding containers is to imagine a container ship transporting cargo across large bodies of water. The ship itself represents the host OS and hardware, while the cargo containers are the containerized application and dependencies.

Containers can be built and run with tools such as Docker and deployed to managed services such as Amazon Elastic Container Service (ECS). However, DevOps teams most commonly deploy containers to Kubernetes clusters.

Containers are one of the best ways to transform an existing monolithic application into a cloud-native one. Decoupling the application into a microservice architecture allows each microservice to be developed, managed, and scaled independently within its own container.

Serverless vs containers: similarities and differences

There are several similarities between serverless and containerized applications. There are also some important differences.

How are serverless and containers similar?

In most cases, especially with managed cloud services, developers don’t need to actively manage the infrastructure that hosts their serverless functions or containers. The host hardware and operating system are isolated from the application running on top of them, so DevOps teams do not need to consider what hardware the serverless functions or containers use.

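Both hosting options can also scale vertically by giving each instance more resources, such as more CPU, more memory, or faster networking. With serverless functions, this is usually a configuration setting rather than a hardware change; AWS Lambda, for example, allocates CPU power in proportion to the memory you configure.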

The exception to this is when using containers with on-premises infrastructure. In that case, hardware provisioning is a manual process, often handled by a dedicated infrastructure team.

Changes in demand, such as a spike in traffic, may require your application to scale horizontally by running more instances. Kubernetes, an open-source orchestration system, can automatically add or remove container replicas, often within seconds, based on metrics such as CPU utilization. Similarly, many FaaS offerings automatically scale out based on the number of incoming requests or events.
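To see how request volume translates into scale-out, a common rule of thumb is Little’s law: the number of requests in flight equals the request rate multiplied by the average request duration. AWS documents the same formula for estimating Lambda concurrency. The sketch below applies it; `per_instance_capacity` is an illustrative parameter, since AWS Lambda handles one request per execution environment at a time, while a container replica may serve several requests concurrently.

```python
import math


def instances_needed(requests_per_second: float,
                     avg_duration_seconds: float,
                     per_instance_capacity: int = 1) -> int:
    """Estimate how many instances must run at once to keep up with traffic.

    Little's law: requests in flight = arrival rate x average time per request.
    """
    if requests_per_second < 0 or avg_duration_seconds < 0:
        raise ValueError("rate and duration must be non-negative")
    if per_instance_capacity < 1:
        raise ValueError("each instance must handle at least one request")
    in_flight = requests_per_second * avg_duration_seconds
    # Round up: a fraction of an instance still needs a whole instance.
    return math.ceil(in_flight / per_instance_capacity)


# 100 requests/s at 250 ms each keeps about 25 requests in flight.
print(instances_needed(100, 0.25))                            # 25 function instances
print(instances_needed(100, 0.25, per_instance_capacity=10))  # 3 container replicas
```

Doubling either the traffic or the request duration doubles the instances needed, which is why slow functions can scale out faster than expected under load.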

Serverless functions and containers are both compatible with top CI/CD platforms, including CircleCI. For each build that completes successfully, CI/CD tools can deploy a new version of a container image or serverless function to your infrastructure provider.

How are serverless and containers different?

Despite the similarities between the two technologies, there are some inherent differences.

Orchestration requirements

Both serverless functions and containers are elastic, so they can scale in and out when required. However, DevOps teams that use containers typically need container orchestration software like Kubernetes to scale automatically based on given criteria. Many FaaS platforms, by contrast, provide automatic scaling out of the box.
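The Kubernetes HorizontalPodAutoscaler is one example of such orchestration. Its documented core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when that ratio is within a tolerance of 1.0 (0.1 by default). The simplified sketch below reproduces that rule and clamps the result to configured minimum and maximum replica counts. It deliberately ignores details of the real controller, such as stabilization windows, pod readiness, and missing metrics.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HorizontalPodAutoscaler scaling rule."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: avoid flapping between replica counts.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go below or above the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 100% CPU against a 50% target: scale out to 8.
print(desired_replicas(4, current_metric=100, target_metric=50))
```

With a FaaS platform, the equivalent logic runs inside the provider’s service, so there is nothing to configure or operate.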

Functional scope

Serverless functions are often small, self-contained pieces of functionality with a single responsibility. They are usually short-lived, running for seconds or a few minutes, and providers cap how long a single invocation can run (15 minutes on AWS Lambda, for example). This makes them ideal for handling bursty, sporadic workloads like responding to web requests or processing events. Containers, by contrast, work best for larger, long-running applications or applications with multiple responsibilities.
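Short-lived functions still sometimes receive more work than fits in one invocation. A common pattern is to check the time remaining before the provider’s timeout and stop early, returning unfinished items for a follow-up invocation or a queue. The sketch below assumes the AWS Lambda Python context object, whose `get_remaining_time_in_millis()` method reports the time left before the timeout. `process_item`, `SAFETY_MARGIN_MS`, and `FakeContext` are hypothetical; `FakeContext` is a local stand-in so the handler can be exercised without AWS.

```python
SAFETY_MARGIN_MS = 10_000  # Hypothetical: stop with 10 s to spare.


def process_item(item):
    """Hypothetical stand-in for real per-item work."""
    return item * 2


def batch_handler(event, context):
    """Process items until the invocation is close to its timeout."""
    pending = list(event.get("items", []))
    processed = []
    while pending and context.get_remaining_time_in_millis() > SAFETY_MARGIN_MS:
        processed.append(process_item(pending.pop(0)))
    # Anything left over can be re-queued or passed to another invocation.
    return {"processed": processed, "remaining": pending}


class FakeContext:
    """Local stand-in for the Lambda context: each time check uses up a fixed slice."""

    def __init__(self, remaining_ms: int, cost_per_check_ms: int):
        self.remaining_ms = remaining_ms
        self.cost_per_check_ms = cost_per_check_ms

    def get_remaining_time_in_millis(self) -> int:
        current = self.remaining_ms
        self.remaining_ms -= self.cost_per_check_ms
        return current


print(batch_handler({"items": [1, 2, 3, 4, 5]}, FakeContext(40_000, 10_000)))
# {'processed': [2, 4, 6], 'remaining': [4, 5]}
```

If work routinely needs this kind of splitting, it may be a sign the job is better suited to a long-running container.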

Billing

A significant difference is billing. Your provider bills serverless functions only for the time they spend running, typically plus a small per-request fee. With containers, you usually keep at least some instances running 24/7, which often costs more for workloads that sit idle much of the time. If your application is small and better suited to serverless, paying for idle container capacity quickly adds unnecessary cost and a larger environmental impact.
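To make the billing difference concrete, the sketch below compares a month of pay-per-use function costs with always-on container instances. The formulas follow a common pricing shape: a per-request fee plus a charge per GB-second (allocated memory in GB multiplied by run time in seconds) for functions, and an hourly rate per instance for containers. Every rate shown is a made-up placeholder, not any provider’s actual price.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # Average month: 8,760 hours per year / 12.


@dataclass
class FunctionPricing:
    per_million_requests: float  # Hypothetical placeholder rate.
    per_gb_second: float         # Hypothetical placeholder rate.


@dataclass
class ContainerPricing:
    per_instance_hour: float     # Hypothetical placeholder rate.


def function_monthly_cost(requests: int, avg_duration_s: float,
                          memory_gb: float, pricing: FunctionPricing) -> float:
    """Pay-per-use: you are charged only while invocations run."""
    gb_seconds = requests * avg_duration_s * memory_gb
    request_fee = requests / 1_000_000 * pricing.per_million_requests
    return gb_seconds * pricing.per_gb_second + request_fee


def container_monthly_cost(instances: int, pricing: ContainerPricing) -> float:
    """Always-on: every instance is billed for every hour, busy or idle."""
    return instances * HOURS_PER_MONTH * pricing.per_instance_hour


fn = FunctionPricing(per_million_requests=0.25, per_gb_second=0.00002)
ct = ContainerPricing(per_instance_hour=0.05)

# 2 million requests a month, 250 ms each, 512 MB of memory.
print(f"functions:  ${function_monthly_cost(2_000_000, 0.25, 0.5, fn):.2f}")
print(f"containers: ${container_monthly_cost(2, ct):.2f}")
```

The function bill grows with traffic while the container bill stays flat, so for sustained, heavy workloads the comparison can reverse.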

There are also serverless offerings for containers. AWS Fargate, for example, runs containers without requiring you to manage servers, and bills for the vCPU and memory your containers request for as long as they run, rather than for provisioned servers.

Vendor lock-in

Another difference is that vendor lock-in is more of a concern with serverless functions. You can easily become tied to a particular ecosystem because of the code needed to integrate with the provider’s event formats and associated services.

In contrast, it is easier to stay vendor neutral using containers. Containers can also run almost any language, because each image includes its own runtime, while serverless platforms natively support a limited selection of languages. The list of supported languages varies by provider, and some providers let you add custom runtimes.
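One common way to limit serverless lock-in is to keep business logic free of provider-specific code and confine each platform’s event and response formats to a thin adapter. In the sketch below, `quote_shipping` and its pricing rule are hypothetical; `lambda_handler` assumes an API Gateway proxy-style event with a JSON `body` string, and `handle_json_request` stands in for whatever web framework route a containerized service would use.

```python
import json


def quote_shipping(weight_kg: float, express: bool = False) -> dict:
    """Provider-neutral business logic: no cloud SDKs or event formats here."""
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    price = 5.0 + 2.0 * weight_kg  # Hypothetical pricing rule.
    if express:
        price *= 1.5
    return {"price": price, "express": express}


def lambda_handler(event, context):
    """Thin AWS Lambda-style adapter: unpack the event, call the core, wrap the result."""
    body = json.loads(event.get("body") or "{}")
    result = quote_shipping(float(body["weight_kg"]), bool(body.get("express", False)))
    return {"statusCode": 200, "body": json.dumps(result)}


def handle_json_request(raw_body: str) -> str:
    """Thin adapter for a containerized service, e.g. called from a web framework route."""
    body = json.loads(raw_body)
    result = quote_shipping(float(body["weight_kg"]), bool(body.get("express", False)))
    return json.dumps(result)
```

Moving to another provider, or from functions to containers, then means rewriting only an adapter, not `quote_shipping`.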

How to choose between serverless and containers for your application

When you are deciding whether serverless or containers is best for your application, it is best to take all the factors listed above into consideration. However, your application architecture’s size and structure should be the main influence on your decision.

You can deploy a small application, or one that can easily be split into multiple smaller microservices, as a serverless application. On the other hand, a larger, more complex application might be better suited to containers. Tightly coupled sets of services that cannot easily be broken down into small microservices are strong candidates for containers.

Limitations of serverless offerings can also make containers the better choice for some applications. Examples include applications written in languages your serverless provider does not support and applications with long running times, such as machine learning workloads.
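The criteria in this section can be captured as a simple checklist. The sketch below is a hypothetical heuristic rather than a definitive rule: `MAX_FUNCTION_SECONDS` uses AWS Lambda’s 15-minute maximum timeout, and `SUPPORTED_LANGUAGES` is a placeholder you would replace with your provider’s actual list.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Assumption: AWS Lambda's maximum timeout; other providers' limits differ.
MAX_FUNCTION_SECONDS = 15 * 60

# Placeholder: replace with the runtimes your provider actually supports.
SUPPORTED_LANGUAGES = {"python", "javascript", "java", "go", "c#", "ruby"}


@dataclass
class Workload:
    language: str
    longest_run_seconds: float
    easily_split: bool  # Can it be broken into small, independent functions?


def suggest_platform(w: Workload) -> Tuple[str, List[str]]:
    """Return a suggested platform plus the reasons that pushed toward containers."""
    reasons = []
    if w.language.lower() not in SUPPORTED_LANGUAGES:
        reasons.append(f"{w.language} is not a supported function runtime")
    if w.longest_run_seconds > MAX_FUNCTION_SECONDS:
        reasons.append("runs longer than the function timeout")
    if not w.easily_split:
        reasons.append("tightly coupled; hard to split into small functions")
    return ("containers" if reasons else "serverless", reasons)


print(suggest_platform(Workload("Python", 2, easily_split=True)))
print(suggest_platform(Workload("Python", 6 * 3600, easily_split=False)))
```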

It’s also important to note that you do not have to choose one or the other. Serverless and containers are not mutually exclusive: you can use containers where needed, combine them with serverless functions where it makes sense, and enjoy the best of both worlds. As mentioned earlier, there are even serverless offerings for hosting containers that aim to bridge the gap between the two options.

Conclusion

Both serverless and containers are great options for creating the scalable cloud-native applications that will allow you to innovate more quickly.

Whether you choose to use serverless or containers, you can use CircleCI’s CI/CD platform to automate building, testing, and deploying your application. Once you have determined how you can use serverless and containers to your advantage, sign up for a free CircleCI account and start optimizing your deployment workflows.