Building an automated unit testing pipeline for serverless applications

Software Engineer

The Serverless framework is an open-source framework written in Node.js that simplifies the development and deployment of AWS Lambda functions. It frees you from worrying about how to package and deploy the application to the cloud, so you can focus on your application logic.

Serverless applications are distributed by design, so good test coverage is vital, and that coverage should include unit tests. Unit test cases make it easier to detect bugs early during the development phase and guard against regression issues. They improve the overall design and quality of the application and allow you to refactor code with confidence.

Because your application could depend on a number of other AWS services, it is difficult to replicate the cloud environment locally. Unit testing lets you test your application logic in isolation. Because serverless functions are event-driven, you can mock the incoming events in your test cases and assert the expected behaviour based on the event received.

In this tutorial, you will learn how to build an automated unit testing pipeline for serverless applications using the Jest testing framework. It builds on the Deploying a serverless application blog post.

Prerequisites

For this tutorial, you will need to set up these items:

- Create a CircleCI account

- Install Node.js and NPM

- Set up the serverless framework

- Install AWS CLI

- Create an AWS account

- Set up AWS to use OpenID Connect Tokens

Our tutorials are platform-agnostic, but use CircleCI as an example. If you don’t have a CircleCI account, sign up for a free one here.

Creating a new serverless application

Create a new directory for your project. Run:

mkdir circleci-serverless-unit-testing

cd circleci-serverless-unit-testing

From the circleci-serverless-unit-testing directory, create a new serverless application using the aws-nodejs template. (Make sure that you have the serverless framework set up on your system.) Run this command:

serverless create --template aws-nodejs

Executing this command creates a handler.js file with a basic Lambda handler and a serverless.yml file with the config for your application.

Creating a Node.js Lambda function

Next, define an AWS Lambda function using Node.js. This function generates a dummy CSV file, uploads it to AWS S3, and adds an entry to the DynamoDB table. Later in the tutorial, you will write unit test cases for the Lambda handler using the Jest testing framework.

Create a new package.json file in the root of the serverless application to declare the dependencies the Lambda function needs. In the package.json file, add this code snippet:

{
  "scripts": {
  },
  "dependencies": {
    "csv-stringify": "^6.0.5",
    "fs": "0.0.1-security",
    "uuid": "^8.3.2"
  },
  "devDependencies": {
  }
}

Next, update the existing handler.js file with this snippet:

"use strict"

const AWS = require('aws-sdk');
const { v4: uuidv4 } = require('uuid');
const fs = require('fs');
const { stringify } = require('csv-stringify/sync');

AWS.config.update({ region: 'us-west-2' });

const ddb = new AWS.DynamoDB();
const s3 = new AWS.S3();

// Resource names are passed in as environment variables (see serverless.yml)
const TABLE_NAME = process.env.TABLE_NAME;
const BUCKET_NAME = process.env.BUCKET_NAME;

module.exports.uploadCsvToS3Handler = async (event) => {
  try {
    const uploadedObjectKey = await generateDataAndUploadToS3();
    const jobId = event['jobId'];
    const params = {
      TableName: TABLE_NAME,
      Item: {
        'jobId': { S: jobId },
        'reportFileName': { S: uploadedObjectKey }
      }
    };
    // Call DynamoDB to add the item to the table
    await ddb.putItem(params).promise();
    return {
      statusCode: 200,
      body: JSON.stringify(
        {
          "status": "success",
          "jobId": jobId,
          "objectKey": uploadedObjectKey
        },
        null,
        2
      ),
    };
  } catch (error) {
    throw Error(`Error in backend: ${error}`);
  }
};

const generateDataAndUploadToS3 = async () => {
  const filePath = '/tmp/test_user_data.csv';
  const objectKey = `${uuidv4()}.csv`;
  await writeCsvToFileAndUpload(filePath, objectKey);
  return objectKey;
};

const uploadFile = async (fileName, objectKey) => {
  // Read content from the file
  const fileContent = fs.readFileSync(fileName);
  // Setting up S3 upload parameters
  const params = {
    Bucket: BUCKET_NAME,
    Key: objectKey,
    Body: fileContent
  };
  // Upload the file and wait for S3 to finish; a failed upload rejects,
  // and the error propagates to the handler's catch block
  const data = await s3.upload(params).promise();
  console.log(`File uploaded successfully. ${data.Location}`);
  return objectKey;
};

async function writeCsvToFileAndUpload(filePath, objectKey) {
  const data = getCsvData();
  const output = stringify(data);
  // Write the file synchronously so it exists before it is read for upload
  fs.writeFileSync(filePath, output);
  await uploadFile(filePath, objectKey);
}

function getCsvData() {
  return [
    ['1', '2', '3', '4'],
    ['a', 'b', 'c', 'd']
  ];
}

Here is what this snippet includes:

- uploadCsvToS3Handler is the handler function invoked by AWS Lambda. It calls the generateDataAndUploadToS3 method to generate a dummy CSV file.

- The dummy CSV file is then uploaded to AWS S3 using the uploadFile method.

- The generateDataAndUploadToS3 method then returns the generated file key, which the handler uses to insert a new item into the AWS DynamoDB table.

- The handler returns an HTTP JSON response containing the uploadedObjectKey.

Note: The handler reads the AWS S3 bucket name and the AWS DynamoDB table name from environment variables. AWS S3 bucket names must be unique across all AWS accounts in all AWS Regions.
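If either variable is missing, the handler only finds out when an AWS SDK call fails. One option is to check the configuration up front. The sketch below is an assumption and not part of the tutorial's handler. requireEnv is a hypothetical helper that takes the environment object as a parameter, so you can test it without touching process.env.

```javascript
// Hypothetical helper: returns the named variables from `env`, or throws a
// single error listing every variable that is missing or empty.
function requireEnv(names, env = process.env) {
  const missing = names.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }
  return Object.fromEntries(names.map((name) => [name, env[name]]));
}

// Usage with an explicit environment object (values mirror serverless.yml).
const config = requireEnv(['TABLE_NAME', 'BUCKET_NAME'], {
  TABLE_NAME: 'circle-ci-unit-testing-table',
  BUCKET_NAME: 'circle-ci-unit-testing-bucket',
});
console.log(config.TABLE_NAME); // circle-ci-unit-testing-table
```

If you call such a helper at module load in handler.js, a misconfigured deployment fails on cold start rather than at the first DynamoDB call. The Jest tests would then need to set process.env.TABLE_NAME and process.env.BUCKET_NAME before requiring the handler.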

Updating the serverless config

The Lambda handler you defined earlier uses environment variables and interacts with AWS S3 and AWS DynamoDB. You can set this up by making these changes to the serverless.yml file:

- Provision new cloud resources by creating a new AWS S3 bucket and a new AWS DynamoDB Table.

- Update the handler name for the Lambda function and pass bucket name and table name as environment variables.

- Update the IAM role to grant the Lambda function permissions to the S3 bucket and DynamoDB table.

Note: Be sure to update the AWS S3 bucket name to something unique. Buckets cannot share the same name, and your deployment might fail if one with that name already exists on AWS.
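Only AWS can confirm at deploy time that a name is not taken, but you can check the naming rules locally first. The function below is a hypothetical helper. It covers only a subset of the documented S3 bucket naming rules: length, allowed characters, first and last character, adjacent periods, and IP-address format. Consult the AWS documentation for the full list.

```javascript
// Hypothetical pre-deploy check covering a SUBSET of the S3 bucket naming rules.
// It cannot tell whether a name is already taken; only AWS can.
function checkBucketName(name) {
  const problems = [];
  if (name.length < 3 || name.length > 63) {
    problems.push('must be between 3 and 63 characters long');
  }
  if (!/^[a-z0-9.-]+$/.test(name)) {
    problems.push('may contain only lowercase letters, numbers, periods, and hyphens');
  }
  if (!/^[a-z0-9]/.test(name) || !/[a-z0-9]$/.test(name)) {
    problems.push('must begin and end with a letter or number');
  }
  if (name.includes('..')) {
    problems.push('must not contain two adjacent periods');
  }
  if (/^\d{1,3}(\.\d{1,3}){3}$/.test(name)) {
    problems.push('must not be formatted as an IP address');
  }
  return problems;
}

console.log(checkBucketName('circle-ci-unit-testing-bucket')); // []
console.log(checkBucketName('My_Bucket'));
```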

To apply these changes, update serverless.yml with this code:

service: circleci-serverless-unit-testing

frameworkVersion: '3'

provider:
  name: aws
  runtime: nodejs12.x
  # you can overwrite defaults here
  region: us-west-2
  # you can add statements to the Lambda function's IAM Role here
  iam:
    role:
      statements:
        - Effect: "Allow"
          Action:
            - "s3:ListBucket"
          Resource: { "Fn::Join" : ["", ["arn:aws:s3:::", { "Ref" : "ServerlessDeploymentBucket" } ] ] }
        - Effect: "Allow"
          Action:
            - "s3:PutObject"
          Resource:
            Fn::Join:
              - ""
              - - "arn:aws:s3:::"
                - "Ref" : "ServerlessDeploymentBucket"
                - "/*"
        - Effect: "Allow"
          Action:
            - "s3:PutObject"
          Resource: { "Fn::Join": ["", ["arn:aws:s3:::circle-ci-unit-testing-bucket", "/*" ] ] }
        - Effect: "Allow"
          Action:
            - dynamodb:Query
            - dynamodb:Scan
            - dynamodb:GetItem
            - dynamodb:PutItem
            - dynamodb:UpdateItem
            - dynamodb:DeleteItem
          # Resolve the table ARN from the resource below instead of hardcoding an account ID
          Resource: { "Fn::GetAtt": ["DynamoDB", "Arn"] }

functions:
  UploadCsvToS3:
    handler: handler.uploadCsvToS3Handler
    environment:
      TABLE_NAME: circle-ci-unit-testing-table
      BUCKET_NAME: circle-ci-unit-testing-bucket

# you can add CloudFormation resource templates here
resources:
  Resources:
    S3Bucket:
      Type: AWS::S3::Bucket
      Properties:
        BucketName: circle-ci-unit-testing-bucket
    DynamoDB:
      Type: AWS::DynamoDB::Table
      Properties:
        TableName: circle-ci-unit-testing-table
        AttributeDefinitions:
          - AttributeName: jobId
            AttributeType: S
        KeySchema:
          - AttributeName: jobId
            KeyType: HASH
        ProvisionedThroughput:
          ReadCapacityUnits: 1
          WriteCapacityUnits: 1
  Outputs:
    S3Bucket:
      Description: "S3 Bucket Name"
      Value: "circle-ci-unit-testing-bucket"
    DynamoDB:
      Description: "DynamoDB Table Name"
      Value: "circle-ci-unit-testing-table"

The updated config will take care of provisioning the cloud resources and setting the required IAM permissions.
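The S3 statements target different ARNs on purpose. s3:ListBucket applies to the bucket ARN, while s3:PutObject applies to object ARNs, which is why the PutObject resources end in /*. The sketch below illustrates this with a simplified matcher that only understands the * wildcard. It is not how IAM evaluates policies, since IAM also supports ? and policy variables, among other things. The object key in the example is made up.

```javascript
// Simplified illustration: treat "*" in a resource pattern as "any run of characters".
function resourceMatches(pattern, arn) {
  const escaped = pattern
    .split('*')
    .map((part) => part.replace(/[.*+?^${}()|[\]\\]/g, '\\$&'))
    .join('.*');
  return new RegExp(`^${escaped}$`).test(arn);
}

const bucketArn = 'arn:aws:s3:::circle-ci-unit-testing-bucket';
// Same result as the Fn::Join in the PutObject statement: bucket ARN + "/*".
const putObjectPattern = [bucketArn, '/*'].join('');
// Made-up object ARN for a key like the `${uuidv4()}.csv` keys the handler generates.
const objectArn = `${bucketArn}/3f2b8c1e-example.csv`;

console.log(resourceMatches(putObjectPattern, objectArn)); // true
console.log(resourceMatches(putObjectPattern, bucketArn)); // false
console.log(resourceMatches(bucketArn, objectArn)); // false
```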

Setting up unit testing

For this tutorial, you will use the Jest testing framework to write unit test cases for the AWS Lambda function. Jest works well with most JavaScript projects and bundles a test runner, assertions, and mocking in one package. You can generate code coverage reports with the --coverage flag, without adding any configuration. Not only does it support mocking, it also lets you provide manual mock implementations for modules. These features make Jest a great choice for testing serverless applications.

Note: If you are interested in using Mocha/Chai JS for unit testing, this tutorial shows you how to set that up.

Add library dependencies for unit testing

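Add the jest NPM dependency by running the command below. The command also installs aws-sdk as a development dependency. The test files mock aws-sdk, and Jest must be able to resolve a module before it can mock it, unless the mock is declared virtual. The nodejs12.x Lambda runtime already provides aws-sdk, so it does not need to be a production dependency.

npm install --save-dev jest aws-sdk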

Creating a directory structure for unit tests

As a best practice, you should keep the same directory structure for your tests as the application file layout. For example, if you have controllers, views or models defined in your serverless application, you can add similar folders in your __tests__ directory for test cases. Here’s a sample layout for a serverless application:

.
├── __tests__
│   ├── controllers
│   │   └── myAppController.test.js
│   ├── models
│   │   └── user.test.js
│   └── views
│       └── userView.test.js
├── controllers
│   └── myAppController.js
├── handler.js
├── models
│   └── user.js
├── views
│   └── userView.js
├── node_modules
├── package-lock.json
├── package.json
└── serverless.yml
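With this convention, you can derive each test file's path from its source file's path. The helper below is a hypothetical illustration of that mapping. It assumes tests live under __tests__ and use the .test.js suffix, and Jest's default testMatch patterns pick up both.

```javascript
const path = require('path');

// Hypothetical helper: maps a source file to its mirrored test file,
// e.g. models/user.js -> __tests__/models/user.test.js
function testPathFor(sourcePath) {
  const { dir, name, ext } = path.posix.parse(sourcePath);
  return path.posix.join('__tests__', dir, `${name}.test${ext}`);
}

console.log(testPathFor('controllers/myAppController.js'));
// __tests__/controllers/myAppController.test.js
console.log(testPathFor('handler.js'));
// __tests__/handler.test.js
```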

Writing unit tests for serverless functions

To add unit test cases for the serverless application, create a __tests__ directory in the root of your serverless application:

mkdir __tests__

Next, add a handler.test.js file. This file will contain tests for the handler function defined in handler.js.

- Create an empty handler.test.js file inside the __tests__ directory.

- In handler.test.js, add a mock implementation for aws-sdk by passing a module factory to jest.mock. The factory only needs to define the parts of the module that the handler uses, so you don’t need to mock each and every function of the AWS SDK.

- Create mock implementations for DynamoDB’s putItem and S3’s upload functions.

Add this code snippet to the file:

jest.mock("aws-sdk", () => {
  return {
    config: {
      update() {
        return {};
      },
    },
    DynamoDB: jest.fn(() => {
      return {
        putItem: jest.fn().mockImplementation(() => ({ promise: jest.fn().mockReturnValue(Promise.resolve(true)) })),
      };
    }),
    S3: jest.fn(() => {
      return {
        upload: jest.fn().mockImplementation(() => ({ promise: jest.fn().mockReturnValue(Promise.resolve(true)) })),
      };
    }),
  };
});

You have defined mock implementations whose promises resolve successfully. Next, add a unit test for the Lambda handler. Call the Lambda handler with a test ID and assert that the status in the response body is set to success.

const handler = require('../handler');

describe('uploadCsvToS3Handler', () => {
  beforeEach(() => {
    jest.restoreAllMocks();
  });

  test('test uploadFile', async () => {
    const response = await handler.uploadCsvToS3Handler({
      jobId: 'test-job-id',
    });
    let body = JSON.parse(response.body);
    expect(body.status).toBe('success');
    expect(body.jobId).toBe('test-job-id');
  });
});

You can run npx jest from the terminal to make sure that the test passes. The npm test script is added to package.json later in this tutorial.
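The mocks in handler.test.js return an object with a promise method because the handler uses the AWS SDK for JavaScript v2 calling style, in which a call such as ddb.putItem(params).promise() returns a Promise. The standalone Node.js sketch below is not part of handler.test.js. It shows the same shape without Jest. createFakeDynamoDB and saveJob are hypothetical names, and the fake records the parameters it receives and resolves immediately.

```javascript
// Hypothetical fake with the same shape as the jest.mock factory:
// putItem(params) returns an object whose promise() resolves.
function createFakeDynamoDB() {
  const calls = [];
  return {
    calls,
    putItem(params) {
      calls.push(params);
      return { promise: () => Promise.resolve({}) };
    },
  };
}

// Hypothetical function written in the handler's style: it only works if
// putItem(...) returns something with a promise() method.
async function saveJob(ddb, tableName, jobId, objectKey) {
  await ddb
    .putItem({
      TableName: tableName,
      Item: { jobId: { S: jobId }, reportFileName: { S: objectKey } },
    })
    .promise();
  return jobId;
}

const fake = createFakeDynamoDB();
saveJob(fake, 'test-table', 'test-job-id', 'report.csv').then((jobId) => {
  console.log(jobId); // test-job-id
  console.log(fake.calls[0].TableName); // test-table
});
```

Because putItem is called before the first await, the fake records the call synchronously. That is also why a mock that throws inside putItem, as in the failure test below, is caught by the handler's try/catch.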

Next, add another test case for the failure scenario. Because jest.mock replaces a module for the whole test file, the failure scenario, which needs a different mock implementation, goes in a new file named handler-fail-putItem.test.js inside the __tests__ directory. Add this code snippet to it:

jest.mock("aws-sdk", () => {
  return {
    config: {
      update() {
        return {};
      },
    },
    DynamoDB: jest.fn(() => {
      return {
        putItem: jest.fn().mockImplementation(() => {
          throw new Error();
        }),
      };
    }),
    S3: jest.fn(() => {
      return {
        upload: jest.fn().mockImplementation(() => ({ promise: jest.fn().mockReturnValue(Promise.resolve(false)) })),
      };
    }),
  };
});

const handler = require('../handler');

describe('uploadCsvToS3Handler', () => {
  beforeEach(() => {
    jest.restoreAllMocks();
  });

  test('test uploadFile', async () => {
    // Await the assertion so the test waits for the rejection.
    // A string argument passes when the error message contains it.
    await expect(handler.uploadCsvToS3Handler({
      jobId: 'test-job-id',
    })).rejects.toThrow('Error in backend');
  });
});

Notice that the mock throws an exception when putItem is called on the AWS SDK’s DynamoDB client. Because putItem throws, the handler catches the error and throws a new one whose message starts with 'Error in backend', so the promise returned by the handler rejects. You assert the rejection by matching the error message against the expected string.

The application structure should look like this:

.
├── README.md
├── __tests__
│   ├── handler-fail-putItem.test.js
│   └── handler.test.js
├── handler.js
├── jest.config.js
├── package-lock.json
├── package.json
└── serverless.yml

Running the tests locally

Now that you have added unit tests for your application, you can run them. First, add these scripts to the package.json file:

"scripts": {
  "test": "jest",
  "coverage": "jest --coverage"
}

With these scripts added, you can run all test cases using the npm run test command and generate code coverage reports using the npm run coverage command. Run these commands locally to ensure that all the test cases pass and to check if the code coverage reports are being generated as expected.

Once you run the npm run test command, it will execute all test suites and display the results as shown in the image.

Automating serverless application deployment using CircleCI

Now that the tests pass locally, automate the workflow so that the tests run and code coverage reports are generated with every deployment.

Adding configuration script

In the root of the project, create a .circleci/config.yaml file for the CI pipeline. For this tutorial, you will use OIDC tokens to authenticate with AWS instead of a static access key and secret. An OIDC token is a short-lived token that is freshly issued for each job. To implement this, add:

version: 2.1

orbs:
  aws-cli: circleci/aws-cli@3.0.0
  serverless-framework: circleci/serverless-framework@2.0

commands:
  aws-oidc-setup:
    description: Setup AWS auth using OIDC token
    parameters:
      aws-role-arn:
        type: string
    steps:
      - run:
          name: Get short-term credentials
          command: |
            STS=($(aws sts assume-role-with-web-identity --role-arn << parameters.aws-role-arn >> --role-session-name "circleciunittesting" --web-identity-token "${CIRCLE_OIDC_TOKEN}" --duration-seconds 900 --query 'Credentials.[AccessKeyId,SecretAccessKey,SessionToken]' --output text))
            echo "export AWS_ACCESS_KEY_ID=${STS[0]}" >> $BASH_ENV
            echo "export AWS_SECRET_ACCESS_KEY=${STS[1]}" >> $BASH_ENV
            echo "export AWS_SESSION_TOKEN=${STS[2]}" >> $BASH_ENV
      - run:
          name: Verify AWS credentials
          command: aws sts get-caller-identity

jobs:
  build:
    executor: serverless-framework/default
    steps:
      - checkout
      - aws-cli/install
      - aws-oidc-setup:
          aws-role-arn: "${CIRCLE_CI_WEB_IDENTITY_ROLE}"
      - run:
          name: Test AWS connection
          command: aws s3 ls
      - run:
          name: Install Serverless CLI and dependencies
          # Pin v3 to match frameworkVersion: '3' in serverless.yml
          command: |
            sudo npm i -g serverless@3
            npm install
      - run:
          name: Run tests with code coverage
          command: npm run coverage
      - run:
          name: Deploy application
          command: sls deploy

workflows:
  build:
    jobs:
      - build:
          context:
            - "aws-context"

The workflow uses a context named aws-context, which must be defined in your CircleCI organization settings or the build will fail.

This script uses CircleCI’s aws-cli orb to install the AWS CLI, and the serverless-framework orb’s default executor as the build environment. The job installs the Serverless CLI with npm and deploys the application to AWS with sls deploy.

This pipeline runs all the unit test cases and generates the code coverage report before deploying the application to AWS. Commit the changes and push them to the GitHub repository.
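The aws-oidc-setup command exchanges the token in CIRCLE_OIDC_TOKEN for AWS credentials. That token is a JSON Web Token (JWT), and its short lifetime is recorded in the standard exp (expiry) claim. The sketch below decodes a token’s payload in Node.js for inspection and debugging only. It does not verify the signature, which AWS STS does when it exchanges the token for credentials. decodeJwtPayload is a hypothetical helper, and the example token is built locally rather than issued by CircleCI. Avoid printing real tokens in build logs.

```javascript
// Hypothetical helper: decodes the payload (middle part) of a JWT WITHOUT verifying it.
function decodeJwtPayload(token) {
  const parts = token.split('.');
  if (parts.length !== 3) {
    throw new Error('Expected a JWT with three dot-separated parts');
  }
  // JWT parts are base64url-encoded JSON (Node.js 15.7+ supports 'base64url').
  return JSON.parse(Buffer.from(parts[1], 'base64url').toString('utf8'));
}

// Returns how many seconds remain before the token's exp claim.
function secondsUntilExpiry(payload, nowMs = Date.now()) {
  return payload.exp - Math.floor(nowMs / 1000);
}

// Build an unsigned example token locally so the sketch runs anywhere.
const encode = (obj) => Buffer.from(JSON.stringify(obj)).toString('base64url');
const now = Math.floor(Date.now() / 1000);
const exampleToken = [
  encode({ alg: 'none', typ: 'JWT' }),
  encode({ iat: now, exp: now + 3600 }),
  'signature-not-checked',
].join('.');

const payload = decodeJwtPayload(exampleToken);
console.log(secondsUntilExpiry(payload)); // 3600, or slightly less
```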

Creating a CircleCI project for the application

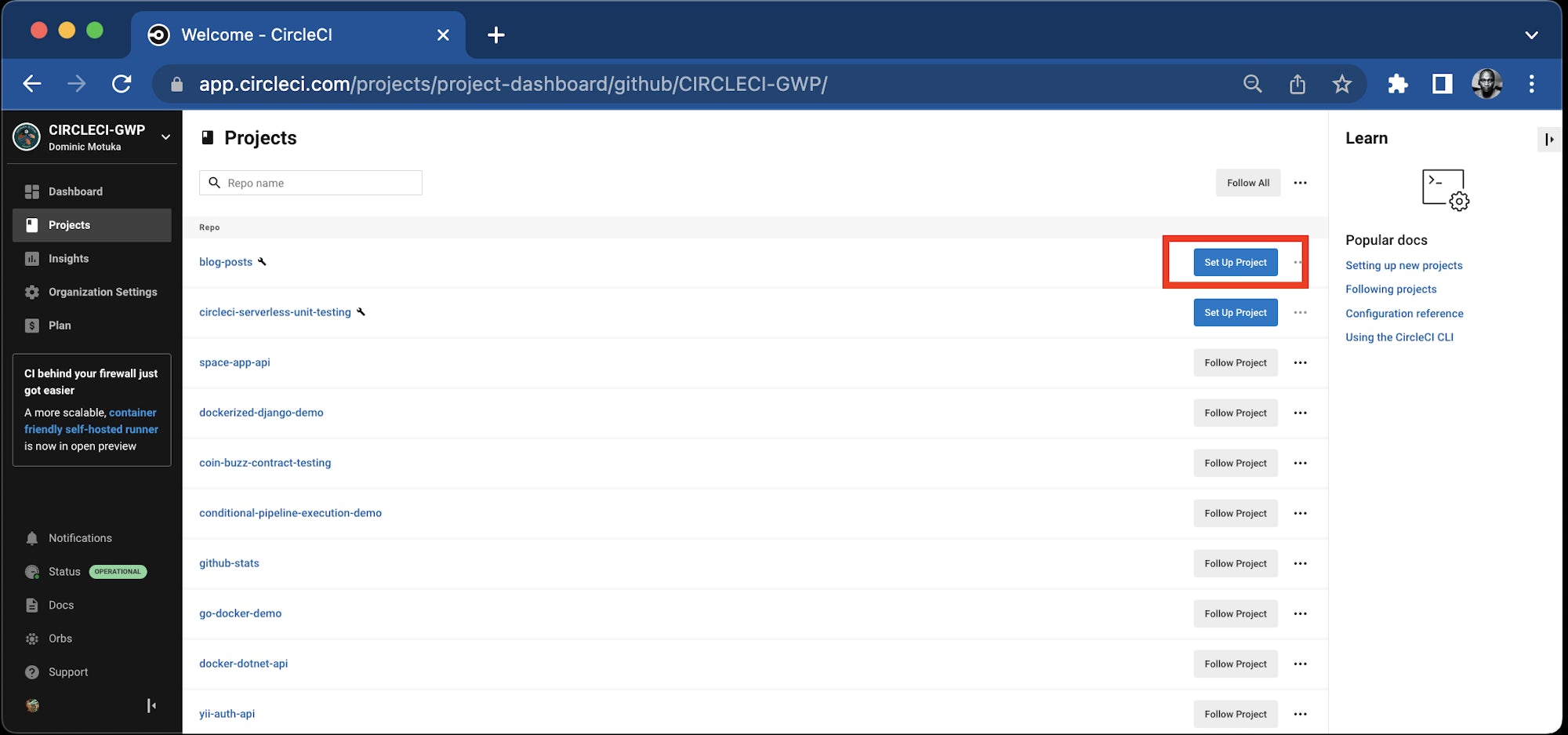

Next, set up the repository as a CircleCI project using the CircleCI console. On the CircleCI console, click Projects and search for the GitHub repo name. Click the Set Up Project button for your project.

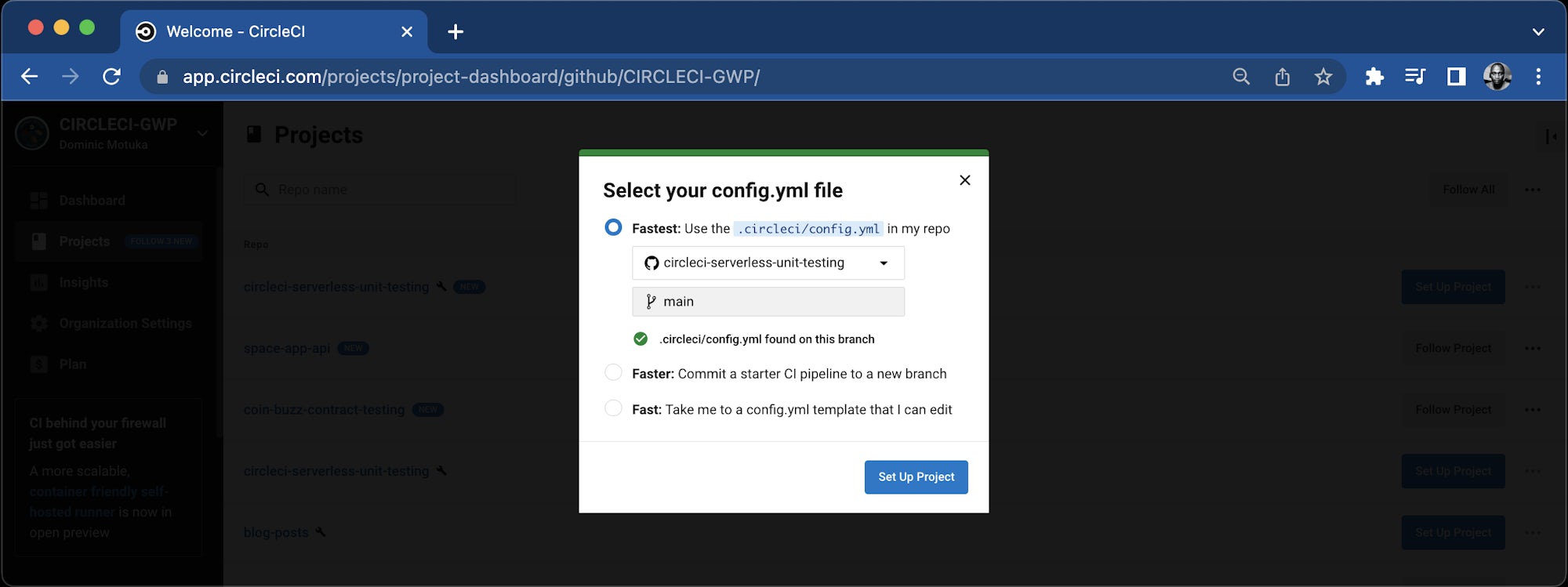

The codebase already contains a config.yaml file, which will be detected by CircleCI. Click Let’s go to continue.

Clicking the Set up project button triggers the pipeline. The pipeline will fail this time because you have not set the environment variables yet. You can set them up next.

Setting up environment variables

On the project page, click Project settings and go to the Environment variables tab. On the next form, click Add environment variable. Add this environment variable:

- Set CIRCLE_CI_WEB_IDENTITY_ROLE to the role ARN obtained from the IAM role page in the AWS console. Make sure you followed the steps to Set up AWS to use OpenID Connect Tokens and created an IAM role as described in the guide.

Once you add the environment variable, it should be listed on the dashboard.

Create CircleCI Context

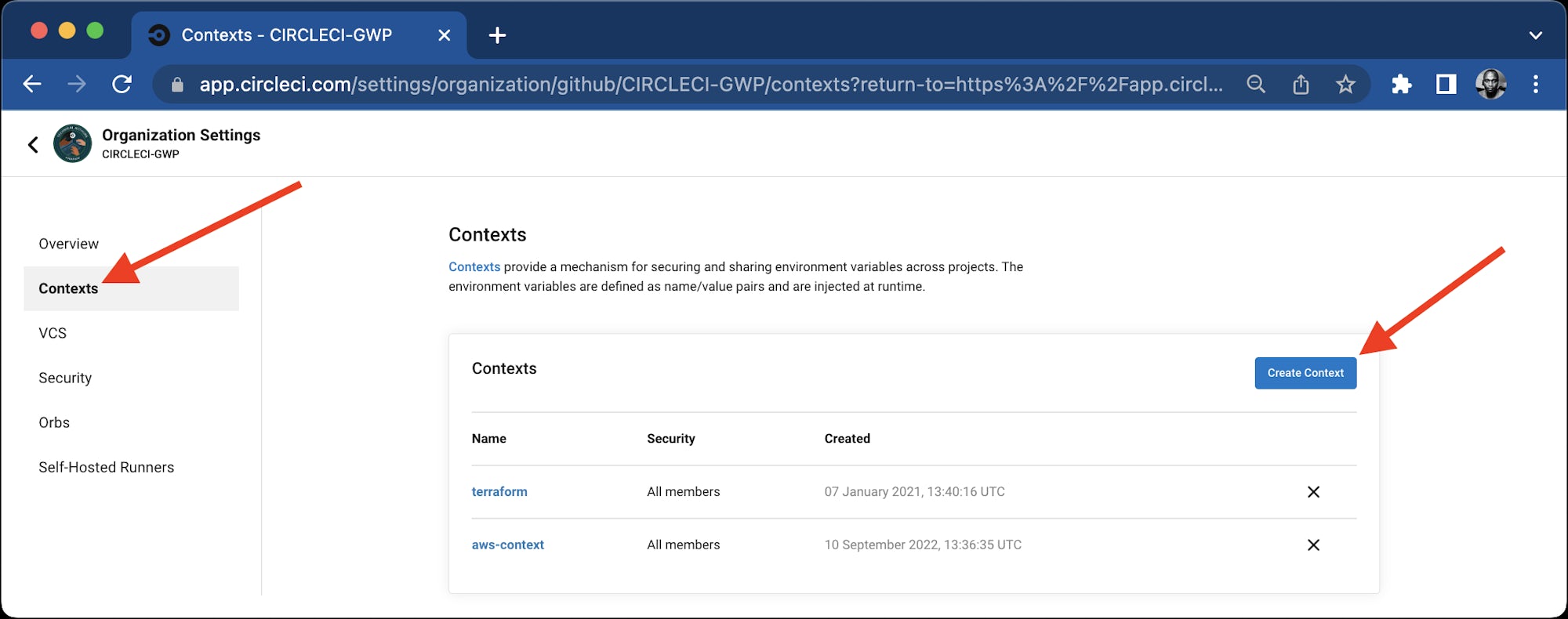

Because the OpenID Connect token is only available to jobs that use at least one context, make sure that each of the jobs that needs an OIDC token uses a context (the context may have no environment variables).

Go to Organization Settings.

Create a context to use in the CircleCI workflow. Call it aws-context.

Now that the context has been created and the environment variables have been configured, trigger the pipeline again. This time the build should succeed.

Conclusion

In this tutorial, you saw how to build an automated unit testing pipeline for serverless applications. The serverless framework simplifies the process of deploying an application to the cloud. To deploy with confidence, it is critical to have extensive test coverage that includes unit tests. Using the Jest framework, you can easily add expressive unit tests for your application that include mocking, spying, and stubbing. This tutorial also used short-lived OIDC tokens to deploy to AWS instead of a static access key and secret. You can check out the full source code used in this tutorial on GitHub.