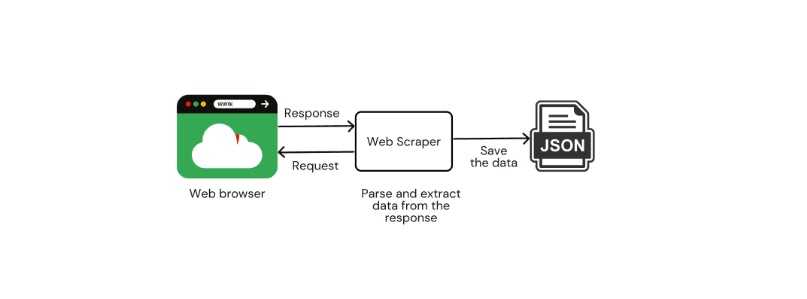

Web scraping is the process of extracting data from web pages by programmatically accessing the underlying HTML. There are a number of practical applications for web scraping, from research and analysis to archiving to training AI/ML models.

This article covers web scraping with Cheerio, an HTML parsing library. You’ll start with an overview of web scraping and its uses, then set up Cheerio and use it to build a web scraper that captures data from web pages and saves it in a JSON file. Finally, you’ll set up a continuous integration pipeline that automatically tests your scraper whenever you change it.

By the end of this tutorial, you will have a scraper that can extract usable data from a web page and an automated testing pipeline to keep it working properly as you update and refine its functionality. Let’s get this party started!

Prerequisites

To follow along with this article, you need to have the following:

- A GitHub account (GitLab users can also follow along)

- Node.js installed on your system

- Familiarity with the Express.js framework

- A CircleCI account

- An HTTP client of your choice, such as Postman

In the next section, you will learn what web scraping is, how to use it to extract data from websites, and why it is useful.

What is web scraping?

Web scraping, or web data extraction, is a type of data mining in which data is extracted from websites and converted into a format usable for further analysis. A user can do it manually or by using an automated script.

Manual web scraping entails navigating to a website and manually extracting the desired data using a web browser, which can be time-consuming, especially if the website is large and complex. When done programmatically, web scraping entails creating a script or bot that automates extracting data from websites.

Web scraping enables you to obtain data that would otherwise be difficult or impossible to get. It is especially useful for data unavailable via traditional APIs or databases. By automating the process, we can quickly and easily collect large amounts of data for further analysis and research.

Note: While web scraping offers numerous benefits, it should be conducted responsibly and within the bounds of legal and ethical guidelines. Respect website terms of service and robots.txt files, and be aware of the laws in your jurisdiction when conducting web scraping activities.
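Checking robots.txt can be automated. The sketch below shows the basic idea: collect the `Disallow` rules from groups that apply to every crawler (`User-agent: *`), then test a path against them. The function name `isDisallowedForAll` is hypothetical. The sketch makes a simplifying assumption: every rule is treated as a plain path prefix. It ignores `Allow` lines, wildcards, and crawler-specific groups.

```javascript
// Minimal sketch of a robots.txt check, assuming plain prefix rules.
// Only Disallow lines in groups that include "User-agent: *" are honored;
// Allow lines, wildcards ("*", "$"), and other directives are ignored.
function isDisallowedForAll(robotsTxt, path) {
  const disallowed = [];
  let groupAgents = [];
  let readingAgents = false;

  for (const rawLine of robotsTxt.split(/\r?\n/)) {
    // Strip comments and surrounding whitespace
    const line = rawLine.split("#")[0].trim();
    const colon = line.indexOf(":");
    if (colon === -1) continue;

    const field = line.slice(0, colon).trim().toLowerCase();
    const value = line.slice(colon + 1).trim();

    if (field === "user-agent") {
      // Consecutive User-agent lines share one group; a new run starts a new group
      if (!readingAgents) groupAgents = [];
      groupAgents.push(value);
      readingAgents = true;
    } else {
      readingAgents = false;
      // An empty Disallow value allows everything, so it adds no rule
      if (field === "disallow" && value !== "" && groupAgents.includes("*")) {
        disallowed.push(value);
      }
    }
  }

  return disallowed.some((prefix) => path.startsWith(prefix));
}
```

You could download the site's `/robots.txt` file with Axios and pass its text to the function, along with the path you plan to scrape. For anything beyond a quick check, use a parser that implements the full Robots Exclusion Protocol (RFC 9309).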

Using Cheerio and Node.js for web scraping

To implement the web scraper, you will use Axios to request the page you want to scrape and Cheerio to parse the HTML it returns. Express.js provides a small web application that exposes the scraper as an API endpoint, and Node.js runs that application.

Cheerio is a library for parsing HTML on the server and traversing it with a jQuery-like API. You load HTML into Cheerio and then use its selector methods to find elements and extract information. The scraper stores the results in JSON files as an array of objects, one object per product. Because you will be scraping product listings from Amazon.com, each product has a title, a price, and a link.

In the following section, you will build a scraper to scrape data off Amazon.com search results.

Building a web scraper

In this section, you will learn how to build a web scraper for obtaining data from a website and saving the data in a JSON file.

Initializing a Node.js project

To begin, create a directory for your project and, inside it, initialize a Node.js project with the command below. Answer each prompt and press Enter. Pressing Enter without typing anything accepts the default value, and `npm init -y` accepts all the defaults at once.

npm init

Installing dependencies

In this application, you are going to use the following Node.js libraries:

- Axios: Promise-based HTTP client for the browser and Node.js

- Cheerio: Fast, flexible, and lean implementation of core jQuery designed specifically for the server

- Body-Parser: Node.js body parsing middleware

- Express: Fast, unopinionated, minimalist web framework for Node.js

Run the command below to install all of these libraries.

npm install axios cheerio body-parser express

Modifying package.json

Make the following changes to your package.json file:

...
"main": "src/server.js",
"scripts": {
  "start": "node src/server.js"
},
...

Setting up the application structure

Add files and folders to meet the following directory structure:

.
├── package.json
├── package-lock.json
├── src
│   ├── scraper.js
│   ├── server.js
│   └── utils.js
└── __tests__
    └── scraper.test.js

Setting up the scraper

Add the following lines of code to the file src/scraper.js.

// src/scraper.js
const cheerio = require("cheerio");
const axios = require("axios");
const router = require("express").Router();
const { saveProductJson, generateTitle } = require("./utils");

const baseUrl = "https://www.amazon.com";

router.post("/scrape", async (req, res) => {
  // Get the URL from the request body
  const { url } = req.body;

  // Validate the URL; respond with 400 instead of leaving the request hanging
  if (typeof url !== "string" || !url.includes(baseUrl)) {
    return res.status(400).json({ message: "Invalid URL" });
  }

  try {
    // Get the HTML from the URL (awaited so request failures reach the catch block)
    const response = await axios.get(url);

    // Load the HTML into cheerio
    const $ = cheerio.load(response.data);

    // Create an empty array to store the products
    const products = [];

    // Loop through each product on the page
    $(".s-result-item").each((i, el) => {
      const product = $(el);
      const priceWhole = product.find(".a-price-whole").text();
      const priceFraction = product.find(".a-price-fraction").text();
      const price = priceWhole + priceFraction;
      const link = product.find(".a-link-normal.a-text-normal").attr("href");
      const title = generateTitle(product, link);

      // Add the product only if title, price, and link are all non-empty
      // (attr() returns undefined for a missing href, so check truthiness)
      if (title && price && link) {
        products.push({ title, price, link });
      }
    });

    // Save the products array to a JSON file and keep the returned path
    const filename = saveProductJson(products);

    // Return a success message with the number of products scraped and the filename
    res.json({
      products_saved: products.length,
      message: "Products scraped successfully",
      filename,
    });
  } catch (error) {
    // res.status() sets the code; res.statusCode is a property, not a function
    res.status(500).json({
      message: "Error scraping products",
      error: error.message,
    });
  }
});

// Export the router so it can be used in the server.js file
module.exports = router;

In the above implementation, you have a route /scrape that handles the scraping. Let’s take a detailed look at the entire file.

In the lines below, you import the dependencies your project needs, including the utility functions covered later in the article.

const cheerio = require("cheerio");
const axios = require("axios");
const router = require("express").Router();
const { saveProductJson, generateTitle } = require("./utils");

const baseUrl = "https://www.amazon.com";
...

Following that, you define a route to handle the scraping. The first step in the scraping process is to check that the request body contains a URL pointing to Amazon.

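...
router.post("/scrape", async (req, res) => {
  // Get the URL from the request body
  const { url } = req.body;

  // Validate the URL; respond with 400 instead of leaving the request hanging
  if (typeof url !== "string" || !url.includes(baseUrl)) {
    return res.status(400).json({ message: "Invalid URL" });
  }

  ...
});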
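A substring check such as `url.includes(baseUrl)` also accepts URLs that only mention Amazon. For example, `https://evil.example/?next=https://www.amazon.com` passes. A stricter option is to parse the URL with the WHATWG `URL` class built into Node.js and compare its protocol and hostname. In this sketch, `isAllowedScrapeUrl` and the `ALLOWED_HOSTS` allow-list are hypothetical names, and accepting only HTTPS is a design choice you may want to relax.

```javascript
// Sketch of a stricter check than url.includes(baseUrl): parse the URL and
// compare protocol and hostname instead of searching for a substring.
// ALLOWED_HOSTS is a hypothetical allow-list for this example.
const ALLOWED_HOSTS = new Set(["www.amazon.com", "amazon.com"]);

function isAllowedScrapeUrl(input) {
  // Reject missing or non-string body fields up front
  if (typeof input !== "string") return false;

  let parsed;
  try {
    // new URL() throws a TypeError on malformed input
    parsed = new URL(input);
  } catch {
    return false;
  }
  return parsed.protocol === "https:" && ALLOWED_HOSTS.has(parsed.hostname);
}
```

Inside the route, the check would become `if (!isAllowedScrapeUrl(url)) return res.status(400).json({ message: "Invalid URL" });`.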

The scraper fetches the target page's HTML with Axios, using the URL provided. Cheerio then loads the HTML returned by the website and parses it into a structure you can query with jQuery-style selectors.

With this data ready, you can loop through each product on the search results page and extract its title, price, and link.

...
  try {
    // Get the HTML from the URL (awaited so request failures reach the catch block)
    const response = await axios.get(url);

    // Load the HTML into cheerio
    const $ = cheerio.load(response.data);

    // Create an empty array to store the products
    const products = [];

    // Loop through each product on the page
    $(".s-result-item").each((i, el) => {
      const product = $(el);
      const priceWhole = product.find(".a-price-whole").text();
      const priceFraction = product.find(".a-price-fraction").text();
      const price = priceWhole + priceFraction;
      const link = product.find(".a-link-normal.a-text-normal").attr("href");
      const title = generateTitle(product, link);

      // Add the product only if title, price, and link are all non-empty
      if (title && price && link) {
        products.push({ title, price, link });
      }
    });

    // Save the products array to a JSON file and keep the returned path
    const filename = saveProductJson(products);

    // Return a success message with the number of products scraped and the filename
    res.json({
      products_saved: products.length,
      message: "Products scraped successfully",
      filename,
    });
  } catch (error) {
  ...

Sometimes the scrape returns empty titles. To solve this, you will generate a title from the product URL using generateTitle(), which you will see in the next section.

Take note of the following two snippets from the try block in the code above. First, to avoid empty values, you check that the title, price, and link are all non-empty before adding a product to the products array.

...
if (title && price && link) {
  products.push({ title, price, link });
}
...
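The completeness check can also be written as a reusable predicate that is easier to unit-test. The sketch below is one way to do it. It also rejects whitespace-only strings and non-string values, such as the `undefined` that Cheerio's `.attr()` returns when an element has no `href`. The name `isCompleteProduct` is hypothetical.

```javascript
// Sketch: a reusable completeness check for a scraped product.
// A field counts as present only if it is a string with non-whitespace content,
// so undefined (e.g. from .attr() on a missing attribute) and "   " are rejected.
function isCompleteProduct({ title, price, link }) {
  return [title, price, link].every(
    (value) => typeof value === "string" && value.trim() !== ""
  );
}
```

In the loop, this would replace the inline condition: `if (isCompleteProduct({ title, price, link })) products.push({ title, price, link });`.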

Then you use saveProductJson() to save the products to a JSON file.

...
// Save the products array to a JSON file and keep the returned path
const filename = saveProductJson(products);

// Return a success message with the number of products scraped and the filename
res.json({
  products_saved: products.length,
  message: "Products scraped successfully",
  filename,
});
...

After a successful scrape, res.json() sends the client a JSON response containing the number of saved products, a success message, and the name of the file where the products were stored.
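The scraper stores each price as text: the `.a-price-whole` text joined with the `.a-price-fraction` text. Presumably the whole part already ends in a decimal point, which yields strings such as "29.99". There is a caveat, though. Cheerio's `.text()` joins the text of every matched element, so a result card with more than one price element would produce a run-on string. Calling `.first()` before `.text()` limits the read to one match. If you would rather store numbers, the sketch below uses a hypothetical helper, `toPriceNumber`. It assumes US-style formatting: thousands separators, plus an optional trailing "." on the whole part.

```javascript
// Hypothetical helper: convert the raw .a-price-whole / .a-price-fraction text
// into a number. Assumes US formatting such as "1,299." and "99".
// Returns null when no whole-number part was found.
function toPriceNumber(wholeText, fractionText) {
  // Keep digits only, dropping commas, currency symbols, and the trailing "."
  const whole = String(wholeText ?? "").replace(/[^0-9]/g, "");
  const fraction = String(fractionText ?? "").replace(/[^0-9]/g, "");
  if (whole === "") return null;
  return Number(`${whole}.${fraction || "0"}`);
}
```

In the loop, a call could look like `toPriceNumber(product.find(".a-price-whole").first().text(), product.find(".a-price-fraction").first().text())`. With numeric prices, the completeness check should test `price !== null` rather than truthiness, so a price of 0 is not discarded.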

Writing the utils methods

This file holds utility functions used throughout the project. There are three:

- generateTitle(): When the title selector returns an empty string, this function derives a title from the product link.

- generateFilename(): This function generates a timestamp-based file name.

- saveProductJson(): This function saves the products array to a JSON file.
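`generateFilename()` builds the name from local date and time parts without zero padding. The result is that names such as `2023-1-10-...` sort before `2023-1-5-...` in a directory listing. The sketch below is a padded variant. Two things about it are assumptions, not the tutorial's API: the name `timestampFilename`, and passing the `Date` as a parameter, which makes the function testable. Two files written within the same second would still get the same name. Avoiding that would require a finer-grained or random suffix.

```javascript
// Sketch: zero-padded, lexicographically sortable timestamp filename.
// The date parameter (defaulting to "now") is an assumption for testability.
function timestampFilename(date = new Date()) {
  const pad = (n) => String(n).padStart(2, "0");
  const datePart = `${date.getFullYear()}-${pad(date.getMonth() + 1)}-${pad(date.getDate())}`;
  const timePart = `${pad(date.getHours())}-${pad(date.getMinutes())}-${pad(date.getSeconds())}`;
  return `${datePart}-${timePart}.json`;
}
```

For example, `timestampFilename(new Date(2023, 0, 5, 9, 3, 7))` returns `"2023-01-05-09-03-07.json"`.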

Add the following lines of code to the file src/utils.js.

// src/utils.js
const fs = require("fs");

const generateTitle = (product, link) => {
  // Get the product title
  const title = product
    .find(".a-size-medium.a-color-base.a-text-normal")
    .text();

  // If title is empty
  if (title === "") {
    const urlSegment = link;
    if (typeof urlSegment === "string") {
      const urlSegmentStripped = urlSegment.split("/")[1];
      const title = urlSegmentStripped.replace(/-/g, " ");
      return title;
    }
  }

  return title;
};

// generate unique filenames
const generateFilename = () => {
  const date = new Date();
  const year = date.getFullYear();
  const month = date.getMonth() + 1;
  const day = date.getDate();
  const hour = date.getHours();
  const minute = date.getMinutes();
  const second = date.getSeconds();

  // return a string with the date and time as the filename
  const filename = `${year}-${month}-${day}-${hour}-${minute}-${second}.json`;
  return filename;
};

const saveProductJson = (products) => {
  // create a new file with a unique filename using the generateFilename function
  const filename = generateFilename();

  // JSON.stringify() converts the products array into a string
  const jsonProducts = JSON.stringify(products, null, 2);

  // If env == test create a test data folder, else create a data folder
  const folder = process.env.NODE_ENV === "test" ? "test-data" : "data";

  // create a new folder if it doesn't exist
  if (!fs.existsSync(folder)) {
    fs.mkdirSync(folder);
  }

  // write the file to the data folder
  fs.writeFileSync(`${folder}/${filename}`, jsonProducts);

  // return the path to the file
  return `./data/${filename}`;
};

// Export the functions
module.exports = {
  generateFilename,
  saveProductJson,
  generateTitle,
};
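The fallback in `generateTitle()` takes `link.split("/")[1]`, which assumes a relative link shaped like `/Product-Name/dp/ID`. An absolute link, such as the one in the test's mock data, splits into `["https:", "", "www.amazon.com", ...]`, so the fallback returns an empty string. Links with another shape produce a segment that isn't a product name at all. The sketch below shows a more defensive fallback, under two assumptions. First, product links contain a `/dp/` segment. Second, the slug before `dp` is the product name. `titleFromLink` is a hypothetical name.

```javascript
// Sketch of a more defensive fallback title. It resolves the (possibly relative)
// link against the site origin, takes the path segment just before "dp"
// (assumed /<slug>/dp/<id>/ shape), and turns hyphens into spaces.
function titleFromLink(link, origin = "https://www.amazon.com") {
  if (typeof link !== "string" || link === "") return "";
  try {
    // new URL() ignores the origin when link is already absolute
    const segments = new URL(link, origin).pathname.split("/").filter(Boolean);
    const dpIndex = segments.indexOf("dp");
    // Only trust a slug that sits directly before "dp"
    if (dpIndex < 1) return "";
    return decodeURIComponent(segments[dpIndex - 1]).replace(/-/g, " ");
  } catch {
    // Malformed URL or percent-encoding: no usable title
    return "";
  }
}
```

Inside `generateTitle()`, the empty-title branch could then be reduced to `if (title === "") return titleFromLink(link);`.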

Setting up the server

The server.js file configures the web server. It registers the middleware and routes that handle HTTP requests and responses, and it starts the server.

Create a server.js file in the src directory and add the following code to it.

// src/server.js
const express = require('express');
const bodyParser = require('body-parser');
const scrapeProducts = require('./scraper');

const app = express();
const port = process.env.PORT || 3000;

// Parse URL-encoded and JSON request bodies
app.use(bodyParser.urlencoded({ extended: true }));
app.use(bodyParser.json());

// middleware for routes
app.use('/', scrapeProducts);

// Server listening (declared with const to avoid an implicit global)
const server = app.listen(port, () => {
  console.log(`Server is running on port ${port}`);
});

// Export server for testing
module.exports = server;
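Because this module calls `listen()` as soon as it is required, the test suite starts its own copy of the server on port 3000. A common alternative is to export the request handler and start listening only when the file is run directly. The sketch below illustrates that separation. To keep it dependency-free, it uses `node:http` as a stand-in for Express. The names `handler` and `startServer` are hypothetical.

```javascript
// Sketch of a test-friendly server module, using node:http as a stand-in for
// Express (an assumption made so the example runs without dependencies).
// The request handler is exported separately from the code that listens.
const http = require("node:http");

// Stand-in request handler; in the tutorial, the Express `app` plays this role
function handler(req, res) {
  res.writeHead(200, { "Content-Type": "application/json" });
  res.end(JSON.stringify({ ok: true }));
}

// Start listening only when called; passing port 0 lets the OS pick a free port
function startServer(port = process.env.PORT || 3000) {
  const server = http.createServer(handler);
  server.listen(port, () => {
    console.log(`Server is running on port ${server.address().port}`);
  });
  return server;
}

module.exports = { handler, startServer };
```

To use this pattern, end server.js with `if (require.main === module) startServer();`. The server then starts for `npm start` but not when a test requires the module. With Express, the equivalent is to export `app` and let the test call `request(app)`. Supertest binds an app that is not already listening to an ephemeral port, so the test no longer needs port 3000 or a `server.close()` call.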

Starting the development server

To start the development server, open a terminal in the project directory and run the following command:

npm start

The above command will start the server and print out the message Server is running on port 3000. You can now access the server at http://localhost:3000.

Testing the API endpoint

In this section, you will learn how to test the API endpoint. The endpoint is http://localhost:3000/scrape, and it accepts POST requests whose JSON body contains a url field. You must provide a valid URL to evaluate the scraper’s functionality.

To do this, send a POST request with the following request body to the /scrape endpoint. You can use any HTTP client, such as Postman.

Note: This scraper scrapes products from the Amazon website, so a valid URL points to an Amazon search results page. For this demonstration, you will scrape Amazon for headphones using the link in the JSON object below.

Request body:

{
  "url": "https://www.amazon.com/s?k=all+headphones&crid=2TTXQBOK238J3&qid=1667301526&sprefix=all+headphones%2Caps%2C284&ref=sr_pg_1"
}
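Instead of Postman, you can send the same request from a short Node.js script. The sketch below assumes Node.js 18 or later, which provides a global `fetch`, and a development server on localhost:3000. The function names `buildScrapeRequest` and `scrape` are hypothetical.

```javascript
// Sketch: call the /scrape endpoint from Node.js instead of Postman.
// Assumes Node.js 18+ (global fetch) and the dev server on localhost:3000.
function buildScrapeRequest(targetUrl) {
  return {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ url: targetUrl }),
  };
}

async function scrape(targetUrl, endpoint = "http://localhost:3000/scrape") {
  const response = await fetch(endpoint, buildScrapeRequest(targetUrl));
  // Return both the status code and the parsed JSON body
  return { status: response.status, body: await response.json() };
}

module.exports = { buildScrapeRequest, scrape };
```

With the server running, `scrape("https://www.amazon.com/s?k=all+headphones").then(console.log)` prints the status code and the response body.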

Below is the result of a successful scrape. A new folder, data, is created in the project directory with the scrape results in a JSON file.

Hurray, it works!

Testing the web scraper

Now that you have validated that your scraper works, you can add unit tests to verify the functionality of the web scraper and ensure that new features do not break existing functionality.

The first step in testing the scraper functions is to install the testing libraries: Jest and Supertest. Jest is a JavaScript testing framework that runs on Node.js, and Supertest is a library for testing HTTP servers in Node.js. You’ll also use jest-junit, a Jest reporter that writes test results in JUnit XML format, which CI tools such as CircleCI can read.

To install all three, run the following command.

npm install jest supertest jest-junit --save-dev

Next, create a test file named scraper.test.js in the __tests__/ directory and add the following test code.

// __tests__/scraper.test.js
const request = require("supertest");
const fs = require("fs");
const { generateFilename, saveProductJson } = require("../src/utils");
const server = require("../src/server");

// Build it up, tear it down :)
const cleanTestData = () => {
  // Delete the test data directory synchronously so it is gone before Jest exits
  // (force: true skips the error if the directory does not exist)
  fs.rmSync("./test-data", { recursive: true, force: true });
};

// scraper test suite
describe("scraper", () => {
  // Test unique filename generator (type of test: unit)
  test("generateFilename() returns a string", () => {
    // Assert that the generateFilename function returns a string
    expect(typeof generateFilename()).toBe("string");
    // Assert that the generateFilename function returns a string with a .json extension
    expect(generateFilename()).toMatch(/\.json$/);
  });

  // Test saveProductJson() function (type of test: unit)
  test("saveProductJson() saves a file", () => {
    // Create a mock products array
    const products = [
      {
        title: "Mock Product",
        price: "99.99",
        link: "https://www.amazon.com/Mock-Product/dp/B07YXJ9XZ8",
      },
    ];

    // Call the (synchronous) saveProductJson function with the mock products array
    const savedProducts = saveProductJson(products);

    // Assert that the saveProductJson function returns a string
    expect(typeof savedProducts).toBe("string");
    // Assert that it returns a path starting with ./data/ and ending in .json
    expect(savedProducts).toMatch(/^\.\/data\/.*\.json$/);
  });

  // Test the /scrape route (type of test: integration; needs network access to amazon.com)
  test("POST /scrape returns a 200 status code", async () => {
    // Create a mock request body
    const testScrapeUrl =
      "https://www.amazon.com/s?k=all+headphones&crid=2TTXQBOK238J3&qid=1667301526&sprefix=all+headphones%2Caps%2C284&ref=sr_pg_1";
    const body = {
      url: testScrapeUrl,
    };

    // Make a POST request to the /scrape route
    const response = await request(server).post("/scrape").send(body);

    // Assert that the response status code is 200
    expect(response.statusCode).toBe(200);
    // Assert that the response body has a products_saved property
    expect(response.body).toHaveProperty("products_saved");
  });
});

// close the server and delete the test data directory after all tests have run
afterAll(() => {
  cleanTestData();
  server.close();
});

Before defining the test suite in the test file above, you first import the functions to test and the necessary Node packages.

Storing test data separately from application data keeps the data folder clean and organized. Since the test data is only needed while the tests run, there is no reason to keep it afterward. The cleanTestData() function deletes the test data directory. The afterAll() hook calls it once all tests have finished, and then closes the server.

Running web scraper tests using Jest

To set up Jest as your testing library, change the scripts section in the package.json file to look like this.

...
"scripts": {
  "test": "jest --detectOpenHandles",
  "start": "node src/server.js"
},
...
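jest-junit only writes output when it is registered as a Jest reporter. One way to register it is a jest.config.js file in the project root, sketched below. The `reports` directory and `junit.xml` file name are assumptions: pick whatever path your CI step expects, and add the directory to .gitignore. In CircleCI, a `store_test_results` step pointing at that directory uploads the XML so test results appear in the job's results.

```javascript
// jest.config.js (sketch): keep Jest's default console reporter and add
// jest-junit, which writes JUnit XML. The "reports" folder and "junit.xml"
// file name are assumptions; choose whatever paths your CI step expects.
const jestConfig = {
  testEnvironment: "node",
  reporters: [
    "default",
    ["jest-junit", { outputDirectory: "reports", outputName: "junit.xml" }],
  ],
};

module.exports = jestConfig;
```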

Then execute tests with the following command.

Note: Stop the development server before running the tests. The test file requires src/server.js, which starts its own server on port 3000, so the two would conflict.

npm test

If the tests pass, the output looks similar to this:

> test
> jest

  console.log
    Server is running on port 3000

      at Server.log (src/server.js:15:11)

 PASS  __tests__/scraper.test.js
  scraper
    ✓ generateFilename() returns a string (2 ms)
    ✓ saveProductJson() saves a file (1 ms)
    ✓ POST /scrape returns a 200 status code (3388 ms)

Test Suites: 1 passed, 1 total
Tests:       3 passed, 3 total
Snapshots:   0 total
Time:        3.805 s
Ran all test suites.

Manually testing your code is a good start, but it can quickly become burdensome and error-prone, especially as your application grows in complexity and you add collaborators to your project. In the following section, you’ll learn how to configure CircleCI to run the tests automatically whenever you push changes to an online repository.

Automating tests with CircleCI

In this section, you’ll learn how to configure CircleCI for your project. Continuous integration (CI) ensures that adding more features does not break existing working features. CI can save you time and headaches by automatically running tests and checking for code errors or compatibility issues.

If you haven’t already, be sure to sign up for a free CircleCI account to access up to 6,000 free monthly build minutes.

Step 1: In the project’s root, create a .circleci/config.yml file and add the following configuration.

# .circleci/config.yml
version: '2.1' # Orbs require configuration version 2.1.

orbs: # An orb is a reusable, shareable package of CircleCI configuration:
  # encapsulated, parameterized commands, jobs, and executors that can be used across projects.
  node: circleci/node@5.1.0 # The latest node orb version when this tutorial was written.

workflows: # A workflow is a dependency graph of jobs.
  test: # This workflow contains a single job called "test"
    jobs:
      - node/test # This is the test job defined in the node orb.

Step 2: Create a .gitignore file with the following contents.

# Dependency directories

node_modules/

# scraper generated files

data/

# test generated files

test-data/

The current code structure looks like this:

.
├── .circleci
│   └── config.yml
├── __tests__
│   └── scraper.test.js
├── src
│   ├── scraper.js
│   ├── server.js
│   └── utils.js
├── .gitignore
├── package-lock.json
└── package.json

Step 3: Initialize Git if you haven’t already, commit your changes, and push them to your online repository. If you’re unfamiliar with this process, refer to the Pushing a project to GitHub tutorial. GitLab users can refer to Pushing a project to GitLab.

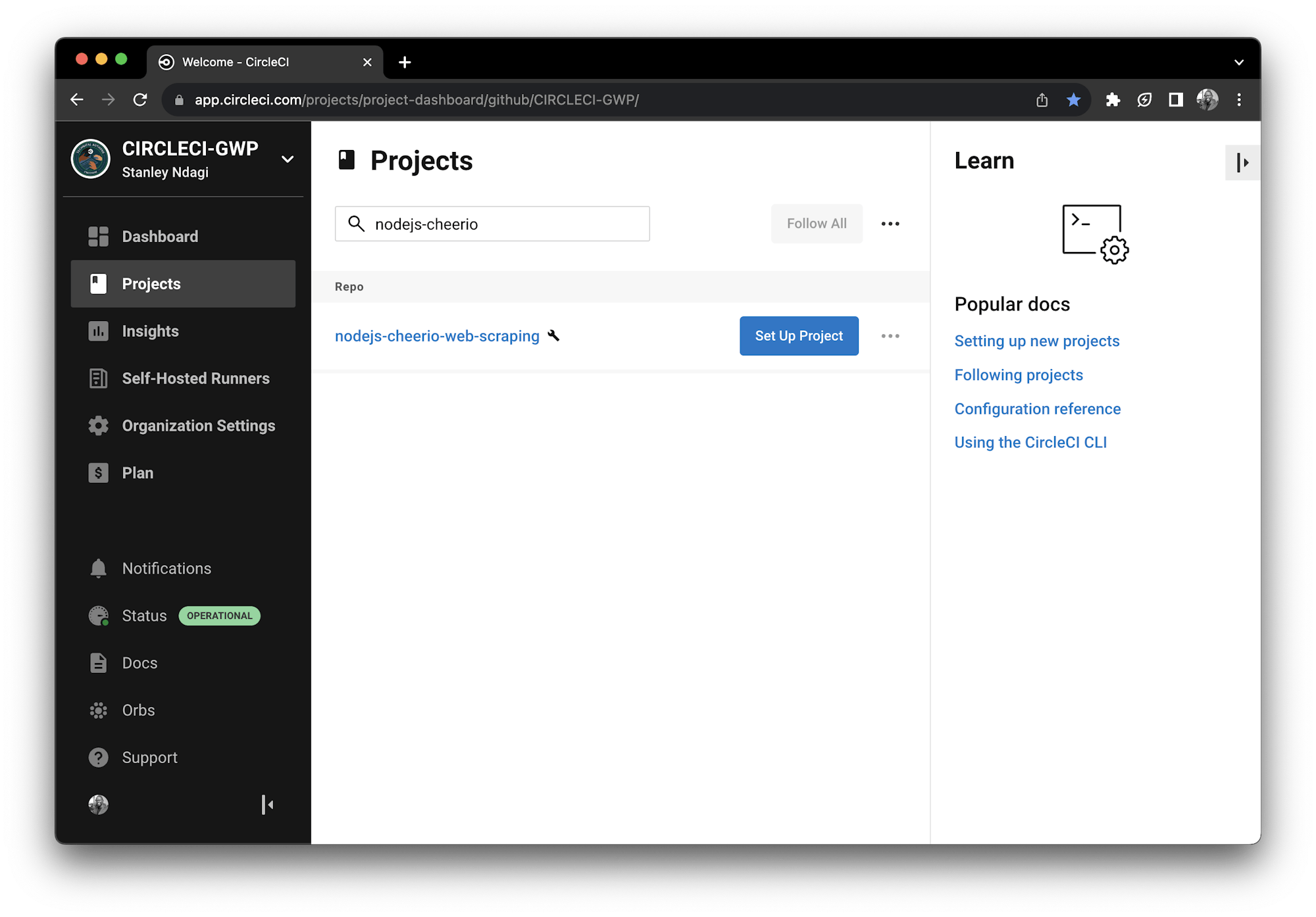

Step 4: Log in to CircleCI and go to the Projects page. Click Set Up Project next to the name of the GitHub repository you just pushed.

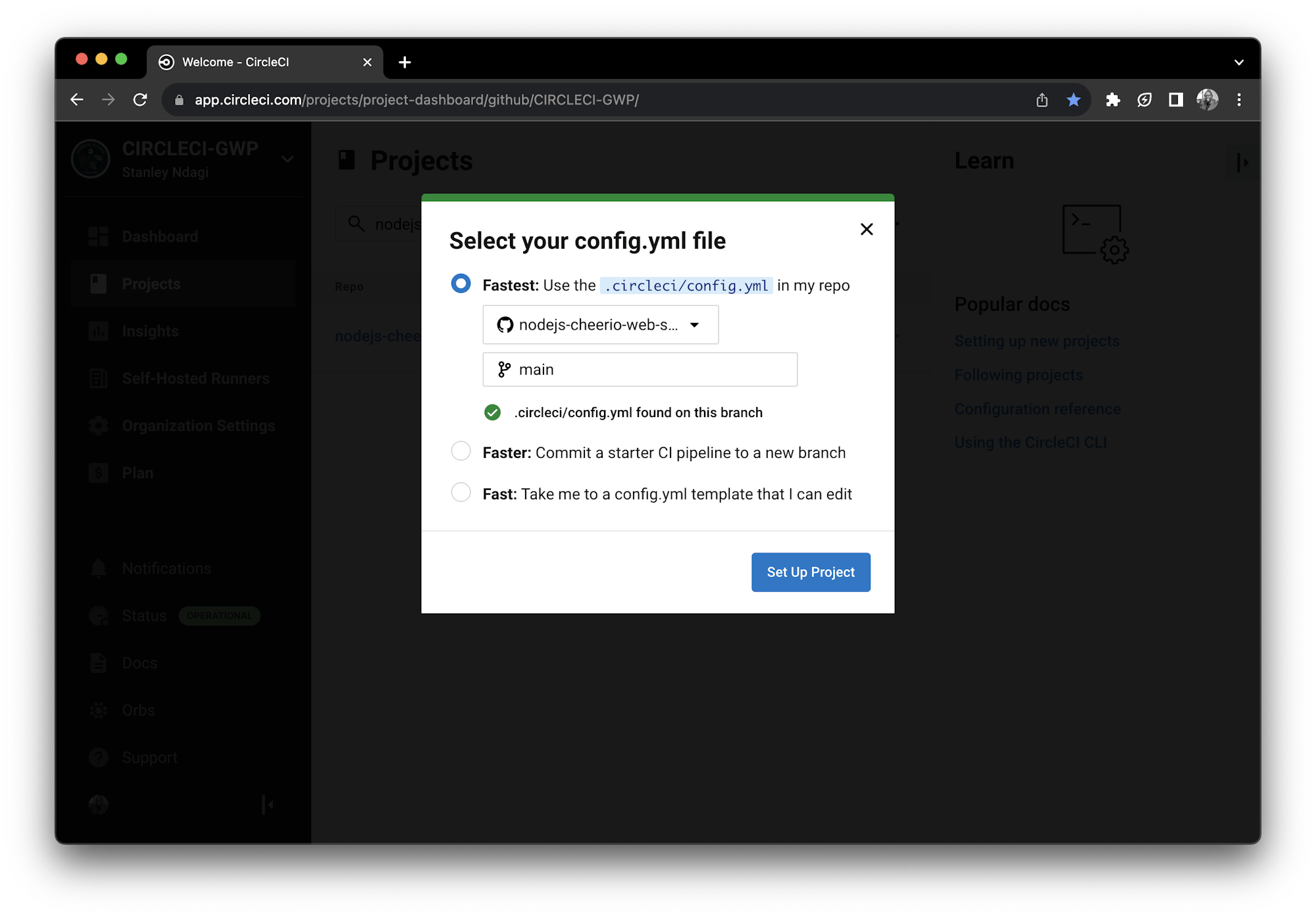

Step 5: Enter the branch name where the CircleCI configuration lives. Click Set Up Project.

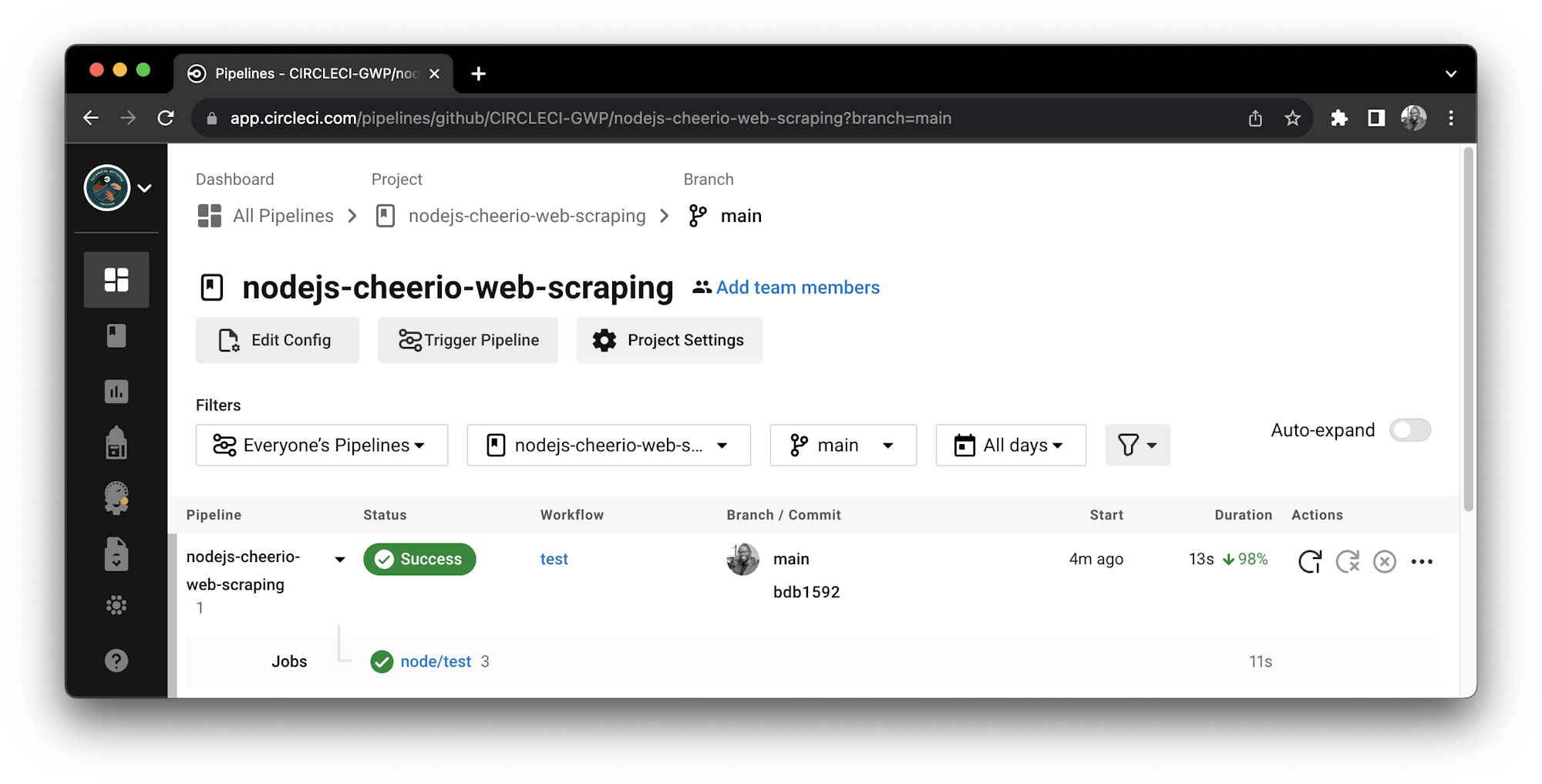

After you click Set Up Project, the workflow starts running, and you should see passing tests, as shown.

Congratulations! You have successfully configured a continuous integration pipeline to run automated tests on your code.

Conclusion

In this article, you learned what web scraping is and how to use it to obtain data. You built a web scraper that gets data from Amazon and stores it in a JSON file using Cheerio, Axios, and Node.js. You also learned how to write tests for your web scraping functions and how to configure CircleCI to run them automatically whenever you make changes to the code.

In an increasingly data-driven world, web scraping allows you to access and analyze vast amounts of information that would be impossible to collect via manual processes. And by adding the power of continuous integration to your development pipeline, you’re now able to iterate and improve upon your web scraper with full confidence that your changes are thoroughly tested, resulting in higher code quality and faster development cycles.

The entire project is available on GitHub for reference.

I hope you enjoyed reading this; until the next one, keep learning!

Waweru Mwaura is a software engineer and a life-long learner who specializes in quality engineering. He is an author at Packt and enjoys reading about engineering, finance, and technology. You can read more about him on his web profile.