LLM hallucinations: How to detect and prevent them with CI

Principal Engineer

Senior Technical Content Marketing Manager

An LLM hallucination occurs when a large language model (LLM) generates a response that is factually incorrect, nonsensical, or disconnected from the input prompt. Hallucinations are a byproduct of the probabilistic nature of language models, which generate responses based on patterns learned from vast datasets rather than factual understanding.

Hallucinations are a major challenge for developers working with AI systems, adding a layer of unpredictability and complexity that can be more difficult to diagnose and fix than traditional software defects. While frequent human review of LLM responses and trial-and-error prompt engineering can help you detect and address hallucinations in your application, this approach is extremely time-consuming and difficult to scale as your application grows.

Fortunately, by combining the emerging field of LLM evaluations (evals) with the proven techniques of continuous integration (CI), you can create a more robust system in which every update to the LLM or its training data triggers a new round of automated evals. In this tutorial, you’ll learn how to set up model-graded evals — using an LLM to evaluate the output of another LLM — for a sample application and automate those evals in a CircleCI pipeline.

Prerequisites

To help demonstrate some of the concepts explained in this tutorial, you will build an AI-powered quiz generator using OpenAI, LangChain, and CircleCI.

Our application consists of the following:

- A quiz bank of facts about science, geography, and art

- A prompt to an LLM (OpenAI's GPT-3.5 Turbo) that takes in the facts in the quiz bank and writes a quiz customized to the student's request, based on the provided facts

- A Python CLI that takes in the user's request and sends it to OpenAI's ChatGPT model

To complete this tutorial you will need:

- An OpenAI API key
- A GitHub account
- A CircleCI account
- Python 3 installed locally

We assume basic familiarity with Python, but no prior experience with AI models is needed.

You can find all the files used in this tutorial in our sample repository. Feel free to fork the repository and follow along.

Using the sample LLM application

The sample LLM application for the tutorial is defined in our app.py file. As described in the system_message prompt, the application is set up to identify the category of quiz requested by the user (art, science, or geography) and return a list of three questions based on the facts provided in the quiz bank.

To use the application, you’ll need to provide your OpenAI API key in a .env file stored in your project directory.
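The tutorial does not show how app.py reads the key. Many projects use the python-dotenv package for this; as a dependency-free illustration only, here is a minimal sketch of a .env loader. It assumes the file contains a line such as OPENAI_API_KEY=your-key-here (OPENAI_API_KEY is the variable the official OpenAI Python client reads by default), and load_env_file is a hypothetical helper, not part of the sample repository.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict[str, str]:
    """Hypothetical helper: read KEY=VALUE lines from a .env file into os.environ.

    Blank lines, comment lines starting with '#', and lines without '=' are
    ignored, and surrounding quotes are stripped from values. Variables that
    are already set in the environment win, so a secret injected by CI is
    not overwritten by a local file.
    """
    loaded: dict[str, str] = {}
    env_path = Path(path)
    if not env_path.exists():
        # Nothing to load, e.g. in CI where secrets come from a context.
        return loaded
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        key = key.strip()
        value = value.strip().strip('"').strip("'")
        loaded[key] = value
        os.environ.setdefault(key, value)
    return loaded
```

Calling load_env_file() before creating the OpenAI or LangChain client makes the key visible through os.environ. Keep .env out of version control; in CI the key should come from a secret store such as a CircleCI context instead.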

Let’s ask our quiz assistant about art:

python app.py "Write a fun quiz about Art"

Here’s the response we get:

Question 1: Who painted the Mona Lisa?

Question 2: What famous painting captures the east-facing view of van Gogh's room in Saint-Rémy-de-Provence?

Question 3: Where is the Louvre, the museum where the Mona Lisa is displayed, located?

Note that you will likely receive a slightly different set of questions. LLMs are probabilistic rather than deterministic, so generative applications can return different responses even when given the same input prompt.

All of these questions are based on facts in our quiz bank, so this looks pretty good. But what happens when our application hallucinates a response?

Example LLM hallucination

To demonstrate a common type of LLM hallucination, we can ask the assistant about a category that is not included in our quiz bank, like math:

python app.py "Write a quiz about math"

The questions we get back are:

Question 1: What is the value of pi (π) to two decimal places?

Question 2: Solve the equation: 2x + 5 = 15

Question 3: What is the square root of 64?

So, even though we didn't give the application any facts about math, it still generated questions drawing on what the model learned from its training data. This is an example of a hallucination.

In this case, these questions are actually solvable, but there are two issues here:

- If we were creating a real quiz application, we wouldn't want to give students questions that aren't part of the curriculum.
- In other applications, such as one that answers questions about the documentation for a software tool, generating responses from outside the provided dataset could give users incorrect or useless information.

To prevent these types of hallucinations from reaching production and negatively affecting your users (and your company’s reputation), you want to run evaluations automatically whenever you make a change to your application. Let’s set up a continuous integration pipeline to do just that.

Automating LLM hallucination detection with CI

Continuous integration is a process that involves making small, frequent updates to a software application and automatically building and testing every change as soon as it happens. This approach helps catch errors early and ensures that defects are limited in scope, making them faster and easier to resolve than issues discovered later in the development process. For teams building with LLMs, CI can automate the testing of new datasets and model updates, helping to ensure that updates do not inadvertently introduce biases or degrade the model's performance.

To set up a CI pipeline in CircleCI, you first need to create a config.yml file in a .circleci directory inside your project. We’ve provided a working config file in the sample repository. Let’s take a look at the contents:

version: 2.1

orbs:
  python: circleci/python@2.1.1

workflows:
  evaluate-commit:
    jobs:
      - run-commit-evals:
          context:
            - dl-ai-courses

jobs:
  run-commit-evals:
    docker:
      - image: cimg/python:3.10.5
    steps:
      - checkout
      - python/install-packages:
          pkg-manager: pip
      - run:
          name: Run assistant evals.
          command: python -m pytest --junitxml results.xml test_hallucinations.py
      - store_test_results:
          path: results.xml

Here are some important details about the config:

- The Python orb handles common tasks for Python applications, like installing dependencies and caching them between CI runs.
- The evaluate-commit workflow runs each time we push our code to the repository. It has one job named run-commit-evals.
- The run-commit-evals job uses a context called dl-ai-courses. Contexts are a place to store secrets like API credentials. You can also use third-party secret stores in your jobs.
- Our job has four steps:
  - checkout clones our repo with the changes we pushed to GitHub.
  - python/install-packages installs our dependencies and caches them.
  - python -m pytest --junitxml results.xml test_hallucinations.py runs our tests and saves the results in JUnit XML format.
  - store_test_results saves the results of our tests so we can easily see what passed and failed.
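In CI, store_test_results turns results.xml into the test summary shown in the CircleCI UI. If you run the same pytest command locally, a short standard-library sketch like the one below can summarize the report. summarize_junit is a hypothetical helper, and the XML layout it expects (testcase elements with optional failure, error, or skipped children) is the common JUnit format that pytest writes.

```python
import xml.etree.ElementTree as ET


def summarize_junit(path: str) -> dict[str, list[str]]:
    """Group test case names in a JUnit XML report by outcome.

    Depending on the pytest version, the root element may be <testsuites>
    or a bare <testsuite>; iter() walks the whole tree, so both work.
    """
    summary: dict[str, list[str]] = {"passed": [], "failed": [], "skipped": []}
    root = ET.parse(path).getroot()
    for case in root.iter("testcase"):
        name = case.get("name", "<unnamed>")
        # A <failure> (assertion failed) or <error> (exception) child means the test did not pass.
        if case.find("failure") is not None or case.find("error") is not None:
            summary["failed"].append(name)
        elif case.find("skipped") is not None:
            summary["skipped"].append(name)
        else:
            summary["passed"].append(name)
    return summary
```

For example, after python -m pytest --junitxml results.xml test_hallucinations.py, calling print(summarize_junit("results.xml")) lists which eval tests passed and which failed.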

To try this out in your own project, create a free CircleCI account and connect your repository.

Detecting hallucinations with CI

We defined a test in test_hallucinations.py to find out whether our application is generating quizzes that contain content not found in our quiz bank.

This is a basic example of a model-graded evaluation, where we use one LLM to review the results of AI-generated output from another LLM.

In our prompt, we provide ChatGPT with the user’s category, the quiz bank, and the generated quiz and ask it to check that the generated quiz only contains facts in the quiz bank. If the user asks about something outside of the provided categories, we want to make sure the quiz generator responds that it can’t generate a quiz about that subject.
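The post does not reproduce test_hallucinations.py, so the following is only a sketch of how a model-graded check like this can be structured, not the repository's actual test. It builds a grading prompt from the quiz bank, the requested category, and the generated quiz, asks a grader model for a one-letter Y/N verdict, and parses the reply strictly. The grader is passed in as a plain function so the logic can run without network access; in the real pipeline it would wrap a ChatGPT call. The names GRADER_TEMPLATE, build_grading_prompt, and grade_quiz, and the Y/N convention, are assumptions.

```python
from typing import Callable

# Hypothetical grading instructions; the wording in the sample repo may differ.
GRADER_TEMPLATE = """You are grading a response produced by a quiz assistant.
The assistant may only use facts from the quiz bank below.

[Quiz bank]
{quiz_bank}

[Requested category]
{category}

[Assistant response]
{quiz}

Answer with a single letter: Y if the response uses only facts from the
quiz bank, or correctly declines a category the quiz bank does not cover.
Answer N otherwise."""


def build_grading_prompt(quiz_bank: str, category: str, quiz: str) -> str:
    """Fill the grader template with the context the grading model needs."""
    return GRADER_TEMPLATE.format(quiz_bank=quiz_bank, category=category, quiz=quiz)


def grade_quiz(
    quiz_bank: str,
    category: str,
    quiz: str,
    grader: Callable[[str], str],
) -> bool:
    """Return True if the grader model judges the response grounded in the quiz bank.

    `grader` takes a prompt and returns the model's raw text reply. In CI it
    would call an LLM; in unit tests it can be a stub.
    """
    reply = grader(build_grading_prompt(quiz_bank, category, quiz))
    verdict = reply.strip().upper()[:1]  # tolerate replies like "y" or "Y."
    if verdict not in {"Y", "N"}:
        # Fail loudly instead of silently passing on an unparseable reply.
        raise ValueError(f"Unexpected grader reply: {reply!r}")
    return verdict == "Y"
```

A pytest test could then assert grade_quiz(quiz_bank, "math", generated_quiz, grader=call_llm), where call_llm is whatever function sends a prompt to the grading model. Treating any reply other than Y or N as an error keeps a confused grader from producing a false green build.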

Let’s run the pipeline as-is, without fixing the hallucination, to make sure our eval is working.

Great! Our model-graded evaluation flagged the output from our quiz generator for including information outside of our provided context (the quiz bank). We can use this information to quickly resolve the issue before pushing this change live to users.

Updating the prompt to address LLM hallucinations

To reduce hallucinations about unknown categories, we can make a small change to our prompt. Let’s add the following text:

If a user asks about another category respond: "I can only generate quizzes for Art, Science, or Geography"

Here’s the complete updated prompt:

Write a quiz for the category the user requests.

## Steps to create a quiz

Step 1:{delimiter} First identify the category user is asking about. Allowed categories are:
* Art
* Science
* Geography
If a user asks about another category respond: "I can only generate quizzes for Art, Science, or Geography"

Step 2:{delimiter} Based on the category, select the facts to generate questions about from the following list:

{quiz_bank}

Step 3:{delimiter} Generate a quiz with three questions for the user.

Use the following format:
Question 1:{delimiter} <question 1>
Question 2:{delimiter} <question 2>
Question 3:{delimiter} <question 3>
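The {delimiter} and {quiz_bank} placeholders suggest the prompt is a template filled in before it is sent to the model. Here is a minimal sketch using Python's str.format, assuming a delimiter of "####" and a tiny stand-in quiz bank (both hypothetical; the sample repository defines its own values and may fill the template through LangChain's prompt templates instead). build_messages returns the role/content message list shape used by OpenAI's chat API.

```python
# Hypothetical values: the sample repo defines its own delimiter and quiz bank.
DELIMITER = "####"

QUIZ_BANK = """1. Subject: Leonardo da Vinci
   Categories: Art
   Facts:
    - Painted the Mona Lisa
    - The Mona Lisa is displayed in the Louvre in Paris"""

# The updated prompt from the post, with placeholders for str.format.
SYSTEM_TEMPLATE = """Write a quiz for the category the user requests.

## Steps to create a quiz

Step 1:{delimiter} First identify the category user is asking about. Allowed categories are:
* Art
* Science
* Geography
If a user asks about another category respond: "I can only generate quizzes for Art, Science, or Geography"

Step 2:{delimiter} Based on the category, select the facts to generate questions about from the following list:

{quiz_bank}

Step 3:{delimiter} Generate a quiz with three questions for the user.

Use the following format:
Question 1:{delimiter} <question 1>
Question 2:{delimiter} <question 2>
Question 3:{delimiter} <question 3>"""


def build_system_message(quiz_bank: str, delimiter: str = DELIMITER) -> str:
    """Fill the delimiter and quiz_bank placeholders in the prompt template."""
    return SYSTEM_TEMPLATE.format(delimiter=delimiter, quiz_bank=quiz_bank)


def build_messages(user_request: str, quiz_bank: str = QUIZ_BANK) -> list[dict[str, str]]:
    """Return a chat-style message list: system prompt first, then the user's request."""
    return [
        {"role": "system", "content": build_system_message(quiz_bank)},
        {"role": "user", "content": user_request},
    ]
```

Keeping the refusal rule inside the system message means every request, including out-of-scope ones like "Write a quiz about math", is answered under the same instructions.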

Let’s push our updated code to trigger a new pipeline run and see if that change addresses the issue.

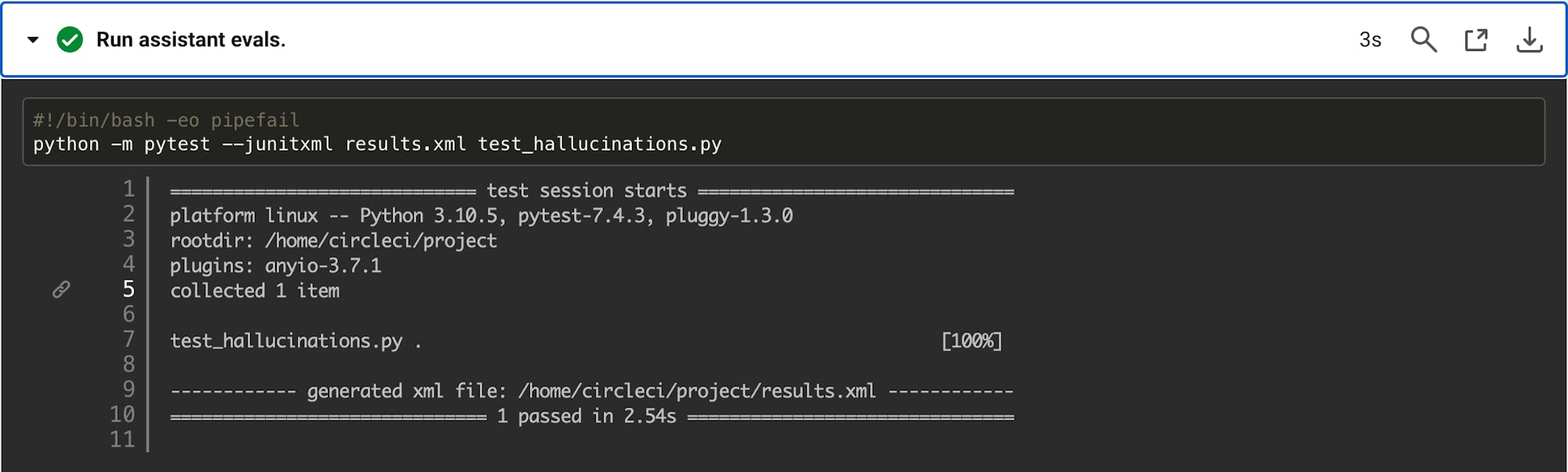

You can click into the details of the Run assistant evals step to see the results of our model-graded evaluation.

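Excellent, our evaluations have passed and we have a green build. Because LLM output varies between runs, one green build does not prove the hallucination can never recur, but it is good evidence that our change fixed this issue and made the quiz generator more accurate and reliable. The eval will keep checking for regressions on every future commit.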
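Because the updated prompt asks for a fixed refusal sentence, out-of-scope requests can also be covered by a cheap deterministic check that runs alongside the model-graded eval. The sketch below is an illustration, not part of the sample repository: is_refusal and looks_like_quiz are hypothetical helpers, and matching a normalized substring (rather than requiring exact equality) is a design assumption meant to tolerate small variations such as surrounding quotes, different capitalization, or a trailing period.

```python
import re

# The refusal sentence the updated prompt asks the model to use.
REFUSAL = "I can only generate quizzes for Art, Science, or Geography"


def _normalize(text: str) -> str:
    """Lowercase, drop straight and curly double/single quotes, and collapse whitespace."""
    text = re.sub(r"[\"'\u201c\u201d]", "", text.lower())
    return re.sub(r"\s+", " ", text).strip()


def is_refusal(response: str) -> bool:
    """True if the response contains the refusal sentence from the prompt."""
    return _normalize(REFUSAL) in _normalize(response)


def looks_like_quiz(response: str) -> bool:
    """True if any line starts with the 'Question N:' format from the prompt."""
    return re.search(r"^\s*Question \d+:", response, flags=re.MULTILINE) is not None
```

For a request like "Write a quiz about math", a test could assert is_refusal(response) and not looks_like_quiz(response). This catches the most obvious regression without an extra LLM call, while the model-graded eval still covers subtler cases where in-scope quizzes include facts outside the quiz bank.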

Conclusion

In this tutorial, you learned the basics of LLM hallucinations and how to quickly detect and resolve them using model-graded evaluations in a continuous integration pipeline. We covered how to use OpenAI's ChatGPT model to build an application, how to write tests that tell whether the model is hallucinating information, and how to run those tests automatically in CI to detect regressions in your application.

Automated testing with CI is an essential practice for teams building software of all types. When dealing with LLMs, it becomes especially important to consistently monitor and evaluate your application performance to safeguard against unexpected output that can be confusing, misleading, or even harmful to your users.

To learn more about how to implement robust testing strategies and improve your LLM application's reliability, be sure to sign up for our free course built in partnership with DeepLearning.AI, Automated Testing for LLMOps.
