Optimizing open source project builds on CircleCI

Solutions Engineer

In a previous blog post, I wrote about how I optimized React Native Camera’s builds on CircleCI. What we learned from that effort was that the maintainers were not even aware of these optimizations. Everything I did is documented for reference, but as the old adage goes, “You don’t know what you don’t know.” So we want to take this opportunity to promote the host of optimizations available to all projects on CircleCI.

Build minutes are precious, especially if you’re an open source project with a limited budget. We have a documentation page dedicated to optimizations, but we’ve chosen to write this blog post to highlight the various features that set our platform apart from others.

Almost all of the following features are free and available to any user, whether on private or public projects. Premium features will be marked as such. As much as I’d love to open PRs to more projects, it isn’t practical. The next best thing that I can do is equip everyone with the knowledge and resources to do it themselves.

CircleCI’s optimization features

CircleCI has a number of features that developers can use to speed up their builds and deployments.

Dependency caching

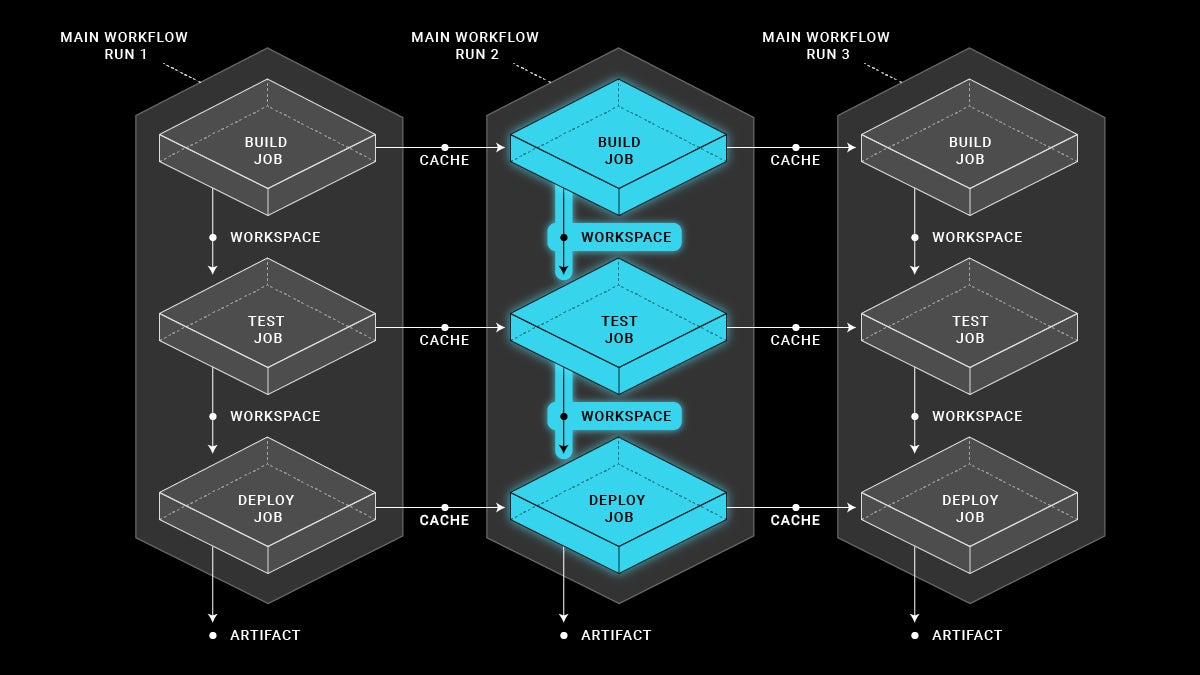

Every job in our system spins up in a new, fresh environment. This prevents contamination from previous jobs and unexpected behavior. However, it also means that subsequent runs of the same job download the same dependencies all over again.

To solve this, we offer dependency caching. You can cache your project’s dependencies, dramatically speeding up the build process.

Example of dependency caching

If you’re building a Node.js application, for example, you would cache node_modules. Each cache is stored under a key, and CircleCI provides a number of templates you can use to build that key.

In the sample below, I’m caching my node_modules folder under a key that includes the SHA256 checksum of package.json. This way, if my dependencies change, the cache key changes too, and a new cache is created for the new dependencies. Some people prefer to use the checksum of their package-lock.json or yarn.lock file instead.

```yaml
version: 2.1

jobs:
  my-job:
    executor: my-executor
    steps:
      - checkout
      - restore_cache:
          keys:
            - v1-dependencies-{{ checksum "package.json" }}
            - v1-dependencies- # Fallback cache
      - run: yarn
      - save_cache:
          key: v1-dependencies-{{ checksum "package.json" }}
          paths:
            - node_modules
```
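If you track a lockfile, a variant keyed on yarn.lock (one of the options mentioned above) might look like the following. This is a minimal sketch assuming Yarn 1.x, where `--frozen-lockfile` makes the install fail if yarn.lock is out of date; `my-executor` is the same placeholder executor used above.

```yaml
version: 2.1

jobs:
  my-job:
    executor: my-executor # Placeholder executor, as in the example above
    steps:
      - checkout
      - restore_cache:
          keys:
            # Exact match: same yarn.lock, same resolved dependency tree
            - v1-dependencies-{{ checksum "yarn.lock" }}
            # Partial match: most recent cache with this prefix
            - v1-dependencies-
      # Fail instead of silently rewriting yarn.lock if it is out of date
      - run: yarn install --frozen-lockfile
      - save_cache:
          key: v1-dependencies-{{ checksum "yarn.lock" }}
          paths:
            - node_modules
```

The `v1-` prefix is just a naming convention: bumping it to `v2-` is a simple way to invalidate every old cache at once.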

For more information and examples, visit our awesome documentation.

Sharing files and artifacts with workspaces

As mentioned in the section above, every job in our system spins up in a new, fresh environment. In order to share files and artifacts between jobs in a workflow, you can use workspaces. Workspaces allow you to easily transfer work from one job to the next to avoid duplicating effort.

Example of shared files across workspaces

In the example below, I’ve split my Go binary build and my Docker image build into two jobs. To avoid cloning the repository again or redoing the build, I transfer the needed files via a workspace.

```yaml
version: 2.1

jobs:
  my-build-job:
    executor: my-executor
    steps:
      - checkout
      - run: go build -o APP_NAME cmd/**/*.go
      - persist_to_workspace:
          root: .
          paths:
            - APP_NAME # The compiled binary, so the next job doesn't rebuild it
            - Dockerfile
            - cmd
            - internal
            - go.mod
            - go.sum

  my-docker-job:
    executor: my-other-executor
    steps:
      - attach_workspace:
          at: .
      - run: docker build -t TAG_NAME .
```
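Workspaces only flow downstream within a single workflow, so the two jobs above also need to be wired together with `requires`. A minimal sketch, assuming a hypothetical workflow name `build-and-package`:

```yaml
workflows:
  build-and-package: # Hypothetical workflow name
    jobs:
      - my-build-job
      - my-docker-job:
          requires:
            # Wait until my-build-job has persisted the workspace
            - my-build-job
```

If `my-other-executor` is a Docker executor rather than a machine executor, the Docker job would also need a `setup_remote_docker` step before running `docker build`.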

For more information and examples, visit our awesome documentation.

Reusing previously-built Docker images

Workspaces are particularly useful for transferring built Docker images between jobs. Many of our customers run Docker builds on our platform, and some of them build the Docker image and test it in separate jobs.

You can transfer saved images between jobs using the docker save and docker load commands.

Example of re-using previously-built Docker images

In this example, I build my Docker image in the first job and write it to docker-cache/image.tar before persisting it to a workspace. The next job attaches that workspace, loads the image, and then runs both the container and the test suite.

```yaml
version: 2.1

jobs:
  my-build:
    executor: my-executor
    steps:
      - checkout
      - setup_remote_docker
      - run:
          name: Build and Save Docker Image
          command: |
            docker build -t MY_APP:MY_TAG .
            mkdir -p docker-cache
            docker save -o docker-cache/image.tar MY_APP:MY_TAG
      - persist_to_workspace:
          root: .
          paths:
            - docker-cache

  my-test:
    machine: true
    steps:
      - checkout # The Maven test suite needs the project sources
      - attach_workspace:
          at: .
      - run:
          name: Load, Run Docker Image and Tests
          command: |
            docker load < docker-cache/image.tar
            docker run -d -p 8080:80 MY_APP:MY_TAG
            mvn test -Dhost=http://localhost:8080
```

Creating custom Docker image executors

Our platform offers various execution environments, one of which is Docker. We provide convenience images for a variety of languages and frameworks, and for most use cases we recommend using these as a starting point. For advanced or complex use cases, however, you can easily build your own custom Docker images to use as executors.

With custom images, you can pre-bake environments with all of your required dependencies and any other setup you need. By default, we pull images from Docker Hub; to pull from another registry (with authentication), specify the full image path, including the registry hostname, in your config:

```yaml
version: 2.1

jobs:
  build:
    docker:
      - image: quay.io/project/IMAGE:TAG
        auth:
          username: $QUAY_USER
          password: $QUAY_PASS
    steps:
      - checkout
      # ... steps here
```
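Because most examples in this post refer to `executor: my-executor`, it may help to see how a custom image can be wrapped in a reusable executor so that every job picks it up. A minimal sketch, reusing the placeholder image path from the example above; `make test` is a hypothetical command standing in for whatever tooling you pre-baked into the image:

```yaml
version: 2.1

executors:
  my-executor:
    docker:
      - image: quay.io/project/IMAGE:TAG # Placeholder image path
        auth:
          username: $QUAY_USER
          password: $QUAY_PASS

jobs:
  build:
    executor: my-executor # Every job using this executor gets the custom image
    steps:
      - checkout
      - run: make test # Hypothetical command; tools come pre-installed in the image
```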

For more information and examples, visit our awesome documentation.

Test splitting and parallelism

You can also use test splitting and parallelism on our platform. By splitting up your tests and running different subsets in parallel, you can dramatically cut the wall-clock time of your test suite. Open source organizations on our Free plan enjoy up to 30x concurrency for Linux, Docker, Arm, and Windows builds, and our Performance plan customers enjoy up to 80x concurrency for all executors. If you’re interested in more resources for your team, contact us today.
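As a rough sketch of what this looks like in config: `parallelism` sets how many containers run the job, and the `circleci tests glob` and `circleci tests split` CLI commands hand each container a different slice of the test files. The glob pattern, the `runTests` script, and the assumption that it writes JUnit XML to /tmp/test-results are all illustrative; splitting by timings relies on timing data from earlier runs stored with `store_test_results`.

```yaml
version: 2.1

jobs:
  test:
    executor: my-executor # Placeholder executor
    parallelism: 4 # Run four copies of this job in parallel
    steps:
      - checkout
      - run: yarn install --frozen-lockfile
      - run:
          name: Run split tests
          command: |
            # Each container receives a different subset of the test files,
            # balanced using timing data from previous runs
            TESTFILES=$(circleci tests glob "test/**/*.test.js" | circleci tests split --split-by=timings)
            yarn runTests -- ${TESTFILES}
      # Stored JUnit results provide the timing data for future splits
      - store_test_results:
          path: /tmp/test-results
```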

For more information and examples, visit our awesome documentation.

Configurable resources

By default, every execution environment runs on the medium resource class, whose exact size varies by executor type. However, our platform gives you the flexibility to configure different resources (vCPUs and RAM) for your builds.

Some jobs don’t need many resources, so you can opt for a small Docker container to save on compute and credits. Other jobs benefit from a 2xlarge or 2xlarge+ container. Either way, CircleCI can be configured to meet your workload’s demands.

Example of using configurable resources

In this example, I’ve configured my build to use the xlarge resource class, which gives me 8 vCPUs and 16 GB of RAM. It’s a single-line change to the configuration.

```yaml
version: 2.1

jobs:
  build:
    docker:
      - image: circleci/node:10.15.1-browsers
    resource_class: xlarge
    steps:
      # ... steps here
```
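To illustrate the other end of the scale, a lightweight job such as linting could request the small resource class instead. This is a sketch: the `lint` job name and the `yarn lint` script are hypothetical, and the image is the same one used above.

```yaml
version: 2.1

jobs:
  lint: # Hypothetical lightweight job
    docker:
      - image: circleci/node:10.15.1-browsers
    resource_class: small # Fewer vCPUs and less RAM, to save credits
    steps:
      - checkout
      - run: yarn install --frozen-lockfile && yarn lint
```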

Docker layer caching

Customers building Docker images can take advantage of a nice feature called Docker layer caching. Just as you can cache your application’s dependencies, you can cache your Docker image layers to speed up subsequent builds of the image.

To make the best use of this feature, put the frequently changing instructions (such as a COPY or ADD of your source code) near the bottom of the Dockerfile and the least frequently changing ones near the top. Docker reuses cached layers only up to the first instruction whose inputs have changed, so this ordering keeps as many layers cached as possible.

Example of Docker layer caching

Using it is as simple as adding one key to the YAML. If you use the Docker executor with remote Docker:

```yaml
jobs:
  build:
    executor: my-executor
    steps:
      - checkout
      - setup_remote_docker:
          docker_layer_caching: true
      # ... other steps here
```

Or, if using our machine executor:

```yaml
jobs:
  build:
    machine:
      docker_layer_caching: true
    steps:
      # ... steps here
```

For more information and examples, visit our awesome documentation.

Convenience features

On top of features for optimizing build times, we also have many convenience features that help boost productivity and improve overall developer experience.

Reusable config and orbs (w/ parameters)

A year ago we rolled out orbs and reusable configuration. Using the new 2.1 config, you’re able to define reusable jobs, executors, and commands, with parameterization, within a project, as well as across projects and organizations. This means a few things for you:

- You can define configuration once and reuse it: This is great for teams who want to define or establish shared executors, jobs, and commands, and maintain them in an orb as a single source of truth. The parameters allow you to plug different inputs into the same job or command logic for maximum reusability.

- You don’t have to re-invent the wheel: As an example, AWS is a widely-used cloud services provider. If you’re deploying to, say, AWS ECS, you are likely aware that others are doing the same. It doesn’t make sense for everyone to essentially rewrite the same configuration, and it turns out you don’t have to.

- You can maintain them like you maintain code: Orbs are just packaged YAML, so they can be versioned, tested, and released like any other code. That means this whole process can be automated and tracked, and you as developers don’t have to learn any new languages or frameworks to make use of orbs.
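Within a single project, the same ideas apply without publishing an orb. A minimal sketch, assuming a Node.js project with hypothetical `lint` and `test` scripts in package.json and the same placeholder `my-executor`: a reusable `install-deps` command wraps the caching steps, and a parameterized `yarn-script` job is invoked twice in the workflow with different arguments. `<< parameters.* >>` values are substituted when the config is processed, while the `{{ checksum }}` template is evaluated at run time.

```yaml
version: 2.1

commands:
  install-deps:
    description: Restore, install, and cache Node.js dependencies
    steps:
      - restore_cache:
          keys:
            - v1-dependencies-{{ checksum "yarn.lock" }}
            - v1-dependencies-
      - run: yarn install --frozen-lockfile
      - save_cache:
          key: v1-dependencies-{{ checksum "yarn.lock" }}
          paths:
            - node_modules

jobs:
  yarn-script:
    parameters:
      script:
        type: string
        description: Name of the package.json script to run
    executor: my-executor # Placeholder executor
    steps:
      - checkout
      - install-deps # Reusable command defined above
      - run: yarn << parameters.script >>

workflows:
  main:
    jobs:
      # The same job definition, invoked twice with different arguments
      - yarn-script:
          name: lint
          script: lint
      - yarn-script:
          name: test
          script: test
```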

Example orb usage

In the example below, I’m deploying my files to AWS S3. Instead of writing many lines of configuration to update the AWS CLI, configure it, and so on, I only had to write five: two lines to “import” the orb, then three lines to use it (with arguments).

```yaml
version: 2.1

orbs: # "Import" the orb
  aws-s3: circleci/aws-s3@2.0.0

jobs:
  deploy:
    docker:
      - image: circleci/python:2.7
    steps:
      - attach_workspace:
          at: .
      - aws-s3/sync: # Using said orb
          from: .
          to: s3://my-awesome-bucket
```

For more information and examples, visit our awesome documentation:

- Authoring orbs

- Reusable configuration and parameters

- Orb registry

- Integrations page

- Configuration reference - orbs

- Configuration reference - commands

- Configuration reference - executors

Databases and services

Did you know you can spin up databases for your tests? Or other service images? Any image listed after the first one under docker is treated as a service image, and it spins up on the same network as the primary execution container.

Example of spinning up multiple services

In the example below, I’m spinning up a Postgres database for testing the application in my primary container. Service containers are always reachable through localhost; if you give one a name, you can also reach it by that hostname.

```yaml
jobs:
  build:
    docker:
      # Primary Executor
      - image: cimg/openjdk:11.0.9
      # Dependency Service(s)
      - image: postgres:10.8
        name: postgres # Can access via postgres:5432
        environment:
          POSTGRES_USER: postgres
          POSTGRES_PASSWORD: postgres
          POSTGRES_DB: postgres
```

If you are using a full machine executor, you can simply treat it like you would any other virtual machine and spin up a network of Docker containers.

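```yaml
jobs:
  build:
    machine: true
    steps:
      - checkout # Provides docker-compose.yml and the app sources
      - run: docker-compose up -d # Detached, so the step doesn't block
      # OR
      - run: docker run -d -p 5432:5432 -e POSTGRES_USER=postgres -e POSTGRES_PASSWORD=postgres postgres
      - run: docker run -d -p 8080:80 MY_APP:MY_TAG
      # ...etc.
```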

For more information and examples, visit our awesome documentation.

Test summaries

Are you running test suites? Our Test Summary feature parses JUnit XML files and stores the data. This lets you see a summary of how many tests you ran and whether all of them passed. If any of them failed, you’ll see which ones, and their output will be accessible right there. It’s a convenient way to get quick access to the information that matters.

Example use of test summary

To implement this, configure your test suite to write its results in JUnit XML format to a file. Then place that file in a directory and point the store_test_results key at that directory:

```yaml
jobs:
  build:
    executor: my-executor
    steps:
      # Other build steps
      - run:
          name: Run TestCafe tests
          command: |
            yarn runTests -- ${TESTFILES} # Outputs > /tmp/test-results/results.xml
      - store_test_results:
          path: /tmp/test-results
      # ...etc.
```


For more information and examples, visit our awesome documentation.

Insights

When we collect build information and test summaries across all your projects, pipelines, workflows, and jobs, we track how long each of them runs, how often it runs, and its success rate. That way, you can easily see which parts of your project’s pipelines are most problematic and should be improved first. You can find this information in the Insights tab of your CircleCI dashboard.

For more information on Insights, read the initial release announcement and the documentation.

If Insights excite you, you might also find our 2020 State of Software Delivery report interesting. In it, we explore data from over 160,000 software projects on build times and how teams work together.

Artifacting

CircleCI’s jobs run in new, clean, and isolated execution environments each time. Consequently, if you want to make any files or build artifacts available for external access, you can use our artifacting feature. Artifacting files makes them available in the Artifacts tab on your Job Details page. Incidentally, since we use AWS S3 for this, you can preview files as if they were statically hosted, as long as the reference paths are correct.

Example artifact creation

This example is the same as above: I’ve run a test suite and output results to /tmp/test-results/results.xml. Then, I artifact these files using the store_artifacts key, and they are now viewable on the Artifacts tab.


```yaml
jobs:
  build:
    executor: my-executor
    steps:
      # Other build steps
      - run:
          name: Run TestCafe tests
          command: |
            yarn runTests -- ${TESTFILES}
      - store_artifacts:
          path: /tmp/test-results
          destination: .
      # ...etc.
```

For more information and examples, visit our awesome documentation.

SSHing into builds

Test output not obvious? Build failing for a reason you can’t identify? You can rerun the job with SSH on our platform. This reruns the same build, but with the SSH port open. Using the same SSH key as your GitHub or Bitbucket account, you can log in to the execution environment while it’s running and debug the build live. With direct access to the environment, you can examine it, tail logs, or poke at anything else to investigate the build.

It’s a highly useful feature for productive debugging, as opposed to trial-and-error from outside the environment.

For more information and examples, visit our awesome documentation.

Building forked pull requests and passing secrets

Open source projects often involve collaboration across forks from community developers. By default, CircleCI only builds branches of your own project on GitHub, not pull requests from external forks. If you also want to build pull requests from forks, you can enable that in your project’s settings, under the Advanced Settings tab.

Your CircleCI pipelines might also need secrets to perform certain actions, such as signing binary packages or interacting with external services (for example, when deploying to a cloud environment). You can enable passing secrets to builds of forked pull requests under the same tab; keep in mind that doing so makes those secrets available to code submitted from forks.

For more information, see the related documentation page.

Conclusion

Out of all the features mentioned above, only three are premium or semi-premium. There are plenty of capabilities to improve build speeds and boost productivity for developers using CircleCI. Our users tell us again and again how much they love our product and enjoy the features we have to offer; from here, we will only be making improvements to the experience.