Automating Python app releases with Pulumi and IaC

Developer Advocate, CircleCI

Continuous delivery enables developers, teams, and organizations to update code and release new features to their customers with little friction. This is possible due to recent culture shifts within teams and organizations as they begin to embrace CI/CD and DevOps practices. Implementing these practices enables teams to better leverage modern tools to build, test, and deploy their software with high confidence and consistency.

Infrastructure as code (IaC) enables teams to easily manage their cloud resources by statically defining and declaring these resources in code, then deploying and dynamically maintaining these resources via code. In this post, I’ll demonstrate how to implement IaC within a CI/CD pipeline. This post also demonstrates how to implement the Pulumi orb into a pipeline. This orb defines and deploys an application to a Google Kubernetes Engine (GKE) cluster. Pulumi will provide the IaC component of this post.

Prerequisites

To follow along with this post, you will need the following:

- A CircleCI account

- A Docker Hub account

- A Google Cloud account

- A GitHub account

- A Pulumi account

- Pulumi installed locally

- Some familiarity with Kubernetes and GKE

- The Python language runtime installed

Getting started

In this post, I’ll demonstrate how to integrate the Pulumi orb into a CircleCI pipeline. The Pulumi orb will be used to deploy a Python application to a GKE cluster on Google Cloud. The application is a simple Flask app that displays a message in the browser. If you have a different application, you can use the same steps to deploy it to GKE.

If you prefer to follow along with the code examples, you can clone the starter branch of this repository here on GitHub using this command:

This will create a new directory called pulumi-project with the starter code, which contains a simple Flask app that displays a message in the browser.

In the next section, you will add Pulumi to this project. It’s easy to set up using the following command:

This will initialize a new Pulumi project within the existing Python codebase and prepare it for defining infrastructure as code.

Pulumi setup

Pulumi enables developers to write code in their favorite language (e.g., JavaScript, Python, Go, etc.), deploying cloud apps and infrastructure easily, without the need to learn specialized DSLs or YAML templating solutions. The use of first-class languages enables abstractions and reuse. Pulumi provides high-level cloud packages in addition to low-level resource definitions across all the major cloud service providers (e.g., AWS, Azure, GCP, etc.) so that you can master one system to deliver to all of them.

To begin, ensure that you have set up accounts with Pulumi and Google Cloud. Next, navigate to the root of your project directory and issue the following command to create a new Pulumi project using the Pulumi CLI:

You will be asked to provide a project name, project description, and stack name. The project name is the name of your Pulumi project, the project description is a short description of your project, and the stack name is the name of the environment you are deploying to (e.g., dev, staging, prod, etc.). For this tutorial, you will use the following values:

- Project name: pulumi-project

- Project description: A minimal Google Cloud Python Pulumi program

- Stack name: k8s

After creating a new Pulumi project, you now have three files at the root of the project directory:

- **Pulumi.yaml** - Specifies metadata about your project.

- **Pulumi.<stack name>.yaml** - Contains configuration values for the stack you initialized. Replace <stack name> with the one you defined when you created the new Pulumi project. For the purpose of this tutorial, you will name this file Pulumi.k8s.yaml.

- **__main__.py** - The Pulumi program that defines your stack resources. This is where the IaC magic happens.

Edit the Pulumi.<stack name>.yaml file and paste this into it:

    config:
      gcp:project: <YOUR_PROJECT_ID>
      gcp:region: <YOUR_REGION>
      gcp:zone: <YOUR_ZONE>
      gke:name: k8s

Replace the placeholders with the values that are specific to your GCP project. The gcp:project value is the project ID of your GCP project. The gcp:region and gcp:zone values are the region and zone where you want to create your GKE cluster. For example, if you want to create a cluster in the us-central1-a zone, you would set the gcp:region value to us-central1 and the gcp:zone value to us-central1-a.

    config:
      gcp:project: cicd-workshops-460410
      gcp:region: us-central1
      gcp:zone: us-central1-a
      gke:name: k8s
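Because a GCP zone name is its region name plus a short suffix, the two config values can be checked against each other before deploying. The following is a minimal sketch, assuming the usual `<region>-<letter>` zone naming; `region_from_zone` and `zone_matches_region` are hypothetical helpers, not part of Pulumi or the Google Cloud SDK.

```python
def region_from_zone(zone: str) -> str:
    """Derive the region from a zone name, e.g. 'us-central1-a' -> 'us-central1'."""
    region, sep, suffix = zone.rpartition("-")
    if not sep or not region or not suffix:
        raise ValueError(f"not a zone name: {zone!r}")
    return region


def zone_matches_region(zone: str, region: str) -> bool:
    """Return True when the zone belongs to the given region."""
    return region_from_zone(zone) == region
```

With the example values above, `zone_matches_region("us-central1-a", "us-central1")` is true, while a zone from another region would be flagged.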

Defining infrastructure with Pulumi

Now that you’ve initialized your Pulumi project, the next step is to define the cloud infrastructure using Python. This includes provisioning a GKE cluster, deploying your application, and exposing it through a load balancer. All of this is managed declaratively in code using Pulumi’s SDK.

Open the __main__.py file and replace its contents with the following:

    import os
    import pulumi
    import pulumi_kubernetes
    from pulumi import ResourceOptions
    from pulumi_kubernetes.apps.v1 import Deployment
    from pulumi_kubernetes.core.v1 import Namespace, Pod, Service
    from pulumi_gcp import container

    conf = pulumi.Config('gke')
    gcp_conf = pulumi.Config('gcp')

    stack_name = conf.require('name')
    gcp_project = gcp_conf.require('project')
    gcp_zone = gcp_conf.require('zone')
    location = gcp_conf.require("zone")

    app_name = 'cicd-app'
    app_label = {'appClass':app_name}
    cluster_name = app_name

    image_tag = ''
    if 'CIRCLE_SHA1' in os.environ:
        image_tag = os.environ['CIRCLE_SHA1']
    else:
        image_tag = 'latest'

    docker_image = 'yemiwebby/orb-pulumi-gcp:{0}'.format(image_tag)

    machine_type = 'e2-small'

    cluster = container.Cluster(
        cluster_name,
        location=location,
        initial_node_count=2,
        min_master_version='latest',
        node_version='latest',
        node_config={
            'machine_type': machine_type,
            'disk_type': 'pd-standard',
            'disk_size_gb': 100,
            'oauth_scopes': [
                "https://www.googleapis.com/auth/compute",
                "https://www.googleapis.com/auth/devstorage.read_only",
                "https://www.googleapis.com/auth/logging.write",
                "https://www.googleapis.com/auth/monitoring",
            ]
        }
    )

    # Set the Kubeconfig file values here
    def generate_k8_config(master_auth, endpoint, context):
        config = '''apiVersion: v1
    clusters:
    - cluster:
        certificate-authority-data: {masterAuth}
        server: https://{endpoint}
      name: {context}
    contexts:
    - context:
        cluster: {context}
        user: {context}
      name: {context}
    current-context: {context}
    kind: Config
    preferences: {prefs}
    users:
    - name: {context}
      user:
        exec:
          apiVersion: "client.authentication.k8s.io/v1beta1"
          command: "gke-gcloud-auth-plugin"
          provideClusterInfo: true
    '''.format(masterAuth=master_auth, context=context, endpoint=endpoint,
               prefs='{}')
        return config

    gke_masterAuth = cluster.master_auth.cluster_ca_certificate
    gke_endpoint = cluster.endpoint
    gke_context = gcp_project+'_'+gcp_zone+'_'+cluster_name

    k8s_config = pulumi.Output.all(gke_masterAuth,gke_endpoint,gke_context).apply(lambda args: generate_k8_config(*args))

    cluster_provider = pulumi_kubernetes.Provider(cluster_name, kubeconfig=k8s_config)
    ns = Namespace(cluster_name, opts=ResourceOptions(provider=cluster_provider))

    gke_deployment = Deployment(
        app_name,
        metadata={
            'namespace': ns,
            'labels': app_label,
        },
        spec={
            'replicas': 3,
            'selector':{'matchLabels': app_label},
            'template':{
                'metadata':{'labels': app_label},
                'spec':{
                    'containers':[
                        {
                            'name': app_name,
                            'image': docker_image,
                            'ports':[{'name': 'port-5000', 'container_port': 5000}]
                        }
                    ]
                }
            }
        },
        opts=ResourceOptions(provider=cluster_provider)
    )

    deploy_name = gke_deployment

    gke_service = Service(
        app_name,
        metadata={
            'namespace': ns,
            'labels': app_label,
        },
        spec={
            'type': "LoadBalancer",
            'ports': [{'port': 80, 'target_port': 5000}],
            'selector': app_label,
        },
        opts=ResourceOptions(provider=cluster_provider)
    )

    pulumi.export("kubeconfig", k8s_config)
    pulumi.export("app_endpoint_ip", gke_service.status['load_balancer']['ingress'][0]['ip'])

This Pulumi program defines a complete infrastructure pipeline for deploying a containerized Python application to Google Kubernetes Engine (GKE). It begins by creating a GKE cluster with two nodes using the e2-small machine type. It then dynamically generates a kubeconfig file using output values from the cluster resource and sets up the Kubernetes provider accordingly. Within this configured context, the code provisions a dedicated namespace and deploys the Flask application using a Deployment resource with three replicas.

To make the application accessible externally, a LoadBalancer type Service is created, routing external traffic on port 80 to port 5000 inside the container. The image used for deployment is tagged using the Git SHA from CircleCI when available, allowing for consistent and traceable deployments across pipeline runs. You should update the docker_image variable with your own image name from Docker Hub or any other container registry you use.
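The image tag selection described above can be isolated into a small function, which makes it easy to check outside of a pipeline run. This is a sketch only: `resolve_image` is a hypothetical helper and `example/app` is a placeholder repository name, but the behavior mirrors the `CIRCLE_SHA1` check in the program.

```python
import os
from typing import Mapping


def resolve_image(repo: str, env: Mapping[str, str] = os.environ) -> str:
    """Return 'repo:<CIRCLE_SHA1>' inside CircleCI, otherwise 'repo:latest'."""
    # CircleCI sets CIRCLE_SHA1 to the commit being built; locally it is absent.
    tag = env.get("CIRCLE_SHA1", "latest")
    return f"{repo}:{tag}"
```

Passing the environment as a parameter keeps the function testable: `resolve_image("example/app", {})` falls back to the `latest` tag, while a mapping containing `CIRCLE_SHA1` yields a commit-specific tag.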

If you want to learn more about defining infrastructure using Pulumi in Python, check out the official Pulumi Python documentation.

Update dependencies

The Pulumi project includes a requirements.txt file that specifies the Python dependencies needed to run your infrastructure and application code. Before proceeding, ensure that this file includes the required libraries for Pulumi, Kubernetes, and your Python app. You can copy and update the contents as shown below:

    pulumi>=3.0.0,<4.0.0
    pulumi-gcp>=8.0.0,<9.0.0
    pulumi-kubernetes>=4.0.0,<5.0.0
    flask
    pyinstaller
    pytest
    pylint
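After installing these dependencies, it can help to confirm which versions actually landed in your environment. The sketch below uses only the standard library's `importlib.metadata`; `installed_versions` is a hypothetical helper name, and it reports `None` for anything that is not installed rather than checking the version ranges above.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if missing."""
    versions: Dict[str, Optional[str]] = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions
```

For example, `installed_versions(["pulumi", "pulumi-gcp", "pulumi-kubernetes"])` returns a dictionary you can compare by eye against the ranges in requirements.txt.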

Google Cloud setup

Before deploying infrastructure using Pulumi, you’ll need to configure access to your Google Cloud environment. This includes setting up credentials that allow both your local Pulumi CLI and CI/CD pipeline to authenticate and interact with Google Cloud resources.

Create a GCP project

While a default project is often created for new Google Cloud accounts, it’s a good practice to create a separate project specifically for this tutorial. This makes it easier to manage billing, permissions, and clean-up once you’re done testing. After creating the new project, make sure to note the Project ID. This is the unique identifier you’ll use in your configuration files and CLI commands, and it’s different from the display name.

Enable required APIs

Once your project is created, navigate to the APIs & Services > Enabled APIs & Services section in the Google Cloud Console.

Click ENABLE APIS AND SERVICES, then search for the following APIs and enable them:

- Kubernetes Engine API

- Compute Engine API

- Cloud Resource Manager API

- IAM Service Account Credentials API

These APIs are necessary for provisioning clusters, managing compute resources, and granting secure access through service accounts.

Getting project credentials

Next, you’ll need to create a service account key that Pulumi can use to provision resources in your GCP project. Visit the Create service account key page in the Google Cloud Console. You can either use the default service account or create a new one. Make sure to select JSON as the key type and click Create. Save the downloaded .json file inside your project’s directory.

Important security note: Rename the file to cicd_demo_gcp_creds.json, the file name used throughout this tutorial, and add it to your .gitignore to prevent it from being committed to your repository. Be extremely cautious with this file. If it gets into the wrong hands, it could be used to access your cloud resources and incur charges.
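A quick way to double-check the .gitignore step is to look for the credentials file name in the ignore file. The following is a deliberately simple sketch: `is_listed_in_gitignore` is a hypothetical helper that only recognizes literal entries, not wildcard patterns or negations, so `git check-ignore` remains the authoritative check.

```python
def is_listed_in_gitignore(gitignore_text: str, filename: str) -> bool:
    """Return True if filename appears as a literal entry in .gitignore text."""
    for line in gitignore_text.splitlines():
        entry = line.strip()
        # Skip blank lines and comments.
        if not entry or entry.startswith("#"):
            continue
        # Treat '/name' (anchored to the repo root) the same as 'name'.
        if entry.lstrip("/") == filename:
            return True
    return False
```

You could call it with the contents of your .gitignore and `"cicd_demo_gcp_creds.json"` before your first commit.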

Assign required roles to the service account

After generating your service account, you’ll need to assign the correct IAM roles to allow Pulumi to provision infrastructure.

- Go to IAM & Admin > IAM in the Google Cloud Console.

- Find the email of the service account you just created.

- Click the pencil icon next to its entry to edit roles.

- Assign these roles:

  - Kubernetes Engine Admin

  - Compute Admin

  - Service Account User

Note: If you created a new service account from scratch, you can also assign these roles directly during creation under the Permissions step.

These permissions allow the Pulumi program (and your CI/CD pipeline) to:

- Create and manage GKE clusters

- Allocate IP addresses and compute resources

- Use the service account credentials securely in automation

CircleCI setup

With GCP credentials in place, the next step is to prepare your CI/CD pipeline for deployment. To securely inject these credentials into CircleCI, you need to encode the file into a base64 string.

Encode the Google Service Account file

To store your credentials as an environment variable in CircleCI, run the following command in your terminal to convert the .json file to a base64-encoded string:

The output will look something like this:

ewogICJ0eXBlIjogInNlcnZpY2VfYWNjb3VudCIsCiAgInByb2plY3RfaWQiOiAiY2ljZC13b3Jrc2hvcHMiLAogICJwcml2YXRlX2tleV9pZCI6ICJiYTFmZDAwOThkNTE1ZTE0NzE3ZjE4NTVlOTY1NmViMTUwNDM4YTQ4IiwKICAicHJpdmF0ZV9rZXkiOiAiLS0tLS1CRUdJTiBQUklWQVRFIEtFWS0tLS0tXG5NSUlFdlFJQkFEQU5CZ2txaGtpRzl3MEJBUUVGQUFTQ0JLY3dnZ1NqQWdFQUFvSUJBUURjT1JuRXFla3F4WUlTXG5UcHFlYkxUbWdWT3VzQkY5NTE1YkhmYWNCVlcyZ2lYWjNQeFFBMFlhK2RrYjdOTFRXV1ZoRDZzcFFwWDBxY2l6XG5GdjFZekRJbXkxMCtHYnlUNWFNV2RjTWw3ZlI2TmhZay9FeXEwNlc3U0FhV0ZnSlJkZjU4U0xWcC8yS1pBbjZ6XG5BTVdHZjM5RWxSNlhDaENmZUNNWXorQmlZd29ya3Nob3BzLmlhbS5nc2VydmljZWFjY291bnQuY29tIgp9Cg==

Copy the result to your clipboard. You will store it as the GOOGLE_CLOUD_KEYS environment variable in CircleCI later in this post.
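If you prefer to do the encoding from Python rather than the shell, the standard library's `base64` module produces the same kind of single-line string. This is a minimal sketch; `encode_key` and `encode_key_file` are hypothetical helper names, and the file path is whatever you named your key file.

```python
import base64
from pathlib import Path


def encode_key(data: bytes) -> str:
    """Return the bytes as a single-line base64 string (no line wrapping)."""
    return base64.b64encode(data).decode("ascii")


def encode_key_file(path: str) -> str:
    """Read a service account key file and return its base64 encoding."""
    return encode_key(Path(path).read_bytes())
```

Calling `encode_key_file("cicd_demo_gcp_creds.json")` from the project directory returns the string to paste into CircleCI.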

CI/CD pipeline with Pulumi integration

Now that your application, Pulumi setup, and GCP credentials are ready, it’s time to integrate everything into your CircleCI pipeline using the Pulumi orb. The configuration file below defines a complete CI/CD process that builds, tests, packages, and deploys your app to Google Kubernetes Engine (GKE).

To see the full example project, view the repo on GitHub here. I’ll guide you through and explain the important Pulumi integration bits of this example config so that you have a clear understanding of what’s going on.

    version: 2.1

    orbs:
      pulumi: pulumi/pulumi@2.1.0

    jobs:
      build_test:
        docker:
          - image: cimg/python:3.13.3
            environment:
              PIPENV_VENV_IN_PROJECT: "true"
        steps:
          - checkout
          - run:
              name: Install Python Dependencies
              command: |
                pipenv install --skip-lock
          - run:
              name: Run Tests
              command: |
                pipenv run pytest
      build_push_image:
        docker:
          - image: cimg/python:3.13.3
        steps:
          - checkout
          - setup_remote_docker:
              docker_layer_caching: false
          - run:
              name: Build and push Docker image
              command: |
                pipenv install --skip-lock
                pipenv run pyinstaller -F hello_world.py
                echo 'export TAG=${CIRCLE_SHA1}' >> $BASH_ENV
                echo 'export IMAGE_NAME=orb-pulumi-gcp' >> $BASH_ENV
                source $BASH_ENV
                docker build -t $DOCKER_LOGIN/$IMAGE_NAME -t $DOCKER_LOGIN/$IMAGE_NAME:$TAG .
                echo $DOCKER_PWD | docker login -u $DOCKER_LOGIN --password-stdin
                docker push $DOCKER_LOGIN/$IMAGE_NAME
                docker push $DOCKER_LOGIN/$IMAGE_NAME:$TAG
      deploy_to_gcp:
        docker:
          - image: cimg/python:3.13.3
            environment:
              CLOUDSDK_PYTHON: "/usr/bin/python3"
              GOOGLE_SDK_PATH: "~/google-cloud-sdk/"
        steps:
          - checkout
          - pulumi/login:
              access-token: ${PULUMI_ACCESS_TOKEN}
          - run:
              name: Install Python and GCP SDK Dependencies
              command: |
                cd ~/
                pip install --upgrade pip
                pip install pulumi pulumi-gcp pulumi-kubernetes
                curl -o gcp-cli.tar.gz https://dl.google.com/dl/cloudsdk/channels/rapid/google-cloud-sdk.tar.gz
                tar -xzvf gcp-cli.tar.gz
                mkdir -p ${HOME}/project/pulumi-project
                echo ${GOOGLE_CLOUD_KEYS} | base64 --decode --ignore-garbage > ${HOME}/project/pulumi-project/cicd_demo_gcp_creds.json
                ./google-cloud-sdk/install.sh --quiet
                echo 'export PATH=$PATH:$HOME/google-cloud-sdk/bin' >> $BASH_ENV
                source $BASH_ENV
                gcloud auth activate-service-account --key-file ${HOME}/project/pulumi-project/cicd_demo_gcp_creds.json
          - run:
              name: Set GOOGLE_APPLICATION_CREDENTIALS
              command: |
                echo "export GOOGLE_APPLICATION_CREDENTIALS=${HOME}/project/pulumi-project/cicd_demo_gcp_creds.json" >> $BASH_ENV
                source $BASH_ENV
          - run:
              name: Set gcloud Project
              command: |
                gcloud config set project $PROJECT_ID
          - run:
              name: Install GKE Auth Plugin via gcloud
              command: |
                source $BASH_ENV
                gcloud components install gke-gcloud-auth-plugin --quiet
          - pulumi/refresh:
              stack: k8s
              working_directory: ${HOME}/project/pulumi-project/
          - pulumi/update:
              stack: k8s
              working_directory: ${HOME}/project/pulumi-project/

    workflows:
      build_test_deploy:
        jobs:
          - build_test
          - build_push_image:
              requires:
                - build_test
          - deploy_to_gcp:
              requires:
                - build_push_image

The following snippet from the top of the config specifies that the Pulumi orb will be used in this pipeline:

    orbs:
      pulumi: pulumi/pulumi@2.1.0

This pipeline is divided into three stages:

- build_test: Installs dependencies and runs your test suite using pytest.

- build_push_image: Builds the Flask app into a single binary using pyinstaller, creates a Docker image, and pushes it to Docker Hub.

- deploy_to_gcp: Sets up GCP credentials, installs the Google Cloud SDK and Pulumi, and runs pulumi refresh followed by pulumi update to deploy your infrastructure and app to GKE.
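The order of these stages follows from the `requires` entries in the workflow: a job starts only after the jobs it requires have succeeded. As an illustration (this is not how CircleCI is implemented), the dependency graph can be resolved with the standard library's `graphlib`; the `requires` dictionary below is copied by hand from the workflow in this post, and `run_order` is a hypothetical helper.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each job mapped to the set of jobs it requires, as declared in the workflow.
requires: Dict[str, Set[str]] = {
    "build_test": set(),
    "build_push_image": {"build_test"},
    "deploy_to_gcp": {"build_push_image"},
}


def run_order(graph: Dict[str, Set[str]]) -> List[str]:
    """Return the jobs in an order that respects every 'requires' edge."""
    return list(TopologicalSorter(graph).static_order())
```

Because each job here depends on exactly one predecessor, there is only one valid order: test, then build and push, then deploy.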

I’ll be focusing on the build_push_image and the deploy_to_gcp jobs.

build_push_image:

    build_push_image:
      docker:
        - image: cimg/python:3.13.3
      steps:
        - checkout
        - setup_remote_docker:
            docker_layer_caching: false
        - run:
            name: Build and push Docker image
            command: |
              pipenv install --skip-lock
              pipenv run pyinstaller -F hello_world.py
              echo 'export TAG=${CIRCLE_SHA1}' >> $BASH_ENV
              echo 'export IMAGE_NAME=orb-pulumi-gcp' >> $BASH_ENV
              source $BASH_ENV
              docker build -t $DOCKER_LOGIN/$IMAGE_NAME -t $DOCKER_LOGIN/$IMAGE_NAME:$TAG .
              echo $DOCKER_PWD | docker login -u $DOCKER_LOGIN --password-stdin
              docker push $DOCKER_LOGIN/$IMAGE_NAME
              docker push $DOCKER_LOGIN/$IMAGE_NAME:$TAG

After the application passes its tests, the build_push_image job packages the application into a single executable binary. It then kicks off the docker build command, which builds a new Docker image based on a Dockerfile that lives in the project's repo. This job also uses existing environment variables and defines some new ones, which are used to specify unique Docker image names. Below is the Dockerfile for this project:

    FROM python:3.13.3-slim
    WORKDIR /opt/hello_world
    COPY dist/hello_world .
    RUN chmod +x ./hello_world
    EXPOSE 5000
    CMD ["./hello_world"]

This image matches the configuration in your Pulumi Kubernetes service setup, which maps external port 80 to internal app port 5000.

The docker push command uploads the newly built Docker image to Docker Hub for storage and future retrieval.

deploy_to_gcp:

    deploy_to_gcp:
      docker:
        - image: cimg/python:3.13.3
          environment:
            CLOUDSDK_PYTHON: "/usr/bin/python3"
            GOOGLE_SDK_PATH: "~/google-cloud-sdk/"
      steps:
        - checkout
        - pulumi/login:
            access-token: ${PULUMI_ACCESS_TOKEN}
        - run:
            name: Install Python and GCP SDK Dependencies
            command: |
              cd ~/
              pip install --upgrade pip
              pip install pulumi pulumi-gcp pulumi-kubernetes
              curl -o gcp-cli.tar.gz https://dl.google.com/dl/cloudsdk/channels/rapid/google-cloud-sdk.tar.gz
              tar -xzvf gcp-cli.tar.gz
              mkdir -p ${HOME}/project/pulumi-project
              echo ${GOOGLE_CLOUD_KEYS} | base64 --decode --ignore-garbage > ${HOME}/project/pulumi-project/cicd_demo_gcp_creds.json
              ./google-cloud-sdk/install.sh --quiet
              echo 'export PATH=$PATH:$HOME/google-cloud-sdk/bin' >> $BASH_ENV
              source $BASH_ENV
              gcloud auth activate-service-account --key-file ${HOME}/project/pulumi-project/cicd_demo_gcp_creds.json
        - run:
            name: Set GOOGLE_APPLICATION_CREDENTIALS
            command: |
              echo "export GOOGLE_APPLICATION_CREDENTIALS=${HOME}/project/pulumi-project/cicd_demo_gcp_creds.json" >> $BASH_ENV
              source $BASH_ENV
        - run:
            name: Set gcloud Project
            command: |
              gcloud config set project $PROJECT_ID
        - run:
            name: Install GKE Auth Plugin via gcloud
            command: |
              source $BASH_ENV
              gcloud components install gke-gcloud-auth-plugin --quiet
        - pulumi/refresh:
            stack: k8s
            working_directory: ${HOME}/project/pulumi-project/
        - pulumi/update:
            stack: k8s
            working_directory: ${HOME}/project/pulumi-project/

The deploy_to_gcp job specified above is the portion of the pipeline that uses the Pulumi program and orb to stand up the new GKE cluster on GCP. Below, I'll briefly guide you through it.

    - pulumi/login:
        access-token: ${PULUMI_ACCESS_TOKEN}

The above code runs the Pulumi orb's login command. The access-token parameter is passed the ${PULUMI_ACCESS_TOKEN} environment variable, which you set in the CircleCI dashboard.

    curl -o gcp-cli.tar.gz https://dl.google.com/dl/cloudsdk/channels/rapid/google-cloud-sdk.tar.gz
    tar -xzvf gcp-cli.tar.gz
    mkdir -p ${HOME}/project/pulumi-project
    echo ${GOOGLE_CLOUD_KEYS} | base64 --decode --ignore-garbage > ${HOME}/project/pulumi-project/cicd_demo_gcp_creds.json
    ./google-cloud-sdk/install.sh --quiet
    echo 'export PATH=$PATH:$HOME/google-cloud-sdk/bin' >> $BASH_ENV
    source $BASH_ENV
    gcloud auth activate-service-account --key-file ${HOME}/project/pulumi-project/cicd_demo_gcp_creds.json

These commands download and install the Google Cloud SDK, which is required to create and modify GKE clusters on GCP. The first two lines download and unpack the SDK. The echo ${GOOGLE_CLOUD_KEYS} | base64 --decode... command decodes the ${GOOGLE_CLOUD_KEYS} environment variable and writes the decoded contents to cicd_demo_gcp_creds.json. This file must exist in the Pulumi project's directory. The remaining commands in this run: block install the SDK and add it to the PATH, and the last line authorizes the service account to access GCP via the cicd_demo_gcp_creds.json file.
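To check locally that the value you stored in GOOGLE_CLOUD_KEYS decodes to a usable key, the decoding step can be reproduced in Python. This is a sketch under stated assumptions: `decode_service_account_key` is a hypothetical helper, and the check on the `type` field relies on Google service account key files containing `"type": "service_account"`.

```python
import base64
import json


def decode_service_account_key(encoded: str) -> dict:
    """Decode a base64 string and return the service account key as a dict."""
    # With validate=False (the default), characters outside the base64
    # alphabet, such as stray newlines, are discarded before decoding.
    raw = base64.b64decode(encoded, validate=False)
    key = json.loads(raw)
    if not isinstance(key, dict) or key.get("type") != "service_account":
        raise ValueError("decoded JSON is not a service account key")
    return key
```

If the function raises, the environment variable was probably truncated or copied with extra characters, which is worth ruling out before debugging the pipeline itself.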

    - pulumi/refresh:
        stack: k8s
        working_directory: ${HOME}/project/pulumi-project/
    - pulumi/update:
        stack: k8s
        working_directory: ${HOME}/project/pulumi-project/

These steps use the Pulumi orb to manage infrastructure changes. The pulumi/refresh command synchronizes the Pulumi stack’s state with the actual resources in Google Cloud. This ensures that any changes made outside Pulumi are recognized before proceeding. After that, pulumi/update provisions or updates the defined infrastructure.

The stack value (k8s in this case) refers to the name of your Pulumi stack, while working_directory points to the location of your Pulumi project within the repo. Update this path to match your project structure if it differs.
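To try the same refresh-then-update sequence from your own machine, you can drive the Pulumi CLI from a short script. This is a rough local equivalent and an assumption on my part, not a description of how the orb is implemented: `pulumi_command` and `refresh_then_update` are hypothetical helpers that call `pulumi refresh` and `pulumi up` with the CLI's `--yes`, `--stack`, and `--cwd` flags.

```python
import subprocess
from typing import List


def pulumi_command(action: str, stack: str, work_dir: str) -> List[str]:
    """Build a non-interactive Pulumi CLI command for the given stack."""
    if action not in ("refresh", "up"):
        raise ValueError(f"unsupported action: {action!r}")
    # --yes skips the confirmation prompt; --cwd runs Pulumi in work_dir.
    return ["pulumi", action, "--yes", "--stack", stack, "--cwd", work_dir]


def refresh_then_update(stack: str, work_dir: str) -> None:
    """Sync state with the cloud first, then apply the program's changes."""
    for action in ("refresh", "up"):
        # check=True stops the sequence if a command exits with an error.
        subprocess.run(pulumi_command(action, stack, work_dir), check=True)
```

Running `refresh_then_update("k8s", "pulumi-project")` requires the Pulumi CLI on your PATH and the same credentials the pipeline uses.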

Save all the changes and push the code to your GitHub repository.

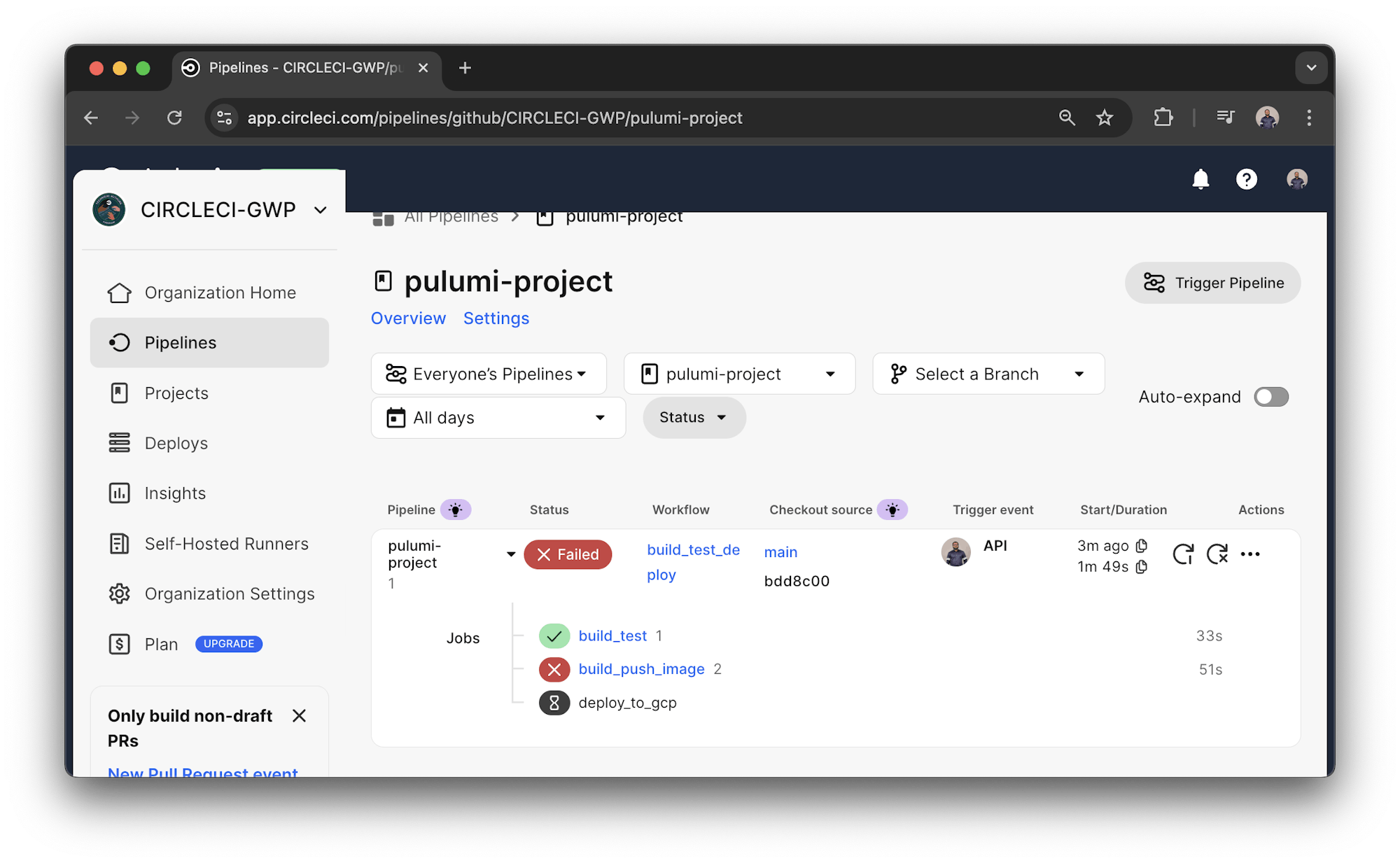

Build project on CircleCI and deploy to GCP

Once you’ve pushed your project to GitHub, navigate to the Projects tab in the CircleCI dashboard and locate your repository.

Click Set Up Project, specify the branch that contains your config.yml, and then click Set Up Project again to trigger the first pipeline run.

This initial build may fail if the required environment variables are not yet set.

Configure environment variables

To allow CircleCI to authenticate with external services like Docker Hub, Google Cloud, and Pulumi, you need to add the following project-level environment variables:

- DOCKER_LOGIN is your Docker Hub username

- DOCKER_PWD is your Docker Hub password

- GOOGLE_CLOUD_KEYS is the base64-encoded content of your GCP service account JSON key

- PULUMI_ACCESS_TOKEN is the access token from your Pulumi account

- PROJECT_ID is your GCP project ID

Add these variables via the Project Settings > Environment Variables section in the CircleCI dashboard.
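A missing variable usually surfaces as a confusing failure deep inside a job, so a small preflight check can save a pipeline run. The sketch below lists the five variables named above; `missing_vars` is a hypothetical helper that you could call at the start of a job or locally.

```python
import os
from typing import List, Mapping

# The project-level variables this pipeline reads.
REQUIRED_VARS = (
    "DOCKER_LOGIN",
    "DOCKER_PWD",
    "GOOGLE_CLOUD_KEYS",
    "PULUMI_ACCESS_TOKEN",
    "PROJECT_ID",
)


def missing_vars(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the required variable names that are unset or empty."""
    return [name for name in REQUIRED_VARS if not env.get(name)]
```

An empty list means every variable is present. The check does not validate the values themselves.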

After saving the variables, re-run the pipeline. If everything is configured properly, you should see the pipeline progress through the following stages:

- build_test runs application tests.

- build_push_image builds and pushes the Docker image.

- deploy_to_gcp provisions infrastructure and deploys the app to GKE.

When complete, your application will be live on a GKE cluster with an external IP address exposed via a LoadBalancer service.

Conclusion

In this tutorial, you learned how to integrate infrastructure as code into a CI/CD pipeline using Pulumi and CircleCI. I showed you how to provision and deploy a containerized Python application to Google Kubernetes Engine (GKE) by combining Pulumi’s flexibility with CircleCI’s automation capabilities.

By using the Pulumi orb, you simplified your pipeline configuration and streamlined infrastructure provisioning. CircleCI orbs not only improve readability and reusability of configuration files but also accelerate delivery by bundling common tasks into reusable components.

While this example focused on GKE, the same principles can be applied to other cloud providers and deployment targets. You can explore the Orb Registry to find orbs tailored to your ecosystem: AWS, Azure, Docker, or testing tools.

To review the full working example and try it yourself, check out the GitHub repository here.